Wikipedia, we have a Google refresh problem!

What did the web crawler say to Wikipedia? I’ll update you later!

So the question is … just how much later?! I’m wondering because there seems to be a discrepancy between how fast Wikipedia’s bots and editors shoot down vandals and how fast Google refreshes its search results to reflect the correction.

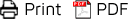

Today I was doing some research on new game technology and, in particular, on a famous game developer who is showcasing it. I typed my query into Google’s search engine and up popped a link to the developer’s Wikipedia page. Except the page information didn’t appear quite how I’d imagined it would in a search engine.

Note: I have removed the developer’s identity for fear of aiding the vandal in his/her smear campaign. The screenshot demonstrates the nature of the problem.

Wow!! This must be so frustrating for those extremely on-the-ball editors at Wikipedia. Imagine shooting down the bad guys only to be taunted by fragments of their evil deeds in the inaccessible cached space of search engines. Not to mention the prolonged embarrassment of the individual who has been the target of the vandalism.

So where does the problem lie? With the search engine’s information refresh rate. Search engines use web crawling agents to read pages on the World Wide Web and extract their keywords. Google also stores a cached version of most pages, which can be viewed even if the original page is down.
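To make that a bit more concrete, here is a minimal sketch of the idea in Python. It is not how Google actually works: `ToyIndex`, the URL and the page text are all made up for illustration. It only shows that the keywords and the cached copy are frozen at the moment of the last crawl.

```python
import re
from collections import defaultdict


class ToyIndex:
    """Hypothetical in-memory stand-in for a search engine's keyword index and page cache."""

    def __init__(self):
        self.keywords = defaultdict(set)  # keyword -> URLs whose last snapshot contained it
        self.cache = {}                   # URL -> page text as of the last crawl

    def crawl(self, url, text):
        # Forget whatever the previous snapshot of this URL contributed.
        for urls in self.keywords.values():
            urls.discard(url)
        # Index every distinct word of the current page text.
        for word in set(re.findall(r"[a-z]+", text.lower())):
            self.keywords[word].add(url)
        # Keep a copy of the page as it looked during this visit.
        self.cache[url] = text

    def search(self, word):
        return sorted(self.keywords.get(word.lower(), set()))


index = ToyIndex()
url = "wiki/Some_Developer"  # placeholder, not a real page

# The crawler happens to visit while the page is vandalised.
index.crawl(url, "Some Developer is a rude insult")

# Editors revert the page, but the crawler has not come back yet.
live_page = "Some Developer is a game designer"

print(index.search("insult"))  # the vandal's keyword still leads to the page
print(index.cache[url])        # the cached copy still shows the vandalised text
```

Until `index.crawl(url, live_page)` runs again, nothing the editors do to the live page reaches the index or the cache.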

Content on the Web tends to be very dynamic by nature, as information is continually modified, added and deleted, so the data that search engines store becomes outdated very quickly. To try to prevent these discrepancies, web pages are rescanned periodically. This can be once a day, once a week or once a month, depending on the information provided by the site administrator or on the search engine’s own statistics. This creates a gap in which we can still see the old content, already removed from the original page, and can still find a link to the page using keywords based on that old content. In this case, when I typed in the three inappropriate words the vandal used, the developer in question appeared first on the list (and, funnily enough, above a “sexy stripper” result).
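How big can that gap get? A rough back-of-the-envelope sketch, assuming the crawler returns at a fixed interval (real search engines adjust their schedules, and the dates below are invented purely for the example):

```python
from datetime import datetime, timedelta


def stale_for(corrected_at, last_crawl, interval):
    """How long after a correction the index keeps serving the old snapshot,
    assuming (hypothetically) a fixed recrawl interval."""
    if interval <= timedelta(0):
        raise ValueError("interval must be positive")
    upcoming = last_crawl
    # Step forward to the first crawl that happens after the correction.
    while upcoming <= corrected_at:
        upcoming += interval
    return upcoming - corrected_at


# Invented timeline: the crawler snapshots the vandalised page at 09:00,
# and an editor reverts the vandalism five minutes later.
last_crawl = datetime(2024, 1, 1, 9, 0)
corrected_at = datetime(2024, 1, 1, 9, 5)

for label, interval in [("daily", timedelta(days=1)),
                        ("weekly", timedelta(weeks=1)),
                        ("monthly", timedelta(days=30))]:
    print(label, stale_for(corrected_at, last_crawl, interval))
```

Under these assumptions, a five-minute act of vandalism stays visible for almost the whole recrawl interval, simply because the crawler happened to visit at the wrong moment.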

So can this problem be solved? Is there a way for Wikipedia editors or users to mark a page to be refreshed sooner? That’s a question for Google (in this case) and other search engines, as this feature seems to be missing. At the time of writing, the issue has persisted for two days and the search result still has not been refreshed to reflect the changes.