Katherine Hayles Keynote Address at the Computational Turn

How many books can a person read in a lifetime? In her keynote address at Swansea University’s Computational Turn workshop, Katherine Hayles surmised that if we read a book a day till we’re 85, it would amount to something like 25,000 books, though realistically the average bibliophile consumes only around 1000-2000 in her life. And so the capacity of our brains to consume text sets the traditional scale of knowledge. We read as much as we can afford to, with our demanding lives, which means not reading that much. The Victorian period, for instance, produced at least 20,000 books; literature departments today study less than 5% of them.

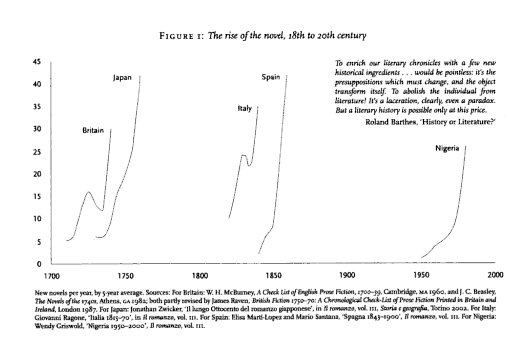

But what if we go about tampering with this scale? For one, you get Franco Moretti’s idea of distant reading. Rather than hunkering down with a book and a cup of coffee, distant readers render details of a massive number of texts into piles of statistical data. This is literary scholarship with no reading necessary; the book itself disappears. Instead you get graphs. One of Moretti’s visualizations shows the emergence of the market for novels in Britain, Japan, Italy, Spain, and Nigeria between about 1700 and 2000. The wild spikes in popularity look like fish hooks, even as each country’s bibliophilia caught on at different times. So computation plus visualization allow us to get a more complete, bird’s-eye view of cultural trends than intensive reading of a few works lumped in a canon.

To some advocates of cultural analytics, this pattern tracing relieves us from foraging for meaning. If we can toss interpretation out the window and allow algorithms to do the work we’re physically incapable of – if we let the machine read for us – then we won’t fumble around with our blind, historically polluted human assumptions. The human is dethroned as the privileged center of understanding; patterns can emerge that can show us what we would never have been aware of otherwise. This shift in scale radically changes the kinds of questions we can ask, and the types of answers we get.

But according to Hayles this new trend introduces an unnecessary binary: close readings that dig out meaning in a book’s narrative on the one hand; meta- or sub-level syntax revealed through thousands of texts on the other. Hermeneutic interpretation vs pattern. Hayles thinks it’s more complicated. What we actually get from new computational methods – of using algorithms, computer programs, and databases to analyze cultural objects – is a distributed cognition system, part in the head of a person, part in the computer. Meaning and analysis, data and narrative are mutual supports. We shouldn’t altogether toss out the traditional humanities. Anyway, someone still has to decide what makes a well-designed algorithm or an interesting pattern.

She illustrated this point using the book Only Revolutions, whose author deliberately “contaminated narrative with database”. The book forces you to read it by turning pages in revolution, since each page contains a poem written in diametrically opposite directions. Hayles and her students created algorithms to discern which words the author, Danielewski, never uses in the work. By feeding the computer a massive word list from English literature, plus the Only Revolutions text, they arrived at a ‘nix list’ of forbidden words: or, see, say, nor, seem, than, they, as, either, like, matter, mean, neither. The art to this type of literary analysis, she claimed, is to find analytic parameters that will reveal interesting patterns.
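The comparison described above boils down to a set difference: words that appear in a reference vocabulary but never in the work. The snippet below is only a minimal sketch of that idea, not the method Hayles and her students actually used; the function names `tokenize` and `nix_list`, the crude letters-only tokenizer, and the tiny sample inputs are all assumptions made for illustration.

```python
import re


def tokenize(text):
    """Return the set of lowercase alphabetic words in `text`.

    Assumption: a word is any run of the letters a-z. A real study
    would need a more careful tokenizer.
    """
    return set(re.findall(r"[a-z]+", text.lower()))


def nix_list(reference_words, work_text):
    """Return, sorted, the reference words that never occur in the work."""
    used = tokenize(work_text)
    reference = {word.lower() for word in reference_words}
    return sorted(reference - used)


# Hypothetical stand-ins for the reference list and the text of the work.
sample_reference = ["or", "see", "say", "sun", "road"]
sample_work = "The sun rose over the road."

print(nix_list(sample_reference, sample_work))  # prints ['or', 'say', 'see']
```

Note that "or" lands on the list even though the sample text contains "over", because words are compared as whole tokens rather than as substrings. Choices like this one are exactly the kind of analytic parameter that still has to be decided by a person.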

Hayles’ example is a new type of close textual reading that unearths patterns consciously designed by the author – who himself could only make such an intricately symmetrical work with the aid of a computer. But what about finding narrative intention on a macro scale, à la Moretti’s distant reading, or Lev Manovich’s work at UC San Diego, which seeks patterns among thousands of amateur Flickr photos that the authors themselves are totally unaware of?

What these larger patterns might hint at, said Hayles, is Nigel Thrift’s concept of a collective, technological unconscious. These are cultural assumptions that aren’t explicitly articulated by a single person but take concrete form through creative and artistic practices. After all, if we’re using the same technology, we all share its design limitations, the unconscious constraints it places on the form and content of cultural works. Any software program embodies assumptions about what constitutes narrative and sequence. But these assumptions only become obvious if we zoom out to observe patterns over time, and we require computational methods to reveal them. We only now have the tools to carry out this kind of distant cultural reading.

So what does that mean for the computational turn? If we’re all in an information-intensive environment that alters both the content and the products of our attention, maybe cultural analytics can help us understand what our current technological unconscious has to ‘say’ about itself. Close reading alone – so old school – just won’t do the trick anymore.