Scientific referencing and hypertext: The necessity of a visual overview

This entry is meant as an introduction to the tool my group and I are developing for our Datavisualisation class. We will be trying to map geographically how different research fields emerged and spread over time. The reasons for wanting to see these developments are diverse, but one of them is the growing amount of (academic) information on the web, which is slowly making it unnavigable. Here I’ll try to illustrate this background and the possible importance of such a tool.

In our modern day and age it gets harder, with every passing year, to imagine what life must have looked like for traditional scholars. Imagine Thomas Aquinas or Roger Bacon working with the tools they had to describe the world, truth and objectivity. Debates over epistemological concepts were fought out through publications spread across decades, generations and sometimes even centuries.

The speed and accessibility of literary developments started to increase. This was partly thanks to cultural shifts that raised literacy and made education more widely available, and partly due to technological inventions that sped up the production and reproduction of books and other writings. After the Gutenberg revolution (the invention of the printing press by Johannes Gutenberg in the 15th century), scientific works spread far more widely, and the exchange between different trains of thought across publications intensified. In fact, the very notion of distinct scientific fields arose only after this development, and those fields have since diversified and specialised. A classical thinker in ancient Greece would occupy himself with questions of physics and mathematics as well as literature and aesthetics. Today, by contrast, we have specialists in every field, be it “quantum mechanics”, “American Beat literature of the 1960s” or “van Gogh’s early works between 1883 and 1889”.

These technological and cultural developments have produced so many different fields of discussion that referencing becomes necessary. It is how we combine works and trains of thought from different contributors to one’s field. The fact that bibliographies and reference lists are such an important part of an academic text today is a clear indication of this. The problems that arise are mainly infrastructural: How do we keep a clear overview of the references? How can we check whether a reference is correct, and how do we fit this enormous set of texts into a library that can hold them? Even if these questions are adequately answered, one important issue remains: How do we index? How can someone reading a text access the referenced works immediately and without much difficulty if he or she has to scour such a vast number of entries in a system?

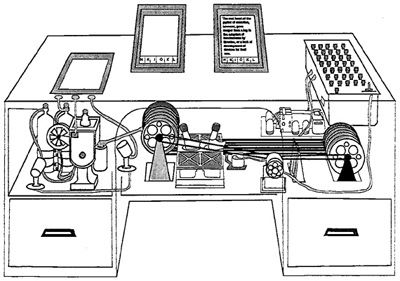

As with most fields, there are always discussions about who the pioneers of that specific field were. In New Media studies there is broad consensus about the central role of scholars like Marshall McLuhan as early new media theorists. There are, however, much older texts, such as Vannevar Bush’s essay on the Memex. In 1945, Bush addressed the ever-growing problem described above: the inability to index all these scientific works in a central database that can access and combine them without much difficulty (Bush, 1945).

Most readers of this article will know about the machine, so there is no need to delve into its specifics here. Suffice it to say that Bush’s idea stressed the importance of a good system that can index references while giving immediate access to the texts involved.

This idea of directly linking different texts from a database later became known as hypertext. Many theorists and scholars discussed and experimented with it, such as Ted Nelson (Project Xanadu) and Douglas Engelbart (inventor of the first computer mouse and a pioneer of graphical interfaces). This work eventually led to Tim Berners-Lee’s World Wide Web. The jump from 1945 to the late 1980s and early 1990s is a long one, but it has its reasons: the period in between is full of debate about how to organise the system of referencing Bush first conceived of. These discussions continue to this day, but the fact remains that the most widely adopted system is the HTTP-based World Wide Web that we all still use today.

This hypertext system, with its direct linking, is a prime example of what early theorists were trying to accomplish. It offers the entire database through a workstation, with texts that are indexed, linked and linkable by the user. In a way, the hypertextual character of the modern internet should be every scholar’s dream come true when it comes to references. Everything is available, and one can keep up to date with current discussions and even contribute to them almost in real time. Some theorists went as far as to announce that the death of the book, with its limited linking possibilities, was finally approaching. This was especially common in the early 1990s, the early days of the World Wide Web. Scholars like George Landow proclaimed that academics’ lives would finally become easier thanks to the new technology’s capacity for organising and referencing.

All that glitters is not gold, however. As one can read in texts like “Is Google Making Us Stupid?” by Nicholas Carr (2008), the internet and its hypertextual character have downsides to be considered. These include information overload and so many diffuse links that we can no longer keep track of what we were looking for. When a scholarly text on global warming is cited 1,563 times, how does that help us, and what does it tell us? All of a sudden, Landow’s hypertextual paradise turns back into the hellish mega-library discussed before.

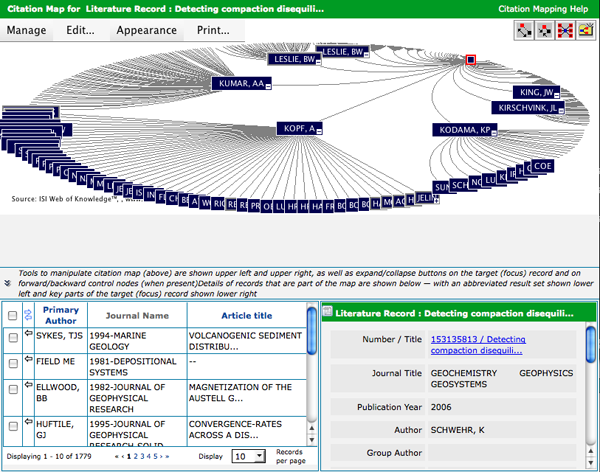

The tool we are developing for this course should bridge at least part of this gap. There are some earlier examples of similar projects, but none of them really fits the bill if one wants to delve into the origins of certain research fields and discussions. The Web of Science, for example, has its own visualisation of references, but it reveals little about the specific relations between texts other than the one queried.

I will describe the tool in more detail in the next post and try to place it within the frameworks outlined here. HTTP and HTML offer many possibilities for text-based interfaces to a scholarly database. Without a visual aid, however, those interfaces remain as unclear and diffuse as scholarship was before the Gutenberg revolution.

References

Bush, Vannevar. “As We May Think.” The Atlantic Monthly. Boston, 1945.

Carr, Nicholas. “Is Google Making Us Stupid?” The Atlantic. Boston, 2008.

Landow, George P. Hyper/Text/Theory. Johns Hopkins University Press: Baltimore, 1992.

Nelson, Ted. “Literary Machines: The report on, and of, Project Xanadu concerning word processing, electronic publishing, hypertext, thinkertoys, tomorrow’s intellectual revolution, and certain other topics including knowledge, education and freedom”. Mindful Press: Sausalito, 1981.