Sciencemappr: Some dataset-related implications

In my previous blog post I posed some questions about the visualization project I am currently working on. The purpose of the project is to visualize global scientific interest in human rights issues, and the emergence and disappearance of scientific disciplines concerned with these issues. In what follows, I point out some dataset-related implications and limitations that we encountered while working on the project.

Information visualization serves to gain insight into complex data for the purpose of discovery and explanation, with the ultimate goal of supporting decision making (Card et al., 1999). Most important is to ask what data is analyzed, in what manner and to what purpose. Conclusions can only be drawn from an information visualization when it is known what kind of information the dataset holds (availability), how correlations between variables within the dataset are defined (analysis strategy) and whether the dataset contains evidence for the insight claimed (integrity). Following these three topics, I will briefly discuss some important issues that need to be addressed and try to validate the visualization project.

Data availability

When designing an information visualization intended to show global scientific work on several human rights issues, the analysis tool would ideally draw on the whole body of existing scientific knowledge. However desirable this may be, it is far from straightforward: the scientific community itself fiercely debates and argues for a better system of scholarly communication and collaboration (Van de Sompel et al., 2004). One of the current problems is the lack of cross-linkage between academic works, even with the internet technology that serves the global library infrastructure. Much academic work is in quarantine, held in closed silos and thus not interlinked (Börner, 2007). The project team therefore had to adjust its expectations of visualizing a dataset that holds all human knowledge and find an alternative, yet representative, dataset. The Thomson Scientific Web of Science (http://isiwebofknowledge.com) is considered one of the most complete databases of scholarly data available. With more than 46.1 million articles, over 11,261 high-impact journals and 65 million cited references captured each year (http://wokinfo.com/realfacts/qualityandquantity/), the dataset is a good basis for reaching the goals of the visualization project.

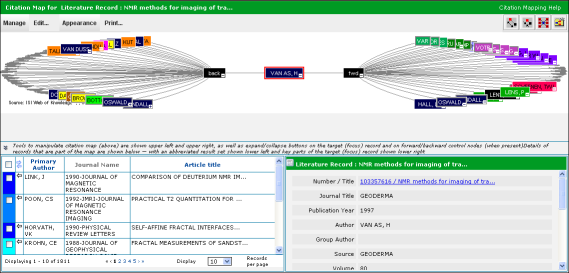

Figure 1 shows a still of the ISI Web of Knowledge visualization tool, which allows for in-depth citation analysis of academic work. Researchers can track an article’s cited and citing references, which gives insight into its wider relationships. With this tree graph, researchers can analyze the citation relationships most important to their own research. Our information visualization project is based on the same dataset but uses a different set of attributes, which allows for a different analysis strategy and thus points to an alternative use of, and perspective on, the available data.
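The idea behind such a citation map can be sketched in a few lines of Python. This is a minimal illustration of the concept, not how the ISI tool is implemented: the article IDs and citation edges are hypothetical, and `citation_map` is a helper invented for this example. It walks the citation graph backwards (the references an article cites) and forwards (the articles that cite it) for a chosen number of hops.

```python
from collections import defaultdict

# Hypothetical citation edges: (citing article, cited article).
CITATIONS = [("A", "B"), ("A", "C"), ("D", "A"), ("E", "A"), ("B", "F")]

def citation_map(edges, article, depth=1):
    """Return (cited, citing) sets for `article`, following up to `depth` hops.

    cited:  works the article cites, and (with depth > 1) the works those cite.
    citing: works that cite the article, and (with depth > 1) works citing those.
    """
    cites = defaultdict(set)     # article -> works it cites
    cited_by = defaultdict(set)  # article -> works that cite it
    for src, dst in edges:
        cites[src].add(dst)
        cited_by[dst].add(src)

    def walk(graph):
        # Breadth-first expansion, one hop per iteration, skipping visited nodes.
        seen, frontier = set(), {article}
        for _ in range(depth):
            frontier = {n for node in frontier for n in graph[node]} - seen - {article}
            seen |= frontier
        return seen

    return walk(cites), walk(cited_by)

cited, citing = citation_map(CITATIONS, "A", depth=2)
print(sorted(cited))   # ['B', 'C', 'F']
print(sorted(citing))  # ['D', 'E']
```

Increasing `depth` widens the map to references of references, which is roughly what a tree-shaped citation view exposes level by level.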

Fig.1 ISI Web of Knowledge ‘Citation Map for Literature Record’

Analysis strategy

As stated earlier, the analysis strategy depends on the variables available in the dataset. To visualize academic work on a geographical map, geolocation variables are needed. Academic articles provide attributes such as the author’s address and affiliation with academic institutions, the discipline the article comes from and the topics of the article. The publication date, citations and co-authorships are also documented over time, which allows for historical citation and collaboration analysis.
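As a rough sketch of how address attributes could become geolocation variables, the snippet below assumes simplified, hypothetical records in which each author address ends with a country name, and it counts every article once per country. The record layout, the `country_of`, `articles_per_country` and `map_points` helpers and the coordinate table are all illustrative assumptions; a real Web of Science export has its own field format and would need proper geocoding.

```python
from collections import Counter

# Hypothetical, simplified records: one list of author addresses per article.
RECORDS = [
    {"id": 1, "addresses": ["Univ Amsterdam, Dept Polit Sci, Amsterdam, Netherlands"]},
    {"id": 2, "addresses": ["Harvard Univ, Sch Law, Cambridge, USA",
                            "Univ Oxford, Fac Law, Oxford, England"]},
    {"id": 3, "addresses": ["Univ Utrecht, Fac Law, Utrecht, Netherlands"]},
]

# Rough, illustrative coordinates (lat, lon); a real tool would geocode
# institutions or cities rather than use approximate country centroids.
COUNTRY_COORDS = {
    "Netherlands": (52.1, 5.3),
    "England": (52.4, -1.5),
    "USA": (39.8, -98.6),
}

def country_of(address):
    """Assume the country is the last comma-separated part of an address."""
    return address.rsplit(",", 1)[-1].strip()

def articles_per_country(records):
    """Count each article once per country among its author addresses."""
    counts = Counter()
    for rec in records:
        counts.update({country_of(a) for a in rec["addresses"]})
    return counts

def map_points(counts, coords):
    """(country, lat, lon, count) tuples for countries with known coordinates."""
    points = [(c, *coords[c], n) for c, n in counts.items() if c in coords]
    return sorted(points, key=lambda p: (-p[3], p[0]))

counts = articles_per_country(RECORDS)
print(map_points(counts, COUNTRY_COORDS))
# [('Netherlands', 52.1, 5.3, 2), ('England', 52.4, -1.5, 1), ('USA', 39.8, -98.6, 1)]
```

Counting per article rather than per author keeps a multi-author paper from inflating a single country’s total.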

These attributes make it possible to visualize in which parts of the world human rights issues are being researched, which institutions and disciplines are most interested in researching these issues, and the level of collaboration between authors, scientific disciplines and institutions. The project team also believes it is worthwhile to investigate whether, and when, particular disciplines have been concerned with human rights issues over time. The emergence of scientific disciplines is influenced by the political climate or the needs of society, and disciplines can likewise disappear as a result of historical and political change (Krishnan, 2009). The project team expects the analysis tool to reveal interesting changes in the landscape of scientific disciplines and collaboration over time, which can trigger and justify further research into the political and social forces behind them.
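A minimal sketch of two of these analyses, assuming each (hypothetical) record already carries a publication year, one or more discipline labels and the authors’ institutions. `discipline_spans` reports the first and last year a discipline appears in the human rights dataset, and `collaboration_counts` counts co-authored articles per pair of institutions; both names are invented for this example. Note that a discipline vanishing from this dataset only suggests a loss of interest in the topic; it does not by itself show that the discipline disappeared.

```python
from collections import Counter
from itertools import combinations

# Hypothetical records from a human rights dataset.
RECORDS = [
    {"year": 1995, "disciplines": ["Law"], "institutions": ["Univ A", "Univ B"]},
    {"year": 2000, "disciplines": ["Law", "Political Science"], "institutions": ["Univ A"]},
    {"year": 2005, "disciplines": ["Political Science", "Public Health"],
     "institutions": ["Univ B", "Univ C"]},
    {"year": 2010, "disciplines": ["Public Health"], "institutions": ["Univ C", "Univ A"]},
]

def discipline_spans(records):
    """Map each discipline to the (first, last) year it appears in the dataset."""
    spans = {}
    for rec in records:
        year = rec["year"]
        for d in rec["disciplines"]:
            first, last = spans.get(d, (year, year))
            spans[d] = (min(first, year), max(last, year))
    return spans

def collaboration_counts(records):
    """Count co-authored articles for each unordered pair of institutions."""
    pairs = Counter()
    for rec in records:
        # Sorting makes ('Univ A', 'Univ C') and ('Univ C', 'Univ A') the same key.
        for pair in combinations(sorted(set(rec["institutions"])), 2):
            pairs[pair] += 1
    return pairs

print(discipline_spans(RECORDS))
# {'Law': (1995, 2000), 'Political Science': (2000, 2005), 'Public Health': (2005, 2010)}
print(collaboration_counts(RECORDS).most_common())
```

Within an observation window of 1995 to 2010, ‘Law’ would look like a discipline whose interest faded after 2000, while ‘Public Health’ appears to emerge in 2005.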

Knowledge mining and integrity

One of the issues in analyzing the dataset concerns the accuracy of data extraction from the ISI Web of Knowledge database. Highly sophisticated automated text-mining methods exist, but unfortunately they are beyond the reach of the project team’s resources. Text mining is one of the most widely applied approaches to making sense of a dataset of scholarly work, but it has some important implications. It is possible to search for particular words in a text, for instance in the abstract or the keywords of an article. This is a reliable method if the articles are written according to the scholarly conventions and specific language of the academic discipline or domain (Börner, 2009). Because different authors are likely to use and interpret these keywords differently, chances are that some articles that are not about human rights issues will slip in and harm the integrity of the dataset used for analysis.

It will be especially difficult to separate the different meanings and usages of words across disciplines. A classic example is the word ‘rock’, which illustrates the simple fact that a word can have entirely different meanings (polysemy). A musicologist, for instance, uses ‘rock’ to refer to a music genre, whereas an archaeologist means stone. One way of dealing with this issue is to use the discriminating search operator ‘NOT’ in the search queries that build the dataset for the information visualization tool. In the example above, a musicologist would use the combined query ‘rock NOT archaeology’ to filter out archaeology articles that are irrelevant to their research. Still, this method always involves some level of compromise, as people are simply too creative with words and their meanings. It is therefore necessary to strike a balance between the quantity and the quality of the dataset.
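The ‘rock NOT archaeology’ query and the quantity/quality trade-off can be illustrated with a small, hypothetical hand-labelled sample. The sketch below uses a deliberately naive word filter (it is not the Web of Science query engine) and measures precision (the share of retrieved articles that are relevant, a proxy for quality) and recall (the share of relevant articles that are retrieved, a proxy for quantity). The sample texts, labels and function names are invented for illustration.

```python
import re

# Hypothetical, hand-labelled sample: (title plus keywords, relevant to a
# musicologist interested in rock music?)
SAMPLE = [
    ("Rock festivals and youth culture; musicology, rock", True),
    ("Rock shelters of the Dordogne; archaeology, rock art", False),
    ("Rock music on Cold War radio; history, rock", True),
    ("Rock weathering rates in alpine valleys; geology", False),
    ("Punk and new wave scenes; musicology, punk", True),
]

def tokens(text):
    """Lower-cased word tokens (deliberately naive: no stemming, no phrases)."""
    return set(re.findall(r"[a-z]+", text.lower()))

def matches(text, include, exclude=()):
    """'A NOT B': some include term occurs and no exclude term occurs."""
    words = tokens(text)
    return any(t in words for t in include) and not any(t in words for t in exclude)

def precision_recall(records, include, exclude=()):
    """Precision and recall of the query against the hand-made labels."""
    tp = fp = fn = 0
    for text, relevant in records:
        hit = matches(text, include, exclude)
        if hit and relevant:
            tp += 1
        elif hit:
            fp += 1
        elif relevant:
            fn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

print(precision_recall(SAMPLE, {"rock"}))                   # (0.5, 0.6666666666666666)
print(precision_recall(SAMPLE, {"rock"}, {"archaeology"}))  # (0.6666666666666666, 0.6666666666666666)
```

On this sample, adding `NOT archaeology` raises precision from 0.5 to about 0.67, but the geology article still slips through and the punk article is never found, which is the kind of compromise described above.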

Fig.2 Visual of the preliminary design of the Sciencemapper analysis tool. Designed by: Hero, K. & Kamphuis, K. (2011)

Figure 2 shows a preliminary design of the Sciencemapper tool produced by the designers on the project team. As the interface shows, the user can highlight particular disciplines and institutions, and the selection is reflected on the map, which shows the geographical locations of academic articles. The size of each dot on the map indicates roughly how many articles have been published there on one of the human rights issues. It is thus immediately clear where interest is highest, and the time bar shows how this changes over time. We expect the bar chart, together with the size, emergence and disappearance of the geolocated dots on the map, to point to interesting changes in the landscape of scientific disciplines and in attention to human rights issues. Hopefully this raises enough important questions that deserve an answer.
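The dot sizes and time bar could work roughly as follows. This sketch assumes hypothetical (location, year) pairs, one per article, counts the articles inside the selected time window, and scales each dot so that its area, rather than its radius, is proportional to the article count. The function names and the 20-pixel maximum radius are assumptions, not part of the Sciencemapper design.

```python
import math
from collections import Counter

# Hypothetical (location, year) pairs, one per article on a single issue.
ARTICLES = [
    ("Amsterdam", 1999), ("Amsterdam", 2004), ("Amsterdam", 2009),
    ("Amsterdam", 2010), ("Geneva", 2005), ("Geneva", 2010),
    ("Nairobi", 2010),
]

def counts_in_window(articles, start, end):
    """Articles per location published within the time-bar window [start, end]."""
    return Counter(loc for loc, year in articles if start <= year <= end)

def dot_radius(count, max_count, max_radius=20.0):
    """Radius scaled so that the dot's area, not its radius, tracks the count."""
    return max_radius * math.sqrt(count / max_count)

counts = counts_in_window(ARTICLES, 2000, 2010)
top = max(counts.values(), default=1)  # default avoids a ValueError on an empty window
radii = {loc: round(dot_radius(n, top), 1) for loc, n in counts.items()}
print(radii)  # {'Amsterdam': 20.0, 'Geneva': 16.3, 'Nairobi': 11.5}
```

Scaling the radius with the square root of the count keeps a location with twice as many articles from looking four times as large, so the map does not exaggerate differences in interest.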

References:

Börner, K. (2007) ‘Making sense of mankind’s scholarly knowledge and expertise: collecting, interlinking, and organizing what we know and different approaches to mapping (network) science’. School of Library and Information Science, USA.

Card, S.K., Mackinlay, J.D. & Shneiderman, B. (1999) ‘Readings in Information Visualization: Using Vision to Think’. San Diego: Academic Press, USA.

Krishnan, A. (2009) ‘What are Academic Disciplines? Some observations on the Disciplinarity vs. Interdisciplinarity debate’. University of Southampton, National Centre for Research Methods.

Van de Sompel, H. et al. (2004) ‘Rethinking Scholarly Communication: Building the System that Scholars Deserve’. D-Lib Magazine, September 2004. Retrieved on 27-04-2011. http://www.dlib.org/dlib/september04/vandesompel/09vandesompel.html

Web of Knowledge (2011) ‘Real Facts: Real Numbers: Real Knowledge’. Retrieved on 27-04-2011. http://wokinfo.com/realfacts/qualityandquantity/