Data mining at The Times

Data mining, also known as KDD (Knowledge Discovery in Databases), is a subfield of computer science that has grown considerably in recent years. It has rapidly become a discipline known to a wide audience, mainly because of its use in new media. As open data have become increasingly available, professionals such as data miners have come to hold crucial positions within editorial teams. Although data mining applied to journalism may already have been explored, an insider's perspective can help move beyond the dangerous naivety that often leads to biased descriptions of such a specific field.

Stefano Ceccon is a data mining specialist at The Times, a postdoctoral research assistant at City University of London and a co-founder of Tribe Apps; above all, he is someone who can offer a deep understanding of this subject.

Stefano, how would you define data mining and its use at The Times?

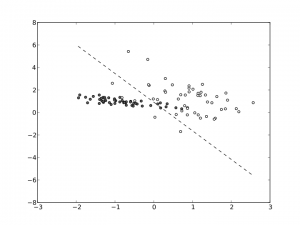

A general but accurate definition could be “the process of extracting knowledge from data”. Although it is often associated with data journalism or data science, the term has actually been used for many years in relation to machine learning and statistics, and it refers to a technical set of algorithms and approaches for analysing complex or big data. In its more technical form, data mining lies well outside journalists’ usual skills, and often outside those of classical statisticians too, because it relies on computer programming expertise. At The Times and The Sunday Times I am part of a team of three people: one journalist, one data scientist and one programmer. Our skills overlap considerably: I am a data scientist but also have programming skills, while our programmer can do some data analysis. We work together as a data journalism unit, providing outputs and analysis to the news desk.

Could we say that data mining will, at some point, bypass what Lovink called “the art of asking the right question”?

I don’t believe in the power of blind data analysis. As recently pointed out by David Hand at the IDA conference and Tim Harford at the Royal Statistical Society conference, the dangers of big data are overlooked by the public, and even by scientists. There are crystal clear examples of this, for instance Google Flu, which was cited as a good example of a data-driven algorithm performing better than traditional statistical monitoring techniques for flu prediction. Then, one day, it started going bonkers, and it is not clear why. That said, we have good examples of genuine data-driven findings and predictions that worked really well, so I do believe that data can give valuable insights and help find novel patterns. After all, humans are not naturally good at probability, as they are inherently biased.

As Van Dijck stated, “transparency of procedures and methods for gathering sources are key factors for progress in the area of knowledge production.” Most people lack the scientific knowledge needed to discuss epistemological issues or biases in data mining. Who is supposed to verify that you are not manipulating data?

As in the past, journalists trust other parties’ analyses and compare their experience in the field with the findings that data analysis produces. It is a feedback system which provides some form of checks. The other important aspect of checking is made possible by transparency: analysts should share the code behind their analysis so that it can be checked. We do plan to put our code on GitHub or similar websites, although so far we have not done so. For now, we publish the methodology of our analysis on our blog. I think we have a responsibility for what we do, and I always put integrity and robustness first. In some cases this is not easy, as the public often wants the big headline, but we have to stick to what the data tell us.

Nowadays data mining and data-driven journalism actively contribute to knowledge production. Isn’t it dangerous that this power is ceded to a few scientists?

Scientists are enablers and can rarely act or take decisions that directly affect people. The power is held by those who take decisions at higher levels, for example big corporations or editors. The bigger danger is in fact the lack of expertise in the field. Every day, new tools to process, analyse and present data are being published, but this is clearly not being matched by the “production” of experts. The result is a gap that, if filled by the wrong people, can have disastrous effects for the public and for science as well.

References

Butler, Declan. 2013. “When Google Got Flu Wrong.” Nature 494: 155–56. doi:10.1038/494155a. http://www.nature.com/news/when-google-got-flu-wrong-1.12413.

Lovink, G. 2008. “The Society of the Query and the Googlization of Our Lives: A Tribute to Joseph Weizenbaum.” Eurozine. http://www.eurozine.com/articles/2008-09-05-lovink-en.html.

Dijck, José van. 2010. “Search Engines and the Production of Academic Knowledge.” International Journal of Cultural Studies 13 (6): 574–92. doi:10.1177/1367877910376582.