Artificial intelligence for everyone: indico.io

Image: Still from Amazon Machine Learning introduction video

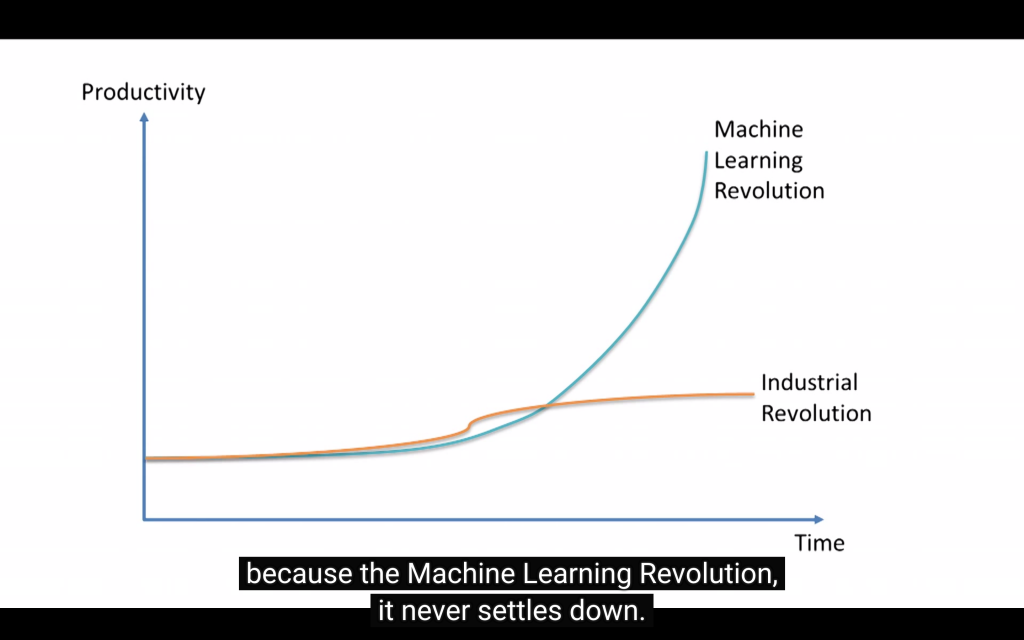

Artificial Intelligence (AI) has developed alongside computing since its earliest days. Today, thinking about AI may bring to mind Deep Blue, the chess computer famous for beating Kasparov, smartphone personal assistants like Siri, or the pop-cultural dystopian visions of AI turning against humans. But with the ever-rising amount of Big Data, AI has gained importance in helping data scientists make sense of data. While this technology has long been available to the technology giants and their laboratories, the barrier to mass application of AI was rather high. Recent advances in AI research (especially in the subdiscipline of machine learning) and falling hardware costs have opened this technology to a broader public (Stafford 2015). Besides the engagement of the Big Four (Amazon, Apple, Facebook, Google), this has caused a surge of start-ups building and optimizing AI applications (Zilis 2014).

Images: indico website

This development has led to a proliferation of AI application programming interface (API) providers. APIs help software or hardware developers solve complex or resource-intensive problems by providing a simple interface. They are the black box of programming, since a thorough understanding of an API's inner workings is not needed to make use of it. Integrating an API for very complex data mining problems relieves the developer of two burdens: the high cost of maintaining an infrastructure, and the development of the statistical or machine learning models needed for analysis, which is externalized to the provider. Indico.io is one of the many recently launched AI APIs that make their services available to a wide audience. It provides “pre-trained models”, which are pre-built solutions for common tasks. One of these models is a tool for political text analysis: through the API, programmers have access to an AI model that recognizes the political alignment of a text and rates it as more conservative or more liberal. Similar tools, such as sentiment analysis, automated text tagging or emotion recognition, are also available.
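To make the black-box character concrete, here is a minimal sketch of what calling such a pre-trained model might look like from the developer's side. Everything in it is hypothetical: the function name `analyze_political`, the two labels and the keyword-based stub stand in for a hosted service and are not indico.io's actual interface or method.

```python
from typing import Callable, Dict


def _stub_backend(text: str) -> Dict[str, float]:
    """Hypothetical stand-in for a hosted, pre-trained model.

    In a real setting this would be a network call to the provider; the
    training data and the statistical method stay on the provider's side.
    The keyword cues below are invented purely for illustration.
    """
    conservative_cues = ("tradition", "tax cuts")
    liberal_cues = ("welfare", "regulation")
    lowered = text.lower()
    # Start each count at 1 so that a text without cues scores 0.5 / 0.5.
    conservative = 1 + sum(cue in lowered for cue in conservative_cues)
    liberal = 1 + sum(cue in lowered for cue in liberal_cues)
    total = conservative + liberal
    return {"conservative": conservative / total, "liberal": liberal / total}


def analyze_political(
    text: str,
    backend: Callable[[str], Dict[str, float]] = _stub_backend,
) -> Dict[str, float]:
    """Return one score per label; the caller sees only text in, numbers out."""
    if not text.strip():
        raise ValueError("text must not be empty")
    return backend(text)


scores = analyze_political("We need lower taxes and respect for tradition.")
# Report the label with the highest score.
print(max(scores, key=scores.get), scores)
```

The point of the sketch is the shape of the interaction rather than the toy scoring: the developer passes a string and receives numbers, with no view into how the labels were defined or what data produced them.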

Image: Demis Hassabis, CEO, DeepMind Technologies – The Theory of Everything

The underlying data used for training the models and the statistical methods applied in these APIs are not necessarily known to the developers using them. They remain a hidden layer in the software development process, even to the developers themselves. The CEO of indico.io writes in the tech magazine TechCrunch: “Deep-learning models can separate signal from noise, finding patterns that would typically take experts months to codify.” (Victoroff 2015) This cybernetic notion of explaining AI is repeated by other experts in the field. The founder of DeepMind (famously acquired by Google) talks about how “A(G)I automatically converts information into knowledge” (Hassabis 2015). Information is seen as part of a sender-receiver model which, when just enough feedback (training) is added, produces knowledge.

Image: Still from TED Talk The wonderful and terrifying implications of computers that can learn by Jeremy Howard

When looking into the discourse surrounding AI and machine learning, one notices a strategy comparable to those used by cybernetics from the 1950s to the 1970s. Cybernetics tried to establish itself as a universal science that “could operate either as the primary discipline, directing others on the search for truth, or as a discipline providing analytic tools indispensable to the development and progress of others.” (Bowker 1993) Data scientists take a similar position when they emphasize their lack of domain knowledge while solving the problems of other disciplines. Foucault tells us to be attentive to a discourse that presents itself with a claim of observation and neutrality:

“And the discourse that would then take form would no longer have that old artificial and clumsy theatricality: it would develop in a language that would claim to be that of observation and neutrality. The commonplace would be analyzed through the efficient but colorless categories of administration, journalism, and science.” (Foucault 2009, 171-2)

The aforementioned proliferation of AI APIs leads to a further and deeper implementation of AI-produced knowledge, as can be seen in the very simplified form of basic sentiment analysis provided by indico.io. It becomes an everyday commodity of the digital objects that permeate our society. This raises the question of what kind of knowledge is produced. The belief in the universalization of data science as a science able to describe society by technical means manifests itself suddenly and very directly when one looks at AI-generated “political analysis”.

References:

Bowker, Geoff. “How to Be Universal: Some Cybernetic Strategies, 1943-70.” Social Studies of Science Vol. 23 (1993): 107–27.

Foucault, Michel. Security, Territory, Population: Lectures at the Collège de France 1977–1978. St Martins Press, 2009.

Hassabis, Demis. “DeepMind Technologies – Theory of Everything.” 12 May 2015. 12 Sept. 2015.

Stafford, Jan. “Predictive Analytics Tools: How APIs, AI Make Data Accessible.” 13 Sept. 2015. 12 Sept. 2015. <http://searchsoa.techtarget.com/feature/Predictive-analytics-tools-How-APIs-AI-making-data-accessible>.

Victoroff, Slater. “Big Data Doesn’t Exist.” TechCrunch 10 Sept. 2015. 12 Sept. 2015. <http://social.techcrunch.com/2015/09/10/big-data-doesnt-exist/>.

Zilis, Shivon. “The Current State of Machine Intelligence.” Medium. 10 Dec. 2014. 12 Sept. 2015.