Autonomy and why vehicles won’t stop killing, but they’ll try

Semi-autonomous vehicles (SAVs) have been around in some form for a few years now, and they are becoming ever more present on city streets. Just a couple of years ago few auto manufacturers offered any kind of autonomy in their vehicles; now most offer at least some basic form of it, from emergency braking to lane assist. Both technologies let the vehicle’s computer system override human inputs if the conditions are deemed dangerous enough. A handful of manufacturers (e.g. Tesla) go further, offering vehicles that can drive themselves with minimal driver input. The obvious direction for this technology is total vehicle autonomy, and the race to get there is a heated one.

So while SAVs are not new, the debate around their ethical responsibility, and that of the software and the manufacturer, is particularly current. It takes only a few simple demonstration videos on YouTube to show some obvious safety issues with SAVs.

Recently it has come to light that possibly two people have died in Tesla vehicles while Autopilot (Tesla’s autonomous driving mode) was activated (Boudette:2016vf). SAVs have often been cited for their low fatality rate compared to human drivers, but if the second death turns out to be Autopilot’s fault then the rate will worsen from one fatality in 130 million miles to one in every 65 million miles. That is similar to the rate for human drivers globally (approximately one in 60 million miles) and considerably worse than the US average of one in 94 million miles (teslacom:ty). As a result, safety concerns surrounding autonomous vehicles (AVs) have been a topic of much academic and social debate.
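The arithmetic behind these figures can be checked with a short sketch. It assumes the roughly 130 million Autopilot miles cited above stay fixed while the fatality count goes from one to two; the function and constant names are illustrative only.

```python
# Miles driven with Autopilot engaged, as cited in the text above.
AUTOPILOT_MILES = 130_000_000

# Benchmarks cited in the text above: average miles driven per fatality.
US_MILES_PER_FATALITY = 94_000_000
GLOBAL_MILES_PER_FATALITY = 60_000_000


def miles_per_fatality(total_miles: int, fatalities: int) -> float:
    """Average distance driven per fatality (a higher number is safer)."""
    if fatalities <= 0:
        raise ValueError("need at least one fatality to compute a rate")
    return total_miles / fatalities


one_death = miles_per_fatality(AUTOPILOT_MILES, 1)
two_deaths = miles_per_fatality(AUTOPILOT_MILES, 2)

print(f"One death:  {one_death / 1e6:.0f} million miles per fatality")
print(f"Two deaths: {two_deaths / 1e6:.0f} million miles per fatality")
print("Still better than the global average:", two_deaths > GLOBAL_MILES_PER_FATALITY)
print("Still better than the US average:", two_deaths > US_MILES_PER_FATALITY)
```

With so few events, a single additional death is enough to halve the figure and move Autopilot from ahead of the US average to behind it.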

It is the old philosophical trolley problem dressed up for the 21st century.

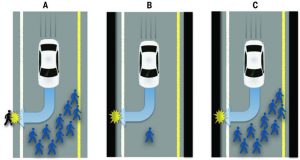

“The social dilemma of autonomous vehicles”, published in the journal Science (Bonnefon, Shariff, Rahwan 1573), surveyed people’s (conflicting) opinions about autonomous vehicle safety. The people surveyed wanted AVs to minimise casualties in extreme circumstances, so when given the option between killing one passenger or ten pedestrians, most respondents chose to sacrifice the one to save the ten. When the question was rephrased so that the respondent would be the passenger who would be sacrificed, opinions changed radically, from collective good to personal interest. As Iyad Rahwan (associate professor in the MIT Media Lab) puts it, “everybody wants their own car to protect them at all costs” (MITNews:ui).

This debate around safety is at odds with users of the technology who purposely misuse it. In late 2015 a Tesla set the record for the fastest coast-to-coast drive in an electric vehicle, crossing the US in under 58 hours with Autopilot engaged for 96% of the journey (Transportation:tg). Reese (one of the vehicle’s passengers) said they travelled “at speeds around 90mph”; the maximum posted speed limits in the states on their route were all between 70 and 80mph, so they were actively forcing the car to break the law. I say the ‘car’ is breaking the law, although the situation is ambiguous. Clearly the passengers are deciding the speed at which the vehicle should travel, but to all intents and purposes the car is doing the majority of the driving.

The question of who would be at fault if a vehicle crashed while in its autonomous driving mode is currently a legal grey area (Lewis:tr). To mitigate potential accidents, SAVs and AVs must continually assess risk: the risk of travelling at a speed inappropriate for the conditions; of making necessary but dangerous manoeuvres; or of making a decision that affects other road users to avoid a worse outcome (i.e. the lesser of two evils) (Goodall 93).

The risk must be apportioned correctly to each affected party. Goodall writes that autonomous vehicles “can predict various crash trajectory alternatives and select a path with the lowest damage” and that “an automated vehicle’s decisions that preceded certain crashes had a moral component” (Goodall:2014uc). Accidents are bound to happen, especially if the car is faced with a decision where there is no positive outcome and the driver cannot take appropriate action in time. Ultimately the vehicle will decide which course of action to take based on the program’s code (Doctorow:2015wn). So if a poor decision is made (in the eyes of the law), will the programmer who wrote that piece of code be reprimanded? After all, they will be writing the hierarchy of who is most to least valuable in any given situation, and the assessment of these criteria must be ongoing, lest an accident happen unexpectedly (like so many accidents do!).
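To make the point about code concrete, here is a minimal sketch of what selecting “a path with the lowest damage” could look like. It is not how any manufacturer’s software works: the `Trajectory` class, the `expected_harm` formula, the weights, and the probabilities are all hypothetical, chosen only to mirror the one-passenger-versus-ten-pedestrians scenario from the survey.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trajectory:
    """One candidate path the car could take (hypothetical model)."""
    name: str
    collision_probability: float  # made-up estimate between 0 and 1
    passengers_at_risk: int
    pedestrians_at_risk: int


def expected_harm(t: Trajectory, passenger_weight: float, pedestrian_weight: float) -> float:
    """Collision probability times the weighted number of people at risk."""
    weighted_people = (passenger_weight * t.passengers_at_risk
                       + pedestrian_weight * t.pedestrians_at_risk)
    return t.collision_probability * weighted_people


def choose_trajectory(options, passenger_weight=1.0, pedestrian_weight=1.0):
    """Pick the candidate with the lowest expected harm under the given weights."""
    return min(options, key=lambda t: expected_harm(t, passenger_weight, pedestrian_weight))


options = [
    Trajectory("stay in lane", 0.9, passengers_at_risk=0, pedestrians_at_risk=10),
    Trajectory("swerve into barrier", 0.9, passengers_at_risk=1, pedestrians_at_risk=0),
]

# Everyone counts equally: the car sacrifices its one passenger.
utilitarian = choose_trajectory(options)
# The passenger is valued twenty times higher: the car stays in its lane.
self_protective = choose_trajectory(options, passenger_weight=20.0)

print(utilitarian.name)
print(self_protective.name)
```

The only difference between the two outcomes is a single number chosen by whoever wrote the code, which is exactly the hierarchy of value described above.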

Between this and the other aforementioned ethical, moral, and legal problems with SAVs and AVs, it is no wonder that there is currently heated debate surrounding the topic. As Bonnefon (research director at the Toulouse School of Economics) puts it, “The moral dilemma for AV is something brand-new. We’re talking about owning an object which you interact with every day, knowing that this object might decide to kill you in certain situations” (Gent:wa).

BBC Radio 4. The Trolley Problem. 18 September 2016. https://www.youtube.com/watch?v=bOpf6KcWYyw

Bonnefon, Jean-François, Azim Shariff, and Iyad Rahwan. “The Social Dilemma of Autonomous Vehicles.” Science (New York, N.Y.) 352.6293 (2016): 1573-6. 17 September 2016.

Boudette, Neal E. “Autopilot Cited in Death of Chinese Tesla Driver.” The New York Times 2016. 17 September 2016. nytimes.com/2016/09/15/business/fatal-tesla-crash-in-china-involved-autopilot-government-tv-says.html

chamber361. Insane Volvo Brake Test Epic Fail. 17 September 2016. https://www.youtube.com/watch?v=aNi17YLnZpg

Davies, Alex. “Obviously Drivers Are Already Abusing Tesla’s Autopilot.” WIRED. N.p., n.d. Web. 17 Sept. 2016. wired.com/2015/10/obviously-drivers-are-already-abusing-teslas-autopilot/

Dizikes, Peter. “Driverless Cars: Who Gets Protected?” MIT News. N.p., n.d. Web. 17 Sept. 2016. news.mit.edu/2016/driverless-cars-safety-issues-0623

Doctorow, Cory. “The Problem with Self-Driving Cars: Who Controls the Code?” The Guardian 2015. 16 September 2016. theguardian.com/technology/2015/dec/23/the-problem-with-self-driving-cars-who-controls-the-code

Gent, Edd. “Moral Dilemma of Self-Driving Cars: Which Lives to Save in a Crash.” Live Science. 23 June 2016. Web. 17 Sept. 2016. livescience.com/55175-self-driving-cars-moral-dilemma.html

Goodall, Noah. “Ethical Decision Making During Automated Vehicle Crashes.” Transportation Research Record: Journal of the Transportation Research Board 2424 (2014): 58–65. 16 September 2016. trrjournalonline.trb.org/doi/10.3141/2424-07

Goodall, Noah J. “Machine Ethics and Automated Vehicles.” Lecture Notes in Mobility. Ed. Gereon Meyer and Sven Beiker. Springer International Publishing, 2014. 93–102. Print.

Lewis, Tanya. “Rules for Self-Driving Cars in Legal Gray Area.” Live Science. 22 May 2014. Web. 18 Sept. 2016. livescience.com/45815-self-driving-cars-in-legal-gray-area.html

RockTreeStar. Tesla Autopilot Tried to Kill Me! 15 Sept. 2016 https://www.youtube.com/

“A Tragic Loss.” tesla.com. N.p., 2016. Web. 18 Sept. 2016. tesla.com/blog/tragic-loss