Dissecting Facebook’s Censorship

Napalm Girl by Nick Ut (AP). Retrieved from http://fortune.com/2016/09/09/facebook-napalm-photo-vietnam-war/

Since September 2016, Facebook’s banning of the “Napalm Girl” photograph, one of the most powerful images of the atrocities of the Vietnam War, has sparked a debate on Facebook’s censorship power and policies. In the 1972 photograph, Kim Phuc, a nine-year-old Vietnamese girl, is seen running naked among other children to escape a napalm attack. The photograph was shared on the Facebook page of the Norwegian newspaper Aftenposten and subsequently removed by Facebook, which initially claimed the image violated the company’s policies on displaying nudity (Ingram 2016). Although Facebook later reinstated the photo, with a brief explanation that “the value of permitting sharing outweighs the value of protecting the community by removal” (cited in Time 2016), the incident made Internet users more aware of the censorship power of social network websites.

However, Facebook’s internal censorship process remains a mystery at best (Heins 2013-2014), and the “Napalm Girl” photograph was only one example among Facebook’s many disputable content takedowns (Onlinecensorship 2017). Thus, our research aims to shed light on what content Facebook takes down, as well as why and how the company makes certain judgments about content. Then, drawing upon our own research, we will challenge the common assumption that social network sites in general, and Facebook in particular, are forums for the free exchange of ideas and public discourse (Diamond 2010, Shirky 2011, Rappler 2015). As Gillespie (cited in Dewey 2016) observed, “The myth of the social network as a neutral space is crumbling, but it’s still very powerful”. We will unravel this myth with empirical research and finally make some suggestions regarding content moderation and censorship on social networks.

Why Facebook’s censorship matters

As of June 2017, Facebook had 2 billion users (Zuckerberg 2017), almost one-quarter of the earth’s population (22.9%) (Statista 2017). This large user base makes Facebook one of the most dominant social network websites, which are argued to be important tools for coordinating political movements, supporting the public sphere, and facilitating political expression and debate (Jackson 2014, Shirky 2011). Thus, its content censorship could have a tremendous impact on matters of public concern.

There is evidence that Facebook has complied with government requests to block certain content in Vietnam, Pakistan, Russia, Turkey, India, and other countries (Isaac 2016, Pham 2017). Facebook said in an emailed statement, “we review all these requests against our terms of service and applicable law. We are transparent about the requests we receive from government and content we restrict pursuant with local law in our Global Government Requests Report” (cited in Pham 2017). The above-mentioned report for the last six months of 2016 shows that Facebook restricted specific content in 19 countries (Facebook 2017). While we recognize the company’s effort to share this statistic, it is important to note that the full details of thousands of locally restricted items are nowhere to be found apart from 15 examples in the FAQ. Similarly, content removed either automatically by the filtering algorithm or by Facebook personnel disappeared without leaving a trace unless users kept a record of it themselves. This raises questions about Facebook’s transparency and the legitimacy of its censorship decisions.

It is also important to note that Facebook is not just a platform for the exchange of opinions and ideas, but a de facto infrastructure. Branding Facebook’s murky stance on censorship as an invisible structure seems fitting because details of the censorship rules and procedures are not presented anywhere. Gillespie (2012) explained Facebook’s lack of transparency about its censored content as follows: “They generally do not want to draw attention to the presence of so much obscene content on their sites, or that they regularly engage in “censorship” to deal with it. So the process by which content is assessed and moderated is also opaque”. Therefore, our research will aim to expose and examine this invisible infrastructure as best we can.

Facebook’s censorship process

Leaked guidelines for Facebook moderators led to an investigative series called the “Facebook Files”, conducted by The Guardian newspaper (Hopkins 2017). The series revealed that content moderators use these manuals and rely upon their own linguistic and cultural knowledge to decide whether to remove or keep the content, or to pass the report on for further review. Besides the content moderation triggered by complaints, Facebook employees also proactively look at content that potentially “violates their Community Standards” (Gillespie 2012).

In addition to Facebook’s internal appeals process, we should also consider the role of users in content censorship. Facebook users can flag posts that they think Facebook should moderate, which means users share in Facebook’s power to censor. However, we should consider whether flagging really gives users power over the content or whether it is a way for Facebook to justify the removal of certain content (Crawford & Gillespie 2016).

Undoubtedly, Facebook has the right to control the type of content shared on its platform through its terms and conditions, which users agree to when they use it. Moderation on social media networks is also very important, as the platforms could be used for the worst reasons imaginable, e.g. recruiting fighters for terrorist organisations. However, such moderation has the potential to be misapplied or abused. For example, social network websites might take down content that they believe could harm their business practices or those of their partners. Furthermore, these platforms face external pressure, especially from repressive regimes, to suppress content that the regimes believe could have consequences for their political agendas or offend the cultural traditions of their countries (Jackson 2011). Such moderation practices are no longer neutral or for the social good but a kind of censorship, as they are undertaken to obscure speech, political opinions, and debate (Roberts 2017).

While scholarship has studied the impact of Facebook’s censorship on society (Jackson 2014, Heins 2013-2014, Dencik 2014), empirical research on the types of content restricted on this social network site is still rare. Thus, our research is an effort to fill this gap and, from there, to reassess the role of Facebook in facilitating freedom of expression.

Methodology

Data was collected for the years 2015, 2016 and 2017 via Google searches and www.onlinecensorship.org. This website reports on censored content and weekly takedowns as reported by users. The data was collected via the “News and Analysis” tab under the “Resources” header. It was then filtered by platform (Facebook) and by “Weekly Takedown”, with the theme left unspecified to allow us to explore all the takedowns. Because the site only went live in 2016, it has no information from before that period. For this reason, we turned to Google with the search term “Facebook censor 2015”. We read the news results and selected those that mentioned specific content taken down by Facebook. Content that was removed and then reinstated due to pressure from the community is also included in the research because similar content could be a potential subject of censorship.

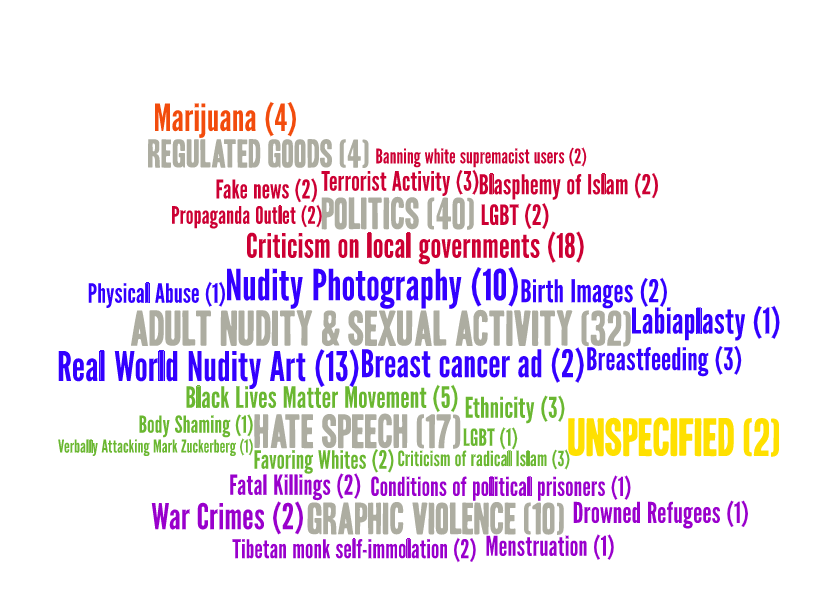

The data we found was then categorised, largely according to Facebook’s community guidelines, by means of textual analysis. Categorisation drew on both the Community Standards and the leaked documents detailing the moderation standards. These categories were later combined into a word cloud in which the words are colour coded: grey for the main categories from Facebook’s community guidelines, and strong colours for the subcategories that we elaborated from our analysis, to highlight the obscurity of the platform’s Community Standards. To enable further use of our research materials, the links to all reports of content takedowns that we found from 2015 to 2017 are placed on our website www.facebookcensorship.wordpress.com.

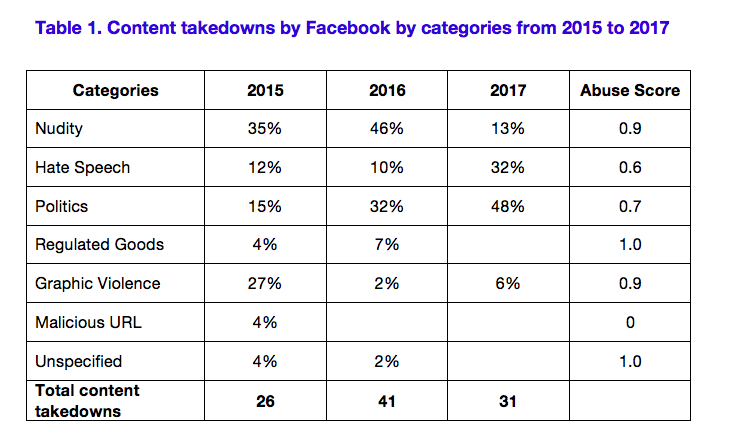

We use a scoring system called ‘abuse score’ to find out how much content moderation was abused or misapplied on Facebook. To compute the abuse score for each category of the data gathered, we used the ratio Z1/Z2, where Z1 is the number of content takedowns in the category that amount to censorship and Z2 is the total number of content takedowns in the category.
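As an illustration only, the abuse score could be computed along the following lines. This is a minimal sketch, not the tooling used in the research: the function name `abuse_scores`, the record layout, and the sample records are hypothetical placeholders, and deciding whether a takedown counts as censorship remains a manual judgment that is simply passed in as a flag.

```python
from collections import Counter


def abuse_scores(takedowns):
    """Return the abuse score Z1/Z2 for each category.

    takedowns: iterable of (category, is_censorship) pairs, where
    is_censorship is True if the takedown was judged to be censorship.
    Z1 = takedowns in the category judged to be censorship
    Z2 = all takedowns in the category
    """
    totals = Counter()    # Z2 per category
    censored = Counter()  # Z1 per category
    for category, is_censorship in takedowns:
        totals[category] += 1
        if is_censorship:
            censored[category] += 1
    return {category: censored[category] / totals[category] for category in totals}


# Hypothetical placeholder records, NOT the data collected in this research.
sample = [
    ("nudity", True),
    ("nudity", True),
    ("nudity", False),
    ("hate speech", True),
    ("hate speech", False),
    ("malicious URLs", False),
]

scores = abuse_scores(sample)
for category, score in scores.items():
    print(f"{category}: {score:.2f}")
```

A score close to 1 means that most takedowns in a category were judged to be censorship, while a score of 0 means that none were.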

One difficulty in our research was that, because Facebook lacks transparency about its censorship mechanisms, the categories bled into one another, which complicated the categorisation process.

Our findings and discussion

This research was conducted to re-examine the common assumption that social networks in general, and Facebook in particular, are forums for free speech and public discourse. By analysing over 90 examples of Facebook’s content takedowns and putting them together in a word cloud, we see the following implications of Facebook’s content censorship.

Word cloud specifying the content categories taken down by Facebook. The numbers next to the categories indicate how many pieces of content were censored in those categories. The unspecified category includes content that does not fit any topic in Facebook’s Community Standards.

Firstly, this content control brings some social good such as removing malicious URLs, fake news, terrorist activities, hate speech, and banning white supremacist users. Scholars also acknowledge the role of social networks in protecting users from phishing attacks (Jackson 2011) and maintaining some level of respect and decency on their platforms (Zimmer 2011, Dencik 2014, Heins 2013-2014).

However, the most prominent finding in our research is that Facebook tends to play it safe and thereby over-censors, especially nudity and graphic violence. From Table 1, we can see a significant decline in disputable removals of nudity content in 2017, which probably reflects changes in the company’s censorship policy following public criticism over the removal of the “Napalm Girl” photo in late 2016. However, Facebook’s censorship of nude art photographs, breast cancer advertisements, and Black Lives Matter content in 2017 shows that the company still struggles to make the right calls on nudity and hate speech. According to LaRue (cited in Chander 2016), when in doubt, intermediary platforms such as Facebook are inclined to remove suspected unlawful content to avoid the risk of being held financially or even criminally liable.

Furthermore, Facebook even proactively censors politically sensitive content. For example, videos of a Tibetan monk’s self-immolation were taken down with an explanation from Facebook that they violated the community standards on graphic violence. However, by deleting the videos, Facebook blocked one of the discussions of protests against China’s invasion of Tibet, and it is no secret how much Zuckerberg wants to reintroduce his social network website into China (Isaac 2016). In complying with governments’ requests, Facebook is helping to normalise political censorship and diminish an open and free forum for discourse. It is clear that the cherished principles of free speech, which Facebook has claimed to protect on multiple occasions (Ingram & Volz 2017, Dewey 2016, Rappler 2015), vanish when they do not align with business interests. Jackson (2011) warned us that online censorship could translate into offline harassment or intimidation; therefore, the cooperation between Facebook and governments should be taken more seriously.

Another important finding is that Facebook’s censorship rules and their execution show that the company sees itself as the public arbiter of cultural values. This is especially conspicuous in its removal of nudity, sexuality, and religion-related content, e.g. nude art and criticism of radical Islam. Zuckerberg (2017) acknowledged the limitations of this practice, giving three reasons: “cultural norms are shifting, cultures are different around the world, and people are sensitive to different things”, and promised to build a system in which users can determine their own cultural norms. This is a fair reflection and a positive change that will hopefully enhance freedom of expression on the social network.

Nevertheless, our abuse scores show that Facebook’s censorship of disputable content on nudity, hate speech, politics, and graphic violence has obscured or erased the evidence that a certain artifact (sometimes a valuable and newsworthy one), opinion, debate, or conflict ever existed. This is to say that its censorship has a chilling effect on freedom of speech despite the company’s claims to advocate for free speech. Its content censorship, whether due to users’ appeals or to market or government pressure, is problematic as it “shapes and mediates our relationship with the world around us and, over time, we come to perceive the world through the lenses that our artifacts create” (Verbeek, cited in Yeung 2017, p.129).

Conclusion

The exploration of censored posts has led to the conclusion that on many occasions, Facebook censors more than is necessary. Although Facebook took down some content that is detrimental to its users and the platform itself, the removal of disputable content on nudity, hate speech, politics, and graphic violence, which is highlighted in our findings, shows that Facebook can distort our perception of the world we live in.

To bring clarity to this issue, Facebook should release the internal documents used to train and guide its moderation staff, as well as the parameters set for its algorithms. Without transparency, there will always be cases of parties being victimized. In our research, we devised more inclusive subcategories so that users know what was kept from them. This could be an approach for Facebook to make its censorship more transparent.

Recently, Zuckerberg (2017) announced that artificial intelligence is being developed to assist in judging content and to allow Facebook users to set their own cultural standards. Hence, we might see a more inclusive social network site. However, we would suggest a mechanism through which users can give feedback on social networks’ censorship decisions, or a form of community moderation that is based on a more agonistic ethos. That said, it would be interesting for further research to examine content censorship on other social media networks, e.g. Twitter and Instagram, to gain a more comprehensive understanding of social networks’ censorship.

(Word Count: 2197)

P.S.: If you are interested in specific details of the content takedowns or need this resource for your own research, please visit our website www.facebookcensorship.wordpress.com

References:

Chander, A. (2016) ‘Internet Intermediaries as Platforms for Expression and Innovation’, Centre for International Governance Innovation. Available at: https://www.cigionline.org/publications/internet-intermediaries-platforms-expression-and-innovation (Accessed: 19 October 2017).

Crawford, K. and Gillespie, T. (2016) ‘What is a flag for? Social media reporting tools and the vocabulary of complaint’, New Media & Society, 18(3), pp. 410–428. doi: 10.1177/1461444814543163.

Dencik, L. (2014) ‘Why Facebook Censorship Matters – Journalism, Media and Cultural Studies’, 13 January. Available at: http://www.jomec.co.uk/blog/why-facebook-censorship-matters/ (Accessed: 6 October 2017).

Dewey, C. (2016) ‘The big myth Facebook needs everyone to believe’, The Washington Post, 28 January. Available at: https://www.washingtonpost.com/news/the-intersect/wp/2016/01/28/the-big-myth-facebook-needs-everyone-to-believe/ (Accessed: 19 October 2017).

Diamond, L. (2010) ‘Liberation Technology’, Journal of Democracy, 21(3), pp. 69–83. doi: 10.1353/jod.0.0190.

Facebook (2017) Government Requests Report July 2016 – December 2016. Available at: https://govtrequests.facebook.com/ (Accessed: 8 October 2017).

Gillespie, T. (2012) ‘The dirty job of keeping Facebook clean’, Culture Digitally, 22 February. Available at: http://culturedigitally.org/2012/02/the-dirty-job-of-keeping-facebook-clean/ (Accessed: 7 October 2017).

Gillespie, T. (2015) ‘Facebook’s improved “Community Standards” still can’t resolve the central paradox – Culture Digitally’, Culture Digitally, 18 March. Available at: http://culturedigitally.org/2015/03/facebooks-improved-community-standards-still-cant-resolve-the-central-paradox/ (Accessed: 19 October 2017).

Heins, M. (2013-2014) ‘The Brave New World of Social Media Censorship’, Harvard Law Review Forum, 127, pp. 325–330.

Hopkins, N. (2017) ‘Revealed: Facebook’s internal rulebook on sex, terrorism and violence’, The Guardian, 21 May. Available at: http://www.theguardian.com/news/2017/may/21/revealed-facebook-internal-rulebook-sex-terrorism-violence (Accessed: 18 October 2017).

Ingram, D. and Volz, D. (2017) ‘Facebook fights U.S. gag order that it says chills free speech’, Reuters, 3 July. Available at: https://www.reuters.com/article/us-facebook-court/facebook-fights-u-s-gag-order-that-it-says-chills-free-speech-idUSKBN19O2IB (Accessed: 23 October 2017).

Ingram, M. (2016) ‘Here’s Why Facebook Removing That Vietnam War Photo Is So Important’, Fortune, 9 September. Available at: http://fortune.com/2016/09/09/facebook-napalm-photo-vietnam-war/ (Accessed: 5 October 2017).

Isaac, M. (2016) ‘Facebook Said to Create Censorship Tool to Get Back Into China’, The New York Times, 22 November. Available at: https://www.nytimes.com/2016/11/22/technology/facebook-censorship-tool-china.html (Accessed: 7 October 2017).

Jackson, B. F. (2014) ‘Censorship and Freedom of Expression in the Age of Facebook’, New Mexico Law Review, 44, pp. 121–168.

Onlinecensorship (2017) OnlineCensorship. Available at: https://onlinecensorship.org/ (Accessed: 8 October 2017).

Pham, M. (2017) ‘Vietnam says Facebook commits to preventing offensive content’, Reuters, 27 April. Available at: https://www.reuters.com/article/us-facebook-vietnam/vietnam-says-facebook-commits-to-preventing-offensive-content-idUSKBN17T0A0 (Accessed: 7 October 2017).

Rappler (2015) Mark Zuckerberg: Free speech forever on Facebook, Rappler. Available at: https://www.rappler.com/technology/social-media/80274-facebook-mark-zuckerberg-jesuischarlie (Accessed: 17 October 2017).

Roberts, S. T. (2017) in Content moderation. California Digital Library – University of California. Available at: https://escholarship.org/uc/item/7371c1hf#main (Accessed: 23 October 2017).

Shirky, C. (2011) ‘The Political Power of Social Media: Technology, the Public Sphere, and Political Change’, Foreign Affairs, 90(1), pp. 28–41.

Statista (2017) Facebook: global penetration by region 2017, Statista. Available at: https://www.statista.com/statistics/241552/share-of-global-population-using-facebook-by-region/ (Accessed: 8 October 2017).

Time (2016) ‘The Story Behind the “Napalm Girl” Photo Censored by Facebook’, Time, 9 September. Available at: http://time.com/4485344/napalm-girl-war-photo-facebook/ (Accessed: 7 October 2017).

Yeung, K. (2017) ‘“Hypernudge”: Big Data as a mode of regulation by design’, Information, Communication & Society, 20(1), pp. 118–136. doi: 10.1080/1369118X.2016.1186713.

Zimmer, M. (2011) ‘Open Questions Remain in Facebook Censorship Flap’, 25 April. Available at: http://www.michaelzimmer.org/2011/04/25/open-questions-remain-in-facebook-censorship-flap/ (Accessed: 6 October 2017).

Zuckerberg, M. (2017) ‘Building Global Community’, 16 February. Available at: https://www.facebook.com/notes/mark-zuckerberg/building-global-community/10154544292806634 (Accessed: 23 October 2017).

Zuckerberg, M. (2017) ‘As of this morning, the Facebook community is…’, 27 June. Available at: https://www.facebook.com/zuck/posts/10103831654565331 (Accessed: 8 October 2017).