Facebook’s new “two more big steps”: a responsible resolution or a push for more self-regulation?

Facebook has recently come under attack. Much can be written about the controversies the social media platform currently faces. Contested issues include, to name a few, tax evasion, live streaming of violence, the effects of social media addiction, privacy, and third-party websites tracking users. A particularly hot topic, however, is Facebook’s role in the 2016 US presidential race. This blog examines how Facebook responded to the tumult by introducing two new technologies to prevent future misconduct, and how the company addresses calls for regulation.

Although academics have discussed extensively how online speech might be regulated, Tarleton Gillespie stresses that these regulations need updating. Technological difficulties make it hard for authorities to penalize users who post or view online content (Kreimer 13), and most regulation predates the emergence of social media platforms (Gillespie 254). Facebook and Twitter, but also MySpace, Friendster, Napster and Google, did not yet exist when this legislation was enacted (Gillespie 259). The prevailing governance was designed for traditional information providers and does not fit our current online means of communication. Drafting suitable legislation for social media platforms is difficult, as these platforms constantly roll out updates that make it hard to define straightforward restrictions (Obar and Wildman 746). We should ask how these powerful companies accommodate speech and social behaviour, and which rights and duties come with that role (Gillespie 254). Facebook is an essential element of digital communities; its role cannot be ignored and needs to be controlled (DeNardis and Hackl 769). Debates about Internet governance have mostly explored the governance of social media, but academics are now also examining governance by social media, known as the social media challenge to Internet governance (DeNardis and Hackl 769). Social media platforms such as Facebook present themselves as accessible, unbiased and neutral entities (Gillespie 257). Yet Facebook regulates the information its users share, and it does so for numerous reasons. Offended users would otherwise leave, but the company also wants to satisfy advertisers, protect its public image, stay within the law and avoid mandatory regulation (Gillespie 255). By claiming to be a neutral host, or intermediary, the industry aims to avoid being held accountable for discriminatory, violent, provocative or fake content that its users publish.
By saying that users are responsible for what they post, Facebook denies liability (Tushnet 986). Facebook enjoys the right, but not the obligation, to intervene (Gillespie 272). Facebook should be required to provide more information and transparency about how it tries to regulate itself, and about why and how its regulatory procedures are implemented (Gillespie 273).

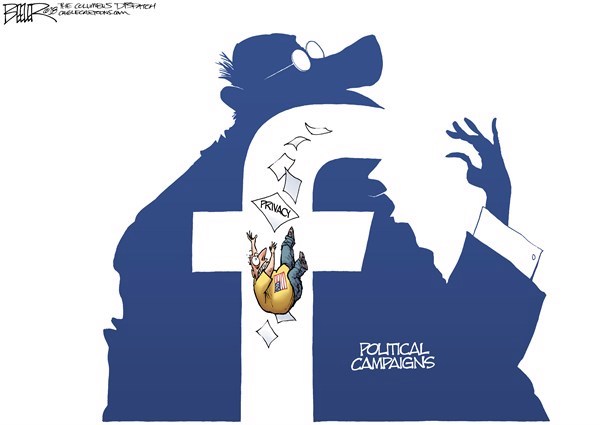

‘Faceful’ by Nate Beeler is a satirical cartoon

Two months ago, it was reported that the company Cambridge Analytica had abused the personal data of Facebook users and had influenced the elections (Lee). Although Facebook had learned of the misconduct two years earlier, it failed to take appropriate action: it demanded that all user data be deleted but did not check whether this was actually done. Cambridge Analytica advised political parties on how to tailor their campaigns precisely to users’ preferences and aversions. Initially, Facebook threatened news organizations in an attempt to prevent the story from being published. As media attention continued to rage, Facebook founder Mark Zuckerberg introduced new technologies in a Facebook post. These technologies are, as he claims, “two more big steps” to prevent wrongdoing in the future (Zuckerberg). Zuckerberg aims to curb the spread of misinformation by increasing transparency and applying self-regulation guidelines. First, Facebook will require verification from accounts that post political advertisements. Second, admins of large pages will need to be verified too. As a response to the abuse of personal information by third parties, however, these two measures are limited. It is also important to mention that experts disagree about whether Cambridge Analytica played a decisive role in the 2016 presidential election. It remains difficult to determine to what extent the detailed profiling of millions of Facebook users influenced the actual outcome. According to Shane Goldmacher, Hillary Clinton had an even more comprehensive data-driven strategy, which should have given her a significant strategic lead (Goldmacher). Another critic, Helen Margetts, is convinced that social media influence politics but argues that they cannot explain Trump or Brexit (Margetts). Instead of blaming social media, we should think about how to improve the role they play in our lives (Margetts).

For this reason, it is important to examine closely Zuckerberg’s response and his attempt to increase the transparency of political advertisements. Zuckerberg’s track record of responding sincerely and decisively is, thus far, not very positive, and his past decisions have taught us to be cautious about his motives. While Zuckerberg claims always to make decisions with the Facebook community in mind (Solis), his past actions do not bear this out. For example, the introduction of News Feed stunned users (Fowler and Esteban). In 2006, 2007 and 2008, users complained about new features that compromised their privacy by making more information public without providing settings to control it (Fowler and Esteban). Additionally, just after the election, Zuckerberg dismissed the idea that Facebook had influenced the US elections as “crazy” (Solon). Furthermore, he only responded when a whistle-blower stepped forward. But back to Facebook’s current steps. The promise is positive; however, Zuckerberg takes only half measures. Of the 376 words in his post about Facebook’s new technologies, Zuckerberg used only 202 to explain the two steps. First, advertisers who want to post political content need to be verified and confirm their identity and location. It is a good thing that the company has committed to expanding its workforce by thousands of employees to support this verification roll-out. Critics such as Farivar doubt whether Facebook’s new steps will actually recognize or intercept misinformation, because verification could be circumvented with fake IDs and fake email addresses. Furthermore, it is difficult to pinpoint what counts as political content. In the 2016 elections, most controversial content played on emotions and did not necessarily have a pronounced political character (Farivar, ‘Facebook’).
In addition, although Zuckerberg indicates that Facebook is going to require verification of admins of large pages, most ordinary pages and accounts escape this procedure. Zuckerberg may call for more transparency, but he himself is far from transparent. For example, he fails to explain the requirements for such verification, and he does not explain how “a tool that lets anyone see all of the ads a page is running” will work (Zuckerberg). At the US Senate hearing last month, Zuckerberg was ambiguous in his responses. He agreed that Facebook has a greater responsibility than the law currently requires. However, he declined to go into the details of possible legislation and avoided requests to state his full support for proposed legislative amendments. Zuckerberg claimed that Facebook is not against regulation but questioned what the right legislative framework would be. Facebook would welcome the right regulation, he said, but he did not specify what Facebook believes the right regulation is. Facebook is committed to improving the privacy of its users. And although Zuckerberg promised to apply the stricter European regulations to all users worldwide, he did not promise to apply all the rules of that legislation (Farivar, ‘CEO Says Facebook Will Impose New EU Privacy Rules “Everywhere”’). Not to mention that, two weeks after this promise, Facebook moved one and a half billion users from its Irish to its US operations, removing them from the protection of the stricter EU privacy law and thus avoiding legal liability under it (Farivar, ‘Facebook Removes 1.5 Billion Users from Protection of EU Privacy Law’). It did so by changing its terms of service, which should make us question what Zuckerberg’s promises are worth.

Since its beginning, Facebook has repeatedly apologized for abusing personal information, which shows that its self-regulation has, thus far, not been very successful. However, several steps can be taken. Firstly, Facebook could be made advertisement-free by letting users pay for the platform. This way, Facebook would no longer profit from user data, and the company’s priorities would shift from over-exploiting user data to serving its users. Secondly, instead of Facebook benefiting from our personal data, a model should be found that requires Facebook to pay for access to our data. At the very least, in my opinion, users should be able to own and delete their personal data. Thirdly, to ensure a healthy, competitive market and prevent Facebook from becoming even bigger and more powerful, a judge could rule that Facebook is a monopoly and force the company to split off Instagram, WhatsApp and Messenger. Another possibility is to introduce a checkpoint that evaluates all content before it is published, although it is questionable whether users would accept the resulting delay. Private data is too sensitive, and the risk of deliberately deceiving billions of users too great, to rely on self-regulation alone. This requires strict rules and proper supervision of how data is provided to third parties. Zuckerberg’s proposed steps are not firm enough. To restore confidence in social media, stronger legal measures need to be implemented. Facebook itself would benefit from this too.

References

Beeler, Nate. ‘Faceful’. The Columbus Dispatch, 21 Mar. 2018, http://www.dispatch.com/opinion/20180321/beeler-cartoon-faceful.

DeNardis, Laura, and Andrea M. Hackl. ‘Internet Governance by Social Media Platforms’. Telecommunications Policy, vol. 39, no. 9, 2015, pp. 761–770, doi:10.1016/j.telpol.2015.04.003.

Farivar, Cyrus. ‘CEO Says Facebook Will Impose New EU Privacy Rules “Everywhere”’. Ars Technica, 4 Apr. 2018, https://arstechnica.com/tech-policy/2018/04/ceo-says-facebook-will-impose-new-eu-privacy-rules-everywhere/.

—. ‘Facebook: If You Want to Buy a Political Ad, You Now Have to Be “Authorized”’. Ars Technica, 6 Apr. 2018, https://arstechnica.com/tech-policy/2018/04/facebook-if-you-want-to-buy-a-political-ad-you-now-have-to-be-authorized/.

—. ‘Facebook Removes 1.5 Billion Users from Protection of EU Privacy Law’. Ars Technica, 20 Apr. 2018, https://arstechnica.com/tech-policy/2018/04/facebook-removes-1-5-billion-users-from-protection-of-eu-privacy-law/.

Fowler, Geoffrey A., and Chiqui Esteban. ‘14 Years of Mark Zuckerberg Saying Sorry, Not Sorry’. Washington Post, https://www.washingtonpost.com/graphics/2018/business/facebook-zuckerberg-apologies/. Accessed 28 Apr. 2018.

Gillespie, Tarleton. ‘The Regulation of and by Platforms’. The Sage Handbook of Social Media, edited by Jean Burgess et al., Sage Publications, 2018.

Goldmacher, Shane. ‘Hillary Clinton’s “Invisible Guiding Hand”’. POLITICO Magazine, https://www.politico.com/magazine/story/2016/09/hillary-clinton-data-campaign-elan-kriegel-214215. Accessed 15 Apr. 2018.

Kreimer, Seth F. ‘Censorship by Proxy: The First Amendment, Internet Intermediaries, and the Problem of the Weakest Link’. University of Pennsylvania Law Review, vol. 155, no. 1, Nov. 2006, pp. 11–101, doi:10.2307/40041302.

Lee, Timothy B. ‘Facebook’s Cambridge Analytica Scandal, Explained [Updated]’. Ars Technica, 20 Mar. 2018, https://arstechnica.com/tech-policy/2018/03/facebooks-cambridge-analytica-scandal-explained/.

Margetts, Helen. ‘Of Course Social Media Is Transforming Politics. But It’s Not to Blame for Brexit and Trump’. Oxford Internet Institute, 9 Jan. 2017, https://www.oii.ox.ac.uk/blog/of-course-social-media-is-transforming-politics-but-its-not-to-blame-for-brexit-and-trump/.

Obar, Jonathan A., and Steve Wildman. ‘Social Media Definition and the Governance Challenge: An Introduction to the Special Issue’. Telecommunications Policy, vol. 39, no. 9, 2015, pp. 745–750, doi:10.1016/j.telpol.2015.07.014.

Solis, Brian. ‘In Mark Zuckerberg We Trust? The State and Future of Facebook, User Data, Cambridge Analytica, Fake News, Elections, Russia and You’. ReadWrite, 5 Apr. 2018, https://readwrite.com/2018/04/05/in-mark-zuckerberg-we-trust-the-state-and-future-of-facebook/.

Solon, Olivia. ‘Facebook’s Fake News: Mark Zuckerberg Rejects “Crazy Idea” That It Swayed Voters’. The Guardian, 11 Nov. 2016, http://www.theguardian.com/technology/2016/nov/10/facebook-fake-news-us-election-mark-zuckerberg-donald-trump.

Tushnet, Rebecca. ‘Power without Responsibility: Intermediaries and the First Amendment’. George Washington Law Review, vol. 76, no. 4, June 2008, pp. 986–1016.

Zuckerberg, Mark. ‘Two More Big Steps to Prevent Interference in Elections’. Facebook, 6 Apr. 2018, https://www.facebook.com/zuck/posts/10104784125525891.