Researching Wikipedia: Activity Streams & Document Network Location

While I was working on adding information to an existing Wikipedia entry, I noticed that there is a well-maintained ‘History’ tab. In this tab one finds all the different ‘revisions’ of a document. With this history available, one can look not only at the most recent changes but also at how the information contained in the document has expanded or shrunk over time.

One obvious use is to perform a so-called ‘rollback’, which reinstates an earlier version as the current version. This is particularly helpful in the case of spam attacks. One could also consider the history of a document as an activity stream, which in turn offers interesting research possibilities.

Activity Streams

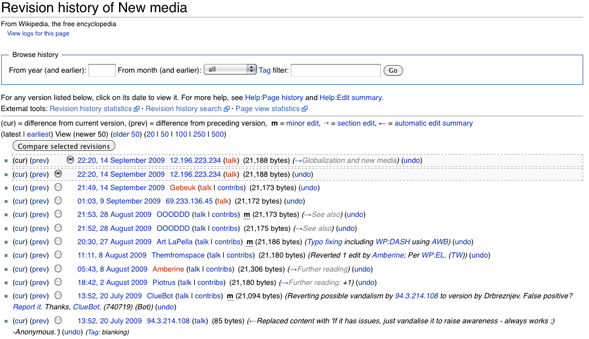

Let’s consider as an example the history of the new media entry on Wikipedia:

Note that what is shown here is only a small part of the total history of this document. If someone were to write an automated program to extract the relevant metadata from this activity stream, we could, among other things, find out which topics are continuously being debated. Since we can compare earlier and later versions of a page, we could see which sections are often altered significantly and manually map those onto topics that need more research to settle the argument. This could prove a very effective way to underline the value of a research proposal to the professional field!
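
To make the idea of comparing earlier and later versions a little more concrete, here is a minimal sketch in Python. It assumes the revisions are already available as a list of wikitext strings, oldest first (in practice they could be fetched through the MediaWiki API, which is not shown). It splits each revision into sections by its `== Heading ==` lines and counts how often each section changed ‘significantly’ between consecutive revisions. The function names and the 0.8 similarity threshold are my own hypothetical choices, not an established method.

```python
import re
from collections import Counter
from difflib import SequenceMatcher

# Matches wikitext section headings such as "== History ==" or "=== Usage ===".
HEADING = re.compile(r"^(=+)[ \t]*(.*?)[ \t]*\1[ \t]*$", re.MULTILINE)


def split_sections(wikitext):
    """Map each section title to its text; text before the first heading is 'lead'.

    Duplicate titles overwrite each other, which is acceptable for a sketch.
    """
    sections = {}
    title, pos = "lead", 0
    for match in HEADING.finditer(wikitext):
        sections[title] = wikitext[pos:match.start()].strip()
        title, pos = match.group(2), match.end()
    sections[title] = wikitext[pos:].strip()
    return sections


def count_significant_changes(revisions, threshold=0.8):
    """Count, per section, how often consecutive revisions changed it substantially.

    `revisions` is a list of wikitext strings, oldest first. A change counts as
    significant when the similarity ratio of the old and new section text falls
    below `threshold` (a hypothetical, tunable cut-off).
    """
    counts = Counter()
    for old, new in zip(revisions, revisions[1:]):
        old_sections, new_sections = split_sections(old), split_sections(new)
        for title in old_sections.keys() | new_sections.keys():
            before = old_sections.get(title, "")
            after = new_sections.get(title, "")
            if SequenceMatcher(None, before, after).ratio() < threshold:
                counts[title] += 1
    return counts


# Toy revision history (made-up text, not real Wikipedia data).
revisions = [
    "Intro text.\n== History ==\nOld history.\n== Theory ==\nSome theory.",
    "Intro text.\n== History ==\nOld history.\n== Theory ==\nA completely rewritten theory section.",
    "Intro text.\n== History ==\nOld history.\n== Theory ==\nYet another contested view.",
]
print(count_significant_changes(revisions).most_common())
```

In this toy history only the ‘Theory’ section keeps being rewritten, so it would be the candidate for the manual mapping onto research topics described above.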

Another analysis that comes to mind is tracking the activity of multiple Wikipedia users and relating it to the number of ‘clashes’ these users encounter. This could help track down users who are undermining the accuracy of Wikipedia, but it could also prove a valid research subject in its own right, as a starting point for analyzing the role of ‘power’ in Wikipedia’s control mechanism. Does the world view of power users get through more often? And if so, what remains of the argument that “given enough eyeballs, all bugs are shallow”?[1]
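
One way to operationalize a ‘clash’, and this is an assumption on my part rather than a settled definition, is to count reverts: when a revision restores the exact text of an earlier, non-adjacent revision, every edit in between has been undone. The Python sketch below detects such identity reverts by hashing the page text and tallies, per user, how often their edits were undone by someone else. The `Revision` record and `count_clashes` function are hypothetical illustrations.

```python
import hashlib
from collections import Counter, namedtuple

# Hypothetical minimal revision record: who saved it and the full page text.
Revision = namedtuple("Revision", ["user", "text"])


def count_clashes(revisions):
    """Treat identity reverts as 'clashes' between users.

    A revision whose text hash equals that of an earlier, non-adjacent revision
    restores that earlier state, so every revision in between is counted as
    reverted. `revisions` must be ordered oldest first.
    """
    last_seen = {}         # text hash -> index of the latest revision with that hash
    reverted = Counter()   # user -> how many of their edits someone else undid
    clashes = Counter()    # (reverting user, reverted user) -> count
    for i, rev in enumerate(revisions):
        digest = hashlib.sha1(rev.text.encode("utf-8")).hexdigest()
        j = last_seen.get(digest)
        if j is not None and j < i - 1:
            for undone in revisions[j + 1:i]:
                if undone.user != rev.user:  # self-reverts are not clashes
                    reverted[undone.user] += 1
                    clashes[(rev.user, undone.user)] += 1
        last_seen[digest] = i
    return reverted, clashes


# Toy edit war between two users (made-up data).
history = [
    Revision("Alice", "v1"),
    Revision("Bob", "v2"),
    Revision("Alice", "v1"),  # Alice undoes Bob
    Revision("Bob", "v2"),    # Bob undoes Alice
    Revision("Carol", "v3"),
]
reverted, clashes = count_clashes(history)
print(reverted, clashes)
```

Aggregated over many pages, the `clashes` pairs form a who-reverts-whom network, which could be one starting point for asking whether power users prevail more often.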

Document Network Location

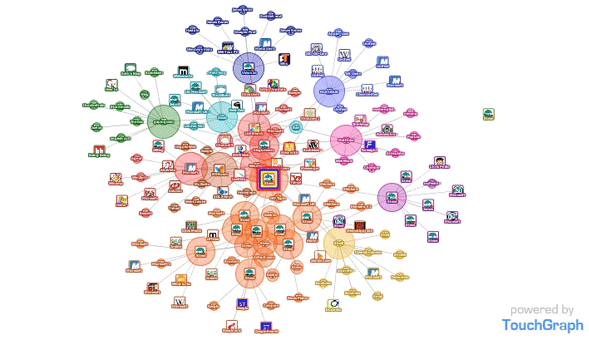

Another possibility for interesting research is looking at the combination of activity streams and document network location. Though not a new technique, I do not believe there has been any serious effort in contextually mapping Wikipedia entries. What I am proposing is that we use a specifically tailored webspider to index all of Wikipedia. The next step would be to automatically analyze all internal linking so we may see what documents are closely interlinked and which are not. This will not only give a good understanding of which the ‘core themes’ are that are discussed on Wikipedia but also what ‘outer regions’ may be considered fraud sensitive.

The resulting visualization would look similar to this:

If we add the activity streams to the equation, we could narrow down the topics of interest even further. Which topics are altered often (in other words, what are the ‘hottest’ themes on Wikipedia right now?) and which are left almost entirely untouched? Here too, such outcomes would provide fertile ground for further research. One could, for example, look at the role political standpoints play in these ‘discussion topics’ by adding ethnographic research to the mix.
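
Combining the link structure with edit activity could be sketched as follows in Python. It assumes the spider has already produced a mapping from each page to the set of pages it links to, and that edit counts per page were extracted from the histories; both inputs here are made-up toy data. To separate ‘core themes’ from ‘outer regions’ I swap in a standard technique, k-core decomposition, where a low core number marks a loosely connected page. The `core_threshold=2` cut-off and all function names are hypothetical choices.

```python
def undirected(links):
    """Turn {page: set of linked pages} into a symmetric adjacency map."""
    adj = {}
    for page, targets in links.items():
        adj.setdefault(page, set())
        for target in targets:
            if target != page:  # ignore self-links
                adj[page].add(target)
                adj.setdefault(target, set()).add(page)
    return adj


def core_numbers(adj):
    """k-core decomposition: repeatedly remove the node of lowest remaining degree."""
    degree = {node: len(nbrs) for node, nbrs in adj.items()}
    remaining = set(adj)
    core, k = {}, 0
    while remaining:
        node = min(remaining, key=lambda n: degree[n])
        k = max(k, degree[node])
        core[node] = k
        remaining.remove(node)
        for nbr in adj[node]:
            if nbr in remaining:
                degree[nbr] -= 1
    return core


def hot_topics(links, edit_counts, core_threshold=2):
    """Rank pages by edit count and label them 'core' or 'periphery' by core number."""
    core = core_numbers(undirected(links))
    ranked = sorted(core, key=lambda p: (-edit_counts.get(p, 0), p))
    return [
        (p, edit_counts.get(p, 0), "core" if core[p] >= core_threshold else "periphery")
        for p in ranked
    ]


# Toy link graph and edit counts (made-up data, not crawled from Wikipedia).
links = {
    "New media": {"Internet", "Digital media", "Web 2.0"},
    "Internet": {"New media", "Digital media", "Web 2.0"},
    "Digital media": {"New media", "Internet"},
    "Web 2.0": {"New media", "Internet"},
    "Obscure stub": {"New media"},
}
edit_counts = {"New media": 40, "Internet": 25, "Obscure stub": 3}
for row in hot_topics(links, edit_counts):
    print(row)
```

A page that is both heavily edited and part of the core would be a ‘hot’ theme; a rarely edited page on the periphery would belong to the untouched outer regions.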

More to explore

Of course there are many more possibilities, as others have also outlined on the Masters of Media blog. Using network theory is but one research option, albeit an interesting one that could quickly give more insight into the dynamics of Wikipedia in ways that would be impossible with human labor alone. I strongly feel that Wikipedia is a research object requiring multidisciplinary research.

References

[1] The Cathedral & the Bazaar, Eric S. Raymond, O’Reilly Media, February 2001.