Tesla’s Autopilot Function in the Light of Liability and Assistance

One of the newest developments, which not only the multinational car companies but also companies like Google and Apple are believed to be working on, is the self-driving car. Tesla has recently introduced a car with an Autopilot function. When it is enabled, drivers are assisted in keeping their lane and speed. This development is revolutionary not least because it has sparked a major debate about safety and the evolution of cyborg culture. It raises questions such as: whose responsibility is it when an accident occurs? In other words, how can software (or its flaws) be responsible for human lives? On 30 June 2016, Tesla published a blog post announcing that the driver of a Tesla car had died in an accident that happened while the Autopilot function was turned on (A Tragic Loss). Another interesting issue is how this development is part of a new trend in which software takes over a wide range of diverse and 'intelligent' human functions. These two issues are closely connected, which makes it important to discuss both. Together they raise the question of how the introduction of the self-driving car, and thus a technological extension of human beings, leads to a debate about safety and liability.

Autonomous or not?

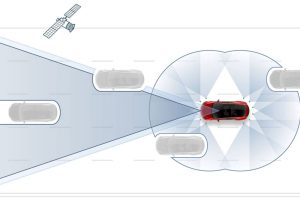

It is important to examine whether, and to what extent, the Autopilot function can be seen as a form of software that takes over human functions. In the blog post announcing the fatal accident, Tesla makes a few interesting statements about the car and its degree of autonomy. One of the most interesting is that the Autopilot function is still a very new piece of software and should be seen only as an assist feature (A Tragic Loss). It is therefore important that the driver stays alert and maintains control and responsibility. That Tesla published this statement is in line with what Eve Herold claims in her book Beyond Human. She states that "the more advanced the personal robot becomes, the greater the danger that users will come to over-rely on them" (Herold 229). In an article by Jordan Golson, a Volvo engineer also criticizes Tesla for introducing the Autopilot function too quickly, because it operates so smoothly that users may come to think it can handle any situation (Golson). This is probably also why Tesla decided to update the Autopilot software with an advanced warning system for the driver. When Autopilot is enabled and the driver does not keep their hands on the wheel, and is thus not paying attention or ready to take over, audible and visual warnings are activated. If the driver ignores these warnings, the car needs to be parked before the driver can re-enable the function (Lee). This makes clear that Tesla's Autopilot is nowhere near the autonomous status it is often credited with.

Liability

Car accidents have occurred ever since the first car was invented. According to Claes Tingvall, the automobile was first viewed as a dangerous form of transportation. Later on, it was regarded as "controllable by sensible and responsible individuals" (Tingvall 489). However, fatal and other serious accidents kept occurring. The person in control of the car was always responsible, and thus liable, for accidents that were 'their' fault. The introduction of self-driving cars is leading to a fierce debate about legal liability. What if software is in control instead of human beings? This will not eliminate accidents, since software will always contain some bugs, but it will lead to a paradigm shift in how liability is understood. What if it is 'the software's mistake'? The question, therefore, is not whether these autonomous machines will make mistakes, but what the consequences are for liability. Autonomous machines that function within society, like self-driving cars, could be granted legal personhood. This would make it possible to hold the robot legally liable and to insure it. The robot would be insured by the company that builds it (Barfield 26). Even with this solution, liability will remain a contested issue. It will be hard to decide who or what is at fault when several actors are involved. Consider, for instance, an accident involving both a self-driving car and a human-driven car. Can software respond to every kind of human action? And how much time does the 'driver' of a self-driving car have to react?

All in all, these more or less autonomous functions raise important questions for civilian life in general. More advanced software that intervenes in human life and extends human functions is expected to appear in the near future. However, it is dangerous to rely fully on these functions, at least for now. Liability also needs to be addressed. Being able to insure robots, and thus make them liable, could be a reasonable approach to self-acting software extensions.

Bibliography

“A Tragic Loss.” Tesla. 30 June 2016. Web. 14 Sept. 2016. <https://www.tesla.com/nl_NL/blog/tragic-loss?redirect=no>.

Barfield, Woodrow. Cyber-Humans: Our Future with Machines. Cham: Springer, 2015. Print.

Golson, Jordan. “Tesla Driver Killed in Crash with Autopilot Active, NHTSA Investigating.” The Verge. 30 June 2016. Web. 14 Sept. 2016. <http://www.theverge.com/2016/6/30/12072408/tesla-autopilot-car-crash-death-autonomous-model-s>.

Herold, Eve. Beyond Human: How Cutting-Edge Science Is Extending Our Lives. New York: St. Martin’s Press, 2016. Print.

Lee, Dave. “Tesla Autopilot Update Seeks Better Safety.” BBC News. 2016. Web. 14 Sept. 2016. <http://www.bbc.com/news/technology-37335212>.

Tingvall, Claes. “The History of Traffic Safety: Describing 100 Years.” Technology and Culture 56.2 (2015): 489–492.