Time and Algorithmic Music Composition

Information and communications technologies proliferate today, and with them comes the continuous generation, processing, recording, circulation, and visualization of ever more data. The societies of control theorized by Deleuze and others are said to exert power by acting as universal modulators that grant or restrict access to this information (Deleuze, 1997), regulating its complexity at multiple scales and in multiple ways to make it more manageable, and thereby actionable. Algorithmic music composition is a technology that expresses this logic of control particularly well, since it organizes or generates the complexity of noise and sound. Whether algorithmic compositions are created predominantly by human artists, by machinic computation, or by some undecidable mixture of the two, they confer an unprecedented scope of power on the creative tinkering that produces patterns of aesthetic novelty, a key and widely accepted element of genuine artistic creativity (Eno, 2006). The introduction of the logic of control into musical projects such as Google's Magenta, a research project that began within the Google Brain team, or Brian Eno's popular, algorithmically assisted experimental albums exploring generative music, creates new kinds of affordances for artistic labor and intelligence, human and non-human alike. To begin investigating what these affordances might be, and what they might signify, we narrow our focus to time and to the ways in which music, interface, and computational processes intersect. Technical operations such as visualizing time in interfaces, or providing finer temporal granularity for sonic analysis and modulation, point toward emerging human and non-human relationships to time, perhaps even a new concept of time, as mediated by computationally generative music technologies.

Methodologies

Our research therefore offers a critical examination of how time has come to be digitally modeled and experienced through algorithmic music technologies, in the hope that these changes illuminate key features of aesthetic production in societies of control. We primarily use conceptual analysis, interface analysis, and design theory to investigate this problem. Conceptually, this research aims to frame algorithmic composition both in its history and in its current academic relevance. We examine the works of prominent figures in the field and their methods of constructing algorithmic compositions, and we apply those insights to the analysis of L-systems, MIDI controls, and interfaces.

We ask: How is techno-aesthetic composition accustoming us to new models for thinking about time? How do these new models transform the creative process itself, for human and machine artists as well as for audiences? How is time related to the incorporation of contingency into predetermined parameters, a crucial component of creative and machine learning, and to the synthesis of aesthetic novelty?

Relevance

This idea of control over granular time, as visualized by new algorithmic compositional interfaces, suggests an evolution, or better a co-evolution, of both algorithmic and human grammars. On the one hand, audiences develop an appreciation for, and more importantly grow accustomed to, an inhuman computational aesthetic. Indeed, algorithmic music is no longer the mere reproduction of an artistic effort; “it has become an integral part of a multi-aesthetic environment that extends our perception into the realm of immersion” (Essl, 124). On the other hand, user experience seems to be hampered by some of its earlier features: the notion of control no longer keeps pace with the times. The human and the machine interact continuously, and this interaction is reinforced in a real-time conversation between the two entities. As the interactive artist David Rokeby suggests, “interaction transcends control…if performers feel they are in control of an environment, then they cannot be truly interacting, since control is not an interaction” (Lippe, 2).

Human artists find their available scope of musical time greatly enlarged, which places more informational complexity at their fingertips. But the computational prowess of the algorithms that human users interact with can impose time constraints that differ from those of other forms of aesthetic production. There may be less time to internalize or reflect on the musical trends or processes the algorithms are producing, and a stronger incentive for the artist to find their bearings quickly amid the generated music. The situation resembles the “conversation with a clever friend,” in which one has to think and respond more quickly to external stimuli, and it leads to transformations in users' grammars of aesthetic organization. As Moon (1997) suggests, the co-evolution of human and machinic relations to time shifts the historical parameters of music making through the “potential changes in the behaviors of computers and performers in their response to each other.” Rokeby draws our attention to “interactivity” as a step beyond mere control: a mutual control, or co-controlling, that raises questions of authorial intent and origin.

Google’s Magenta project points toward scenarios of art-making in which humans are increasingly restricted to mathematical, design, and computer-science knowledge (for example, coders contributing code on GitHub), while the AI gains more and more autonomy in generating aesthetic novelty that may come to be appreciated as art. This possible divergence of the co-evolving series opens up the prospect of machine-made art largely out of human hands, as a direct result of the transformations of time that these technologies afford.

Yet the notion of control remains necessary to define the degree of authorship and intentionality behind an artistic product. While the granularity and compression of time within an algorithmic compositional interface may open previously unimaginable doors for the user, who is now better able to mold compositional time, it opens the same doors for the algorithm, which becomes better equipped to diversify its outputs. The question of authorship therefore remains open: who is the artist, the human or the algorithm?

Context

As Stelios Manousakis states in Musical L-Systems (2006), “[i]n the past few decades, increasing processing speed allowed experimentation with complex dynamic systems – iterated function systems, fractals, chaos and biological metaphors like cellular automata, neural networks and evolutionary algorithms have entered the vocabulary of the electronic musician” (Manousakis, 16). In contrast to Chomsky grammars, which apply their production rules sequentially, the algorithmic composition vocabulary that Manousakis refers to is associated with the grammars of L-systems (Lindenmayer systems), which apply their rules in parallel. L-systems were proposed by the biologist Aristid Lindenmayer in 1968, initially to provide a mathematical “foundation for an axiomatic theory of biological development” (Ochoa, 2). By the late 20th century, however, L-systems had branched out of biology and into computer science. Today, L-systems are widely used in computer graphics, particularly for modeling plants, as well as by a large part of the algorithmic composition community.

Przemyslaw Prusinkiewicz, a Polish computer scientist, was the first to expand upon the notion of using L-systems in algorithmic composition. Prusinkiewicz argued that “the proposed musical interpretation of L-systems is closely related to their graphic interpretation,” and that these L-systems are “very powerful in spatial representations…The act of designing this space and its resolution – or perceptual grid – is in itself a major part of the compositional procedure” (Manousakis, 35). The composer Gary Lee Nelson adopted Prusinkiewicz's theories but went one step further, stating that “[n]ote duration is extracted by computing the spatial distance between the vertices that represent the beginning of lines realised as notes” (Manousakis, 36).
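
Nelson's rule of extracting note duration from the distance between vertices can be illustrated with a small turtle interpretation. This is a minimal sketch under our own assumptions, not Nelson's actual software: `F` moves the turtle forward one unit, `+` and `-` turn it by 90 degrees, each straight line becomes one note, and the note's duration (in arbitrary beats) is the Euclidean distance between the line's start and end vertices. The function name `lines_to_notes` is hypothetical.

```python
import math

def lines_to_notes(symbols: str, angle_deg: float = 90.0, step: float = 1.0):
    """Hypothetical turtle reading of an L-system string.

    'F' moves forward by `step`; '+' / '-' turn left / right by `angle_deg`.
    A turn ends the current straight line, and every line becomes a note
    whose duration is the distance between its start and end vertices.
    Returns a list of (start_vertex, duration) pairs.
    """
    x, y, heading = 0.0, 0.0, 0.0
    start = (x, y)
    notes = []

    def close_line():
        nonlocal start
        duration = math.dist(start, (x, y))  # spatial distance -> note length
        if duration > 0:                     # consecutive turns produce no note
            notes.append((start, duration))
        start = (x, y)

    for symbol in symbols:
        if symbol == "F":
            x += step * math.cos(math.radians(heading))
            y += step * math.sin(math.radians(heading))
        elif symbol in "+-":
            close_line()
            heading += angle_deg if symbol == "+" else -angle_deg
        # any other symbol is ignored in this sketch
    close_line()  # the last line ends with the string
    return notes

for vertex, beats in lines_to_notes("FF+F-FFF"):
    print(vertex, round(beats, 3))
```

Under these assumptions the string `FF+F-FFF` draws three lines and therefore yields three notes lasting 2, 1 and 3 beats; changing the turning angle or the step size changes the rhythm without changing the string.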

Manousakis builds on this notion by applying Nelson’s emphasis on time: “L-systems operate in discrete time steps. Each derivation step consists of a, conceptually parallel, application of the production rules which transforms the string to a new one. The fundamental concept of the algorithm is that of development over time” (Manousakis, 20-21). Through Nelson’s expansion of Prusinkiewicz’s theory, therefore, time and spatial mapping in L-systems become inextricably linked. As shown in Figure 1 below, this linkage is made possible through the variable of the ‘composer’ or ‘musical interpreter’.

Figure 1 Prusinkiewicz’s L-system interpreter concept.
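
The “conceptually parallel” derivation step that Manousakis describes can be sketched in a few lines. The example below is a minimal deterministic, context-free L-system (a D0L-system) using Lindenmayer's well-known algae model (`A → AB`, `B → A`); the helper name `derive` is ours, and reading each derivation step as one discrete time step of a composition is an interpretive assumption rather than Manousakis's code.

```python
from __future__ import annotations

def derive(axiom: str, rules: dict[str, str], steps: int) -> list[str]:
    """Return the string produced at each derivation step of a D0L-system.

    Every symbol of the current string is rewritten at the same time
    (the parallel application of production rules); symbols without
    a rule are copied unchanged.
    """
    history = [axiom]
    current = axiom
    for _ in range(steps):
        current = "".join(rules.get(symbol, symbol) for symbol in current)
        history.append(current)
    return history

# Lindenmayer's algae model: A -> AB, B -> A
for step, word in enumerate(derive("A", {"A": "AB", "B": "A"}, 5)):
    print(step, word)
```

Because every symbol is rewritten at once, the string grows from `A` to `AB`, `ABA`, `ABAAB` and so on, with lengths following the Fibonacci sequence; a musical interpreter could then map each generation, or each symbol within it, onto a time level of the piece.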

Drawing on the time-scale formalisation proposed by Roads (2001) (see Figure 2), Manousakis summarises this relationship between time, spatial mapping, and the composer: “[i]t is up to the composer to define the musical parameter space and the grids of the respective vectors and to program L-systems that generate trajectories, musical structures and control mechanisms in all the time levels of a composition – macro, meso, sound object, micro, sample level” (Manousakis, 17). The algorithm then translates the output string through interpretation rules in order to generate the music as MIDI output (Manousakis, 21). In short, the core concepts of musical L-systems include a distinctive interaction with, and dependency on, time segmentation, an alliance with spatial mapping, and an ambivalent relationship with MIDI interfaces.
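
The final stage, in which interpretation rules turn the output string into MIDI, can be illustrated with raw MIDI 1.0 channel messages: a Note On status byte is `0x90` plus the channel number and a Note Off is `0x80` plus the channel number, each followed by two 7-bit data bytes (note number and velocity). The symbol mapping below (`F` plays a note, `+` and `-` shift the pitch by a semitone) and the function name `to_midi_events` are hypothetical, chosen only to show how a string becomes timed note events; timing is expressed in ticks at an assumed resolution of 480 ticks per step.

```python
def to_midi_events(symbols: str, start_pitch: int = 60, velocity: int = 100,
                   ticks_per_step: int = 480, channel: int = 0):
    """Translate an L-system string into timed raw MIDI messages.

    Hypothetical interpretation rules (assumptions, not Manousakis's):
      'F' -> play the current pitch for one time step
      '+' -> raise the current pitch by one semitone
      '-' -> lower the current pitch by one semitone
    Other symbols are ignored. Returns a list of (tick, message_bytes).
    """
    events = []
    pitch, tick = start_pitch, 0
    for symbol in symbols:
        if symbol == "+":
            pitch = min(pitch + 1, 127)   # MIDI note numbers stop at 127
        elif symbol == "-":
            pitch = max(pitch - 1, 0)     # ...and start at 0
        elif symbol == "F":
            events.append((tick, bytes([0x90 | channel, pitch, velocity])))  # Note On
            tick += ticks_per_step
            events.append((tick, bytes([0x80 | channel, pitch, 0])))         # Note Off
    return events

for tick, message in to_midi_events("F+F--F"):
    print(tick, message.hex(" "))
```

In this sketch, time segmentation is fixed by `ticks_per_step`; a composer working at several time levels would replace that constant with durations derived from the string or its spatial interpretation.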

The argument set forth by Prusinkiewicz and Nelson implies that the composer has full autonomy over the mapping and the duration of each segment within the piece, as well as over the piece as a whole. Two opposing responses have been made to this notion: 1) the composer's autonomy over wavelength and time segmentation entails a Bergsonian revolution regarding free will and the definition of consciousness; or 2) since the final output passes through the MIDI interface (see Figure 1), which imposes numerous limitations on the composer, the composer does not have “true” autonomy over the final production of the piece.

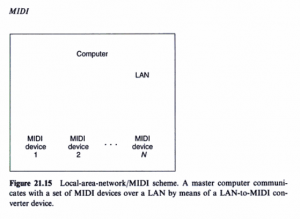

Figure 2 “Local-area-network/MIDI scheme. A master computer communicates with a set of MIDI devices over a LAN by means of a LAN-to-MIDI converter device.” (Roads, 1013)

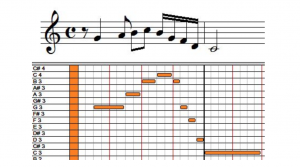

“You can record directly in MIDI the performance of a musician on a MIDI keyboard, using the MIDI programming interface of Windows and Mac and store it into your data structures. You can also create a graphic interface like the standard ‘piano roll’ editor, that represents the notes as horizontal bars that the user can add, resize, move, delete, copy/paste, transpose,… Combined with notation, it looks like this:” (Vandenneucker, 2012)

Figure 3 MIDI programming interface showing the visual comparison between notation and wavelength segments in granular time
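
The piano-roll representation described in the quotation above can be modeled as a list of notes, each a horizontal bar with a pitch (its vertical position), an onset and a duration (its horizontal extent). The sketch below is a hypothetical minimal model, not Vandenneucker's code; the class and method names are ours, time is counted in ticks, and transposed pitches are clamped to the MIDI range 0–127.

```python
from __future__ import annotations
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Note:
    pitch: int          # MIDI note number, 0-127 (vertical position of the bar)
    start: int          # onset in ticks (left edge of the bar)
    duration: int       # length in ticks (width of the bar)
    velocity: int = 100

class PianoRoll:
    """Hypothetical piano-roll model: notes stored as horizontal bars."""

    def __init__(self) -> None:
        self.notes: list[Note] = []

    def add(self, note: Note) -> None:
        self.notes.append(note)

    def delete(self, note: Note) -> None:
        self.notes.remove(note)

    def move(self, note: Note, new_start: int) -> Note:
        return self._swap(note, replace(note, start=new_start))

    def resize(self, note: Note, new_duration: int) -> Note:
        return self._swap(note, replace(note, duration=new_duration))

    def transpose(self, semitones: int) -> None:
        self.notes = [replace(n, pitch=min(max(n.pitch + semitones, 0), 127))
                      for n in self.notes]

    def sounding_at(self, tick: int) -> list[Note]:
        """Notes whose bar covers `tick` (a vertical slice through the roll)."""
        return [n for n in self.notes if n.start <= tick < n.start + n.duration]

    def _swap(self, old: Note, new: Note) -> Note:
        # Notes are immutable, so an edit replaces the old bar with a new one.
        self.notes[self.notes.index(old)] = new
        return new

roll = PianoRoll()
roll.add(Note(pitch=60, start=0, duration=480))
roll.add(Note(pitch=64, start=480, duration=480))
roll.transpose(2)
print(roll.sounding_at(500))
```

Making `Note` immutable keeps every edit explicit: moving, resizing or transposing a bar replaces it with a new value, which keeps the list of bars easy to compare before and after an edit.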

The scope set by MIDI thus limits the soundscapes the composer is able to achieve, much as a graphic interpretation of a tree model could never be accurate at a resolution of 128×128 pixels (Manousakis, 42). Hence the view that, regardless of whether the software's source of input is digital, real-time, graphic, algorithmic code, or audio, and regardless of how time or wavelength segments are displayed, the MIDI interface will always have the final say.
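
The 128×128 analogy corresponds to a concrete property of MIDI 1.0: data bytes carry 7 bits, so note numbers, velocities and standard controller values each have only 128 possible steps (0–127). The hypothetical helper below, a sketch rather than part of any cited system, quantizes a continuous parameter onto that grid and shows how nearby but distinct values chosen by a composer or an algorithm can collapse onto the same MIDI value.

```python
def to_midi_value(x: float, lo: float, hi: float) -> int:
    """Quantize a continuous parameter in [lo, hi] to a 7-bit MIDI value (0-127).

    Hypothetical helper: values outside the range are clamped first.
    """
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    x = min(max(x, lo), hi)                  # clamp to the parameter's range
    return round((x - lo) / (hi - lo) * 127)

# Three distinct positions on a continuous control collapse onto one MIDI step.
samples = [0.503, 0.505, 0.507]
print([to_midi_value(v, 0.0, 1.0) for v in samples])
```

MIDI does offer finer resolution in specific places, such as the 14-bit pitch-bend message, but the default 7-bit grid illustrates the kind of limit described above.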

The more optimistic side of this debate disregards this technical constraint. Its proponents hold instead that it is primarily the composer’s control over the sequential time of the piece, together with the interface’s distinctive segmentation of timbre and time, that allows for an unprecedented musical revolution. Much of the academic dialogue advancing this case then turns to questions such as: What does this mean for society as a whole? Does this revolution extend beyond the musical sphere? If so, how?

Conclusion

Henri Bergson argues that, in order to define consciousness and freedom, a differentiation, or “un-mixing,” of time and space needs to occur (Lawlor and Leonard, 2016). This Bergsonian view supports the optimistic side of the debate concerning the questions stated above: algorithmic composition that uses L-systems in its production gives the user the power to re-segment both time and the spatial mapping of sound in an unprecedented way, thus restoring free will to the artist. If this approach is adopted by the creative community on a mass scale, these unconventional forms of consciousness could be recorded and spread among the general public.

Jonathan Murthy

Felix Navarrete

Megan Phipps

Giovanni Scala

Works Cited

Bovermann, Till. Musical Instruments in the 21st Century: Identities, Configurations, Practices. Springer, 2016.

Chapel, Rubén Hinojosa. Realtime Algorithmic Music Systems From Fractals and Chaotic Functions: Toward an Active Musical Instrument. Universitat Pompeu Fabra, Sept. 2003, http://mate.dm.uba.ar/~umolter/materias/geo-fractal-2-11/papers%20fractales%20y%20musica/fractal%20algorithmic%20music.pdf.

Collins, Nick. “The Analysis of Generative Music Programs.” Organised Sound, vol. 13, no. 3, Dec. 2008, pp. 237–48, doi:10.1017/S1355771808000332.

Deleuze, Gilles, and Martin Joughin. Negotiations: 1972-1990. Columbia University Press, 1997.

Edwards, Michael. “Algorithmic Composition: Computational Thinking in Music.” Commun. ACM, vol. 54, no. 7, July 2011, pp. 58–67, doi:10.1145/1965724.1965742.

Essl. “Algorithmic Composition.” Cambridge Companion to Electronic Music, Jan. 2008, pp. 107–25.

Fernandez, Jose David, and Francisco Vico. “AI Methods in Algorithmic Composition: A Comprehensive Survey.” arXiv:1402.0585 [cs], Feb. 2014, doi:10.1613/jair.3908.

Gray, Jonathan, et al. “Ways of Seeing Data: Toward a Critical Literacy for Data Visualizations as Research Objects and Research Devices.” Innovative Methods in Media and Communication Research, 1st ed., Palgrave Macmillan, 2016, pp. XXVII, 330, https://drive.google.com/drive/folders/0B3PVkKLx5rg8TDFVQzdzeE1XRFk.

Lawlor, Leonard, and Valentine Moulard Leonard. “Henri Bergson.” The Stanford Encyclopedia of Philosophy, edited by Edward N. Zalta, Summer 2016, Metaphysics Research Lab, Stanford University, 2016, https://plato.stanford.edu/archives/sum2016/entries/bergson/.

Lippe. Real-Time Interaction among Composers, Performers, and Computer Systems. Oct. 2017, https://www.researchgate.net/publication/228967400_Real-time_interaction_among_composers_performers_and_computer_systems.

Manousakis, Stelios. Musical L-Systems. The Royal Conservatory, June 2006, http://carlosreynoso.com.ar/archivos/manousakis.pdf.

Ochoa, Gabriela. On Genetic Algorithms and Lindenmayer Systems. http://www.cs.stir.ac.uk/~goc/papers/GAsandL-systems.pdf. Accessed 24 Oct. 2017.

Roads, Curtis. The Computer Music Tutorial. MIT Press, 1996.

Vandenneucker, Dominique. “Music Data Structures For Music Software Development.” Music Software Development, 2012, http://www.music-software-development.com/music-data-structures.html.

Venturini, Tommaso. “Visual Network Analysis.” Sciences Po médialab, http://www.medialab.sciences-po.fr/publications/visual-network-analysis. Accessed 6 Oct. 2017.