Facebook AI Protects Lives on Live Streaming

In an era when everyone lives online, Facebook, the biggest social platform, constantly adds new tools for its users. One attractive feature is live streaming. Beyond the chats and concerts we are used to seeing, live video has now shown its dark side: a troubling trend of teenagers using the feature to broadcast their suicides. With suicide rates rising alarmingly, Facebook has added AI to its existing suicide prevention tools.

The User’s Engagement with Live Streaming

“No matter where you are, Live lets you bring your friends and family right next to you to experience what’s happening together,” Facebook said in its December 2015 release. Although Facebook was not the first platform to offer live video, the feature quickly became popular because the streamer and the viewers can interact during the broadcast through comments and feedback.

What makes live streaming so appealing? In their research, Haimson and Tang identified four dimensions that make it engaging: immersion (the feeling of “being there”), immediacy, interaction, and sociality. Moreover, the fact that the video is live, and that something could happen at any moment, keeps viewers watching with interest. In another Microsoft Research study (Tang, Venolia and Inkpen), most of the interviewed streamers said they were motivated to live stream in order to develop their personal brand. Finally, the feature is an important resource for breaking news, offering what Andén-Papadopoulos calls “citizen camera-witnessing”: users spread their own view of events and participate actively in them.

Live Video/ Facebook

The Dark Side of Live Streaming

More than 10 deaths have been live-streamed on social platforms in the past two years. Public suicide is not new, but the fact that people choose to do it through live video, making others “witnesses,” is a troubling trend.

Is it a cry for help? Are they hoping that someone will save them? Do they want to share their pain with the world and make their own statement? The reasons someone takes their own life are many, and witnessing another person’s death can itself push others to do the same. Suicide is the second-leading cause of death for teens and young adults ages 15–34, according to the Centers for Disease Control and Prevention. Between 2007 and 2015, deaths by suicide among teens aged 15–19 increased 31% for boys and 50% for girls.

With more than 3.5 billion broadcasts on Facebook Live in the past two years, detecting harmful content is challenging. Because videos can stay online for hours or even days, they can go viral through widespread sharing. That was the case with Katelyn Nicole Davis, a 12-year-old girl who hanged herself outside her home in a 40-minute live video. The video remained on various websites for several days.

“We built this technology platform, so we can go and support whatever the most personal, emotional and raw ways people want to communicate are as time goes on,” said Zuckerberg. That same openness means people may also use live video to broadcast self-harm and acts of violence in front of hundreds of witnesses. Facebook builds tools, but they are not always used for good.

Facebook Artificial Intelligence and Tools Today

tips and support

In response to this alarming phenomenon, Facebook launched a campaign around its suicide prevention tools. The company hired an additional 3,000 people to join its Community Operations team and review reports quickly. It also added live chat with crisis support organizations, so users can reach out immediately and ask for help. Facebook created its own online page, “Facebook Safety,” with videos promoting the suicide prevention tools, the 24/7 review of reports, and emergency services. Users can reach partners such as the National Suicide Prevention Lifeline, which responds directly to reports of self-harm and connects the person with the help they need.

https://www.youtube.com/watch?v=K4dVz8XkHvw

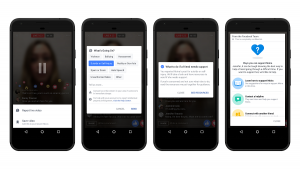

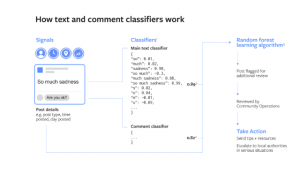

Before using AI, Facebook relied on its users to report a video before it could remove it and contact the streamer. Some videos were never reported at all, or were reported too late. In many cases this content triggers the “bystander effect” (Darley & Latané, 1968), a social psychological phenomenon in which individuals are less likely to help a victim when more people are watching. To fill this gap, in February 2018 Facebook expanded its existing suicide prevention tools with artificial intelligence that identifies posts expressing suicidal thoughts. When someone flags a friend’s video, the report goes to the Community Operations team, which reviews it to determine whether the person is at risk. Meanwhile, a machine learning classifier runs continuously to recognize posts that include keywords or phrases indicating thoughts of self-harm.

Live Reporter/ Facebook

How the classifier examines a post/ Facebook

One difficult issue Facebook had to deal with is that many phrases that might indicate suicidal intent, such as “kill” or “die,” are commonly used in other contexts. So the algorithm examines not only the content of the post but also its comments to check for relevance. When the Community Operations team confirms that a person is at risk, a window appears on that person’s screen asking whether they want to contact a friend and offering helpline numbers. In more urgent cases, Facebook contacts the local authorities.
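To make the “keywords plus comments” idea concrete, here is a toy sketch in Python. It is purely illustrative and is not Facebook’s system: the article describes a trained machine learning classifier, whereas this sketch assumes a hand-written scorer. The phrase lists, the `comment_weight` and `threshold` values, and the names `Post`, `count_matches`, `risk_score` and `needs_review` are all hypothetical. It shows two points from the text: an ambiguous word like “die” counts for little on its own, and worried comments from friends raise the score. A high score only routes the post to human reviewers.

```python
import re
from dataclasses import dataclass, field
from typing import List

# Hypothetical phrase lists for illustration only; not Facebook's actual features.
RISK_TERMS = {"kill", "die", "end it", "goodbye"}
CONCERN_PHRASES = {"are you ok", "can i help", "please call", "talk to me"}


@dataclass
class Post:
    text: str
    comments: List[str] = field(default_factory=list)


def count_matches(text: str, phrases: set) -> int:
    """Count how many distinct phrases appear in text as whole words."""
    lowered = text.lower()
    return sum(
        1 for phrase in phrases
        if re.search(r"\b" + re.escape(phrase) + r"\b", lowered)
    )


def risk_score(post: Post, comment_weight: float = 2.0) -> float:
    """Toy score: risk-term hits in the post, boosted by worried comments.

    Worried comments only count when the post itself contains a risk term,
    so "are you ok?" under an ordinary concert video is ignored.
    """
    keyword_hits = count_matches(post.text, RISK_TERMS)
    if keyword_hits == 0:
        return 0.0
    concern_hits = sum(count_matches(c, CONCERN_PHRASES) for c in post.comments)
    return keyword_hits + comment_weight * concern_hits


def needs_review(post: Post, threshold: float = 3.0) -> bool:
    """Flag the post for human review; the sketch takes no action itself."""
    return risk_score(post) >= threshold


if __name__ == "__main__":
    casual = Post("this traffic is killing me, I could die of boredom", ["lol same"])
    worried = Post("I want to die, goodbye everyone", ["are you ok?", "please call me"])
    print(risk_score(casual), needs_review(casual))    # 1.0 False
    print(risk_score(worried), needs_review(worried))  # 6.0 True
```

In this sketch the casual post scores 1.0 and is not flagged, while the worried post scores 6.0 and is sent for review. A real system would learn such signals from labeled reports rather than rely on fixed word lists.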

Facebook could easily disable Live streaming, or cut off a video as soon as it is reported for self-harm. Instead, Facebook “nudges” (Thaler & Sunstein, 2008) the person threatening suicide to reconsider, and offers help. “We learned from the experts and what they emphasized to us is that cutting off the stream too early removes the chance of someone being able to reach out and provide help,” said Jennifer Guadagno, Facebook’s lead researcher, in an interview.

Food for Thought

Today, our lives depend on digital media platforms. Facebook, with 2.2 billion monthly active users, tries to bring the world closer together. In cases of self-harm and suicide, users can help and support each other. With the help of artificial intelligence, Facebook is improving its efforts to detect such activity in order to build a safer community.

AI that relies on data implies constant surveillance of information, a “Big Brother is watching you” model (Agre, 1994) that tries to gather as much data as possible. But the question is: could AI prevent this social phenomenon? Could technology replace people? Could AI ever replace the emotional connection and support that people provide in a time of crisis? Technological innovation can bring patients closer to health care providers, friends, and family. However, a basic limitation of AI is that it lacks common sense and will, the very factors that drive people to act socially. Let AI help us improve our lives and achieve our goals, but in the meantime, let us also examine, through our social infrastructures, the causes behind these phenomena.

References

Agre, Philip E. Surveillance and Capture: Two Models of Privacy. The Information Society, 1994. 101-127.

Haimson, Oliver L. and Tang, John C. What Makes Live Events Engaging on Facebook Live, Periscope, and Snapchat. Microsoft Research Redmond, 2017 https://www.microsoft.com/en-us/research/wp-content/uploads/2017/05/CHILiveEvents.pdf

Tang, John C., Venolia, Gina, and Inkpen, Kori M. Meerkat and Periscope: I Stream, You Stream, Apps Stream for Live Streams. Microsoft Research Redmond, 2016. http://www.audentia-gestion.fr/MICROSOFT/PeriKat.pdf

Yeung, Karen. ‘Hypernudge’: Big Data as a mode of regulation by design. Routledge, 2017. 119-132.

Gillespie, Tarleton. The politics of ‘platforms’. New Media & Society, 2010. 355-359.

Facebook Head of Global Safety, Building a Safer Community With New Suicide Prevention Tools, Facebook News, 2017 https://newsroom.fb.com/news/2017/03/building-a-safer-community-with-new-suicide-prevention-tools/

Card, Catherine. How Facebook AI Helps Suicide Prevention, Facebook News, 2018 https://newsroom.fb.com/news/2018/09/inside-feed-suicide-prevention-and-ai/

Lavrusik, Vadim and Tran, Thai. Introducing Live Video and Collages, Facebook News, 2015. https://newsroom.fb.com/news/2015/12/introducing-live-video-and-collages/

National Suicide Prevention Lifeline, https://suicidepreventionlifeline.org/help-someone-else/safety-and-support-on-social-media/

Darley, J. and Latané, B. Bystander intervention in emergencies: diffusion of responsibility. Journal of Personality and Social Psychology, 1968. 377-383. https://pdfs.semanticscholar.org/432a/51ae6e67a9c7fdb7b97c4917da96bb3140cf.pdf

Cieciura, Jack. A Summary of the Bystander Effect: Historical Development and Relevance in the Digital Age. Inquiries Journal, 2016. http://www.inquiriesjournal.com/articles/1493/a-summary-of-the-bystander-effect-historical-development-and-relevance-in-the-digital-age

Andén-Papadopoulos, Kari. Citizen camera-witnessing: Embodied political dissent in the age of ‘mediated mass self-communication’. New Media & Society, 2014. 753-769.

Guynn, Jessica. Facebook takes steps to stop suicides on Live, USA TODAY, 2017. https://eu.usatoday.com/story/tech/news/2017/03/01/facebook-live-suicide-prevention/98546584/

Griffin, Andrew. Facebook Live suicides call attention to dark side of livestreaming. The Independent, 2017. https://www.independent.co.uk/life-style/gadgets-and-tech/news/facebook-live-streaming-app-mobile-suicide-death-harm-self-murder-danger-a8018621.html

Centers for Disease Control and Prevention. QuickStats: Suicide Rates for Teens Aged 15-19 Years, United States, 1975–2015, 2017. https://www.cdc.gov/mmwr/volumes/66/wr/mm6630a6.htm

Kleiman, Evan M. and Liu, Richard T. Social support as a protective factor in suicide: Findings from two nationally representative samples. Journal of Affective Disorders, 2013. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3683363/