Bubble Trouble – Venture Out of Your Filter Bubbles

The world we live in today has been given epithets such as “the fake news era”, “the post-truth world” and “the misinformation society”, in the press as well as in academic literature. These discussions call attention to how the news we encounter online is manipulated and how this, in turn, distorts our worldview. One of the main culprits held responsible is the phenomenon known as the filter bubble. In this article, we explore what filter bubbles are, what causes them and how they affect our news consumption habits, and we propose an intervention.

What are filter bubbles?

There is an overwhelming abundance of information online that is diverse in terms of sources, content, and viewpoints. This makes it hard for individual readers to decide which articles to read and which to pass over. In the mass media era, journalists, writers, and editors carried out this curatorial function; now, readers depend on algorithm-based systems that filter huge amounts of information and deliver personalized recommendations to each user.

This filtering can be explicit, where users select the topics they are interested in, or implicit, where their online behavior is used without solicitation to predict what they might be interested in (Haim et al. 332). Google News, for example, offers personalized news recommendations based on users’ past click behavior. Drawing on a data profile of the user, the algorithmically recommended stories are meant to match the user’s preferences and to adapt to changes in those preferences in order to predict future behavior (Liu et al. 31).

Most of the machine-learning models that deliver personalized news recommendations are built with the end goal of getting the user to click through. Feedback loops ensure that content and sources similar to the articles the user has visited are included, while those she is indifferent to are left out. This risks creating “filter bubbles” – a phenomenon caused by algorithms recommending content that the user is likely to agree with (Flaxman et al. 299). With minor variations in definition, these are also called “echo chambers” or “informational cocoons”.

Why is this a cause for concern?

Are filter bubbles as big a problem as they are made out to be? One could argue that preferring news about topics one is interested in is only human: people tend to avoid media content that does not match their interests (Zuiderveen Borgesius et al. 6).

According to empirical research by Haim et al., the claim that algorithmic personalization in Google News leads to filter bubbles is overstated (339). For instance, even users who explicitly chose to receive more sports stories were also recommended important political stories, although they had not picked that category. However, the study’s design was limited in focus, analyzing only interest categories. The authors do point out considerable source bias in Google News recommendations, arising from their susceptibility to clickbait and other SEO workarounds.

Even if scholars disagree on the extent to which these bubbles exist, there is widespread concern over the lack of transparency. The new gatekeepers of public opinion are algorithms stowed away in “black boxes” that are inaccessible or incomprehensible to the reader. If people do not realize that they are seeing pre-selected content, they might assume that everybody sees the same content, which could harm the democratic opinion-forming process (Zuiderveen Borgesius et al. 4). The stakes are high because users who regularly read partisan articles are exposed almost exclusively to one side of the political spectrum (Flaxman et al. 300). With social media becoming an important source of news recommendations for many people, it gets even harder to see the other side.

This concern became evident in the aftermath of the Brexit referendum and the 2016 US presidential election. Hern’s article in the Guardian, published ahead of the 2017 UK general election, is a case in point. While experts agreed that the filter bubbles created by social media algorithms would significantly affect how the country voted, they were scrambling for solutions to avoid “mistakes” caused by misinformation, such as the Brexit vote and Trump’s election. Hern cites a 2015 study to argue that sensitizing the public to how filter bubbles might be manipulating their opinions is all the more challenging because a majority of Facebook users are unaware of the curation behind what they see on the platform.

Research Objective

Based on this discussion, we wanted to propose an alternative recommendation system that sensitizes people to the selectivity of what they see and promotes exposure to a greater diversity of news topics.

Our research objective can be summed up as follows:

To make readers aware of their filter bubbles and encourage them to read more about topics that Google News and other recommender systems do not expose them to.

Methodology: Finding strategies to venture out of filter bubbles

In order to propose an intervention that achieves this objective, we started with a survey of existing apps in the space. These propose different methods to subvert the extreme personalization of news and burst filter bubbles.

Knowhere plans to burst filter bubbles by using AI to write unbiased stories based on actual news articles. There was little or no information on how its algorithm works, but we liked how it integrated user feedback into training the algorithm (even though this feature was locked for new users).

Flipfeed and Gobo target filter bubbles in social networks. Flipfeed “flips” your Twitter feed: it shows you what the feed of an actual Twitter user on the other end of the political spectrum looks like. Gobo introduces filters that let you set the ideological range, rudeness, seriousness and virality of the content you see on Facebook and Twitter. What we liked most about Gobo was its transparency in showing why a particular tweet was displayed, along with the option to see what these filters left out.

Read Across the Aisle is another relevant app that tries to expose the ideological filter bubbles readers fall into. It does this by combining a visual representation of the biases in your reading habits (a bubble diagram showing the different sources you get your news from and how much you rely on each) with turning the screen blue or red for individual articles. However, it does not suggest how you can add more diversity to your news diet.

Each of these four apps either actively changes readers’ news diets or sheds light on the partiality of the algorithms that show users some articles and not others. We wanted to propose a solution that does both.

In addition to this survey of apps, we also looked at relevant academic literature and trade publications to identify other concerns and strategies that should drive our intervention. A common thread was an insistence on involving users, accommodating their interests, and increasing awareness and transparency. This is key given the other adaptive news access systems our alternative would be competing with. Our solution will have to track readers’ interests and how they change over time, and incorporate their feedback on what we recommend they read (Billsus and Pazzani 148).

While this is usually done in “stealth mode” by observing click behavior, Piore argues for an alternative that puts the onus on readers to explore content from multiple sources and engage with it. As innovators, we can nudge users to do so (Resnick et al. 97). This can be done by making people aware of their filter bubbles and offering possible ways to transcend them. Stray suggests using information maps that portray the universe of available information and show readers the fraction of it they get to see. This way, the possibilities for alternative news consumption are made clear to readers without resorting to an autocratic approach.

Stray also raises an important concern: most innovation and research in this space has focused solely on ideological divides and how to transcend them. Promoting exposure to other countries and cultures is just as important in a global era.

Bringing all these insights together, our project seeks to intervene directly in our users’ news consumption practices. To do so, we want to make people aware of the partial perspectives that filtering algorithms produce, and to nudge them beyond these bubbles. Increasing users’ awareness of news events beyond the squirrel dying in their front yard is a priority for us. As an end goal, we hope our intervention will lead to greater plurality of topics, sources and regions in our readers’ news diets.

Bubble Trouble – Features and Outcomes

With the abundance of news and information online, algorithms and filters are here to stay. Our approach is to use filters productively, nudging people to recognize their filter bubbles and encouraging them to read about a more diverse set of topics. Our project can be realized through a combination of a browser extension and a mobile app.

Fig 1. The Bubble Trouble app’s log-in screen

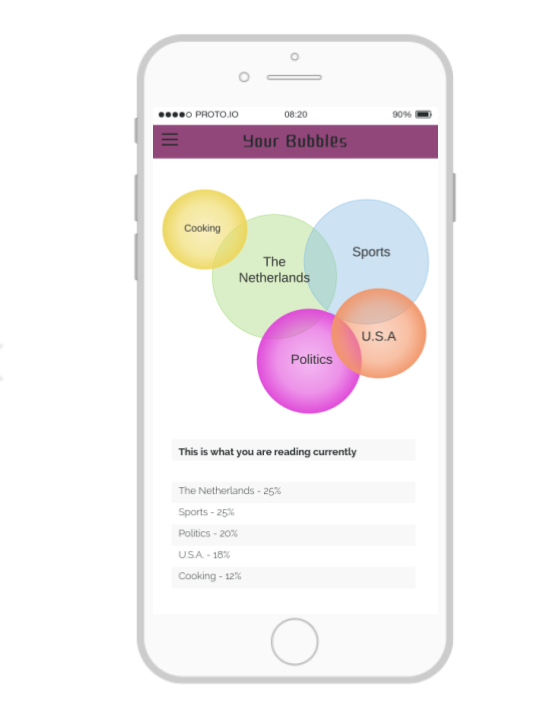

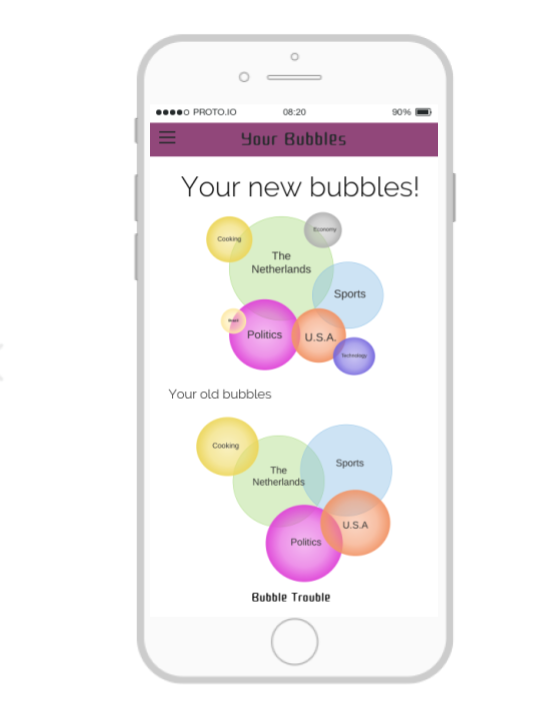

When you sign up, you will have to install a browser extension and download the mobile app. The browser extension analyzes your browsing history to determine where you get your news from and uses that data to create a visual representation of the filter bubbles you are in. When you open the app for the first time, you are greeted with this diagram, which shows the topics and regions you currently read about most.
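The write-up does not say how the extension turns browsing history into bubbles. A minimal sketch, assuming the extension has already tagged each visited news article with a topic and a region, is to count how often each value appears and treat the dominant ones as the user’s current bubbles. The `history` record shape, the function names and the 15% cut-off are all hypothetical:

```python
from collections import Counter

# Hypothetical cut-off: a topic or region counts as a "bubble" if it accounts
# for at least this share of the user's recent news reading.
BUBBLE_THRESHOLD = 0.15


def bubble_shares(history, key):
    """Return the fraction of visited articles per value of `key` ("topic" or "region")."""
    counts = Counter(article[key] for article in history)
    total = sum(counts.values())
    if total == 0:
        return {}
    # most_common() orders the result from largest to smallest share.
    return {value: n / total for value, n in counts.most_common()}


def current_bubbles(history, key, threshold=BUBBLE_THRESHOLD):
    """Return the values that dominate the reading history, i.e. the user's bubbles."""
    return {value for value, share in bubble_shares(history, key).items() if share >= threshold}


# Hypothetical history, already tagged with topic and region by the extension.
history = [
    {"topic": "politics", "region": "USA"},
    {"topic": "politics", "region": "USA"},
    {"topic": "sports", "region": "Netherlands"},
    {"topic": "cooking", "region": "USA"},
    {"topic": "politics", "region": "Netherlands"},
]

print(bubble_shares(history, "topic"))
print(current_bubbles(history, "region"))
```

The shares could be drawn directly as bubble sizes; here politics makes up 60% of the topic history, and both the USA and the Netherlands clear the cut-off as regional bubbles.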

Fig 2. The filter bubble visualization

Next, the user is invited to select topics and regions outside their current filter bubbles that they would like to read more about. For the user whose visualization appears in figure 2, the options therefore exclude the USA, the Netherlands, cooking, sports and politics. She might pick, for instance, economy, technology and Brazil. The user can also set how many recommended stories they would like to receive each day, and at what time.
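The selection step amounts to a set difference plus a small preferences record. The sketch below assumes a fixed catalogue of topics and regions; the catalogue contents, the `DigestPreferences` fields and the defaults of five stories at 08:00 are hypothetical illustrations, not part of the app as described:

```python
from dataclasses import dataclass
from datetime import time

# Hypothetical catalogue of topics and regions the app can recommend.
ALL_TOPICS = {"politics", "sports", "cooking", "economy", "technology", "health"}
ALL_REGIONS = {"USA", "Netherlands", "Brazil", "India", "Kenya"}


@dataclass
class DigestPreferences:
    topics: set
    regions: set
    stories_per_day: int
    delivery_time: time


def choose_outside_bubble(bubble_topics, bubble_regions, topics, regions,
                          stories_per_day=5, delivery_time=time(8, 0)):
    """Accept the user's picks only if every topic and region lies outside their bubbles."""
    allowed_topics = ALL_TOPICS - set(bubble_topics)
    allowed_regions = ALL_REGIONS - set(bubble_regions)
    rejected = (set(topics) - allowed_topics) | (set(regions) - allowed_regions)
    if rejected:
        raise ValueError(f"already in your bubbles or unknown: {sorted(rejected)}")
    if stories_per_day < 1:
        raise ValueError("stories_per_day must be at least 1")
    return DigestPreferences(set(topics), set(regions), stories_per_day, delivery_time)


# The example user from figure 2 picks economy, technology and Brazil.
prefs = choose_outside_bubble(
    bubble_topics={"politics", "sports", "cooking"},
    bubble_regions={"USA", "Netherlands"},
    topics={"economy", "technology"},
    regions={"Brazil"},
)
print(prefs.stories_per_day, prefs.delivery_time)
```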

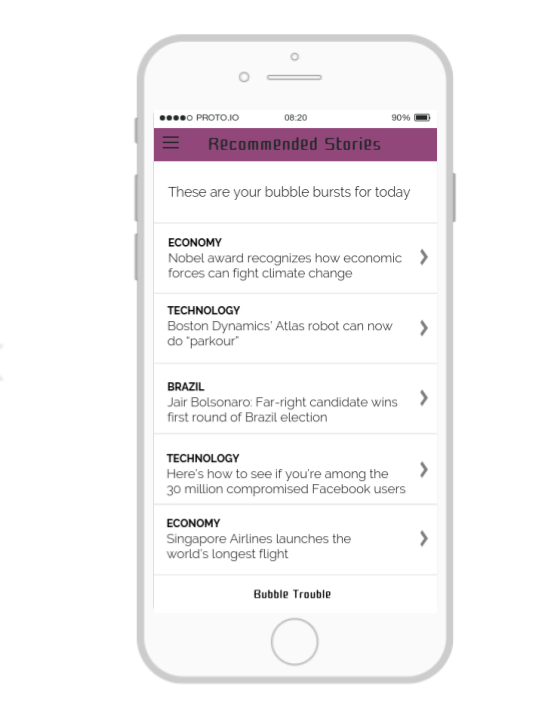

At the chosen time every day, users are sent the chosen number of news stories that fall under the categories they have selected. The picture below shows how this might look for a user who has chosen to read more stories on the economy, technology and Brazil.

Fig 3. Recommended news stories for the day

These articles are sourced through the News API using an API key. The News API indexes articles from over 30,000 publishers worldwide, with Google News being just one of its sources. This makes it possible to get headlines from all over the world rather than depending exclusively on the Google News API.
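As an illustration of the sourcing step, the sketch below only builds request URLs, so it runs without a network connection or a real key. It assumes that “News API” refers to NewsAPI.org and its `/v2/top-headlines` endpoint, which takes `country`, `category`, `pageSize` and `apiKey` query parameters. The mapping of the app’s “economy” topic onto NewsAPI’s `business` category is our own hypothetical choice:

```python
from urllib.parse import urlencode

# Assumption: "News API" refers to NewsAPI.org and its /v2/top-headlines endpoint.
TOP_HEADLINES_URL = "https://newsapi.org/v2/top-headlines"

# Hypothetical mapping from Bubble Trouble topic labels to NewsAPI categories.
TOPIC_TO_CATEGORY = {
    "economy": "business",
    "technology": "technology",
    "health": "health",
    "science": "science",
}


def build_headline_urls(topics, countries, api_key, page_size=20):
    """Build one top-headlines request URL per (country, topic) pair the user selected."""
    urls = []
    for country in countries:
        for topic in topics:
            params = {
                "country": country,  # two-letter country code, e.g. "br" for Brazil
                "category": TOPIC_TO_CATEGORY[topic],
                "pageSize": page_size,
                "apiKey": api_key,  # placeholder: load the real key from configuration
            }
            urls.append(f"{TOP_HEADLINES_URL}?{urlencode(params)}")
    return urls


for url in build_headline_urls(["economy", "technology"], ["br"], "YOUR_API_KEY"):
    print(url)
```

Fetching these URLs would need an HTTP client and a valid key; the returned articles would then go to the ranking step.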

Stories are then ranked by our algorithm according to an aggregate of their popularity (number of views) and coverage (number of sources that have covered the story), adjusted for any disparity between global and local coverage. That is, stories that have been covered extensively by regional media but have not been picked up by global news outlets are given preference and more visibility. A simple explanation of this process, along with the full documentation, will be made public on the website.
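The formula itself is not published here. One way to make “an aggregate of popularity and coverage, adjusted for global versus local disparity” concrete is a weighted sum of log-scaled views, log-scaled outlet count, and the share of coverage that comes from regional outlets. The `Story` fields, the weights and the log scaling are all assumptions for illustration, not the app’s documented algorithm:

```python
import math
from dataclasses import dataclass


@dataclass
class Story:
    title: str
    views: int           # popularity: number of views
    local_sources: int   # regional outlets that covered the story
    global_sources: int  # international outlets that covered the story


# Hypothetical weights; the write-up does not say how the terms are combined.
W_POPULARITY = 1.0
W_COVERAGE = 1.0
W_GAP = 2.0


def score(story: Story) -> float:
    """Aggregate popularity and coverage, plus a boost for regional-only coverage."""
    coverage = story.local_sources + story.global_sources
    popularity_term = math.log1p(story.views)  # log damps very large view counts
    coverage_term = math.log1p(coverage)
    # Share of coverage that is regional: close to 1.0 when global outlets have not picked it up.
    gap_term = story.local_sources / coverage if coverage else 0.0
    return W_POPULARITY * popularity_term + W_COVERAGE * coverage_term + W_GAP * gap_term


def rank(stories):
    """Highest-scoring stories first."""
    return sorted(stories, key=score, reverse=True)


stories = [
    Story("Global wire story", views=50_000, local_sources=5, global_sources=40),
    Story("Regional story", views=20_000, local_sources=30, global_sources=1),
]
for story in rank(stories):
    print(f"{score(story):.2f}  {story.title}")
```

With these weights the regional story ranks first despite having fewer views and outlets, because almost all of its coverage is regional. Raising or lowering `W_GAP` controls how strongly that preference applies.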

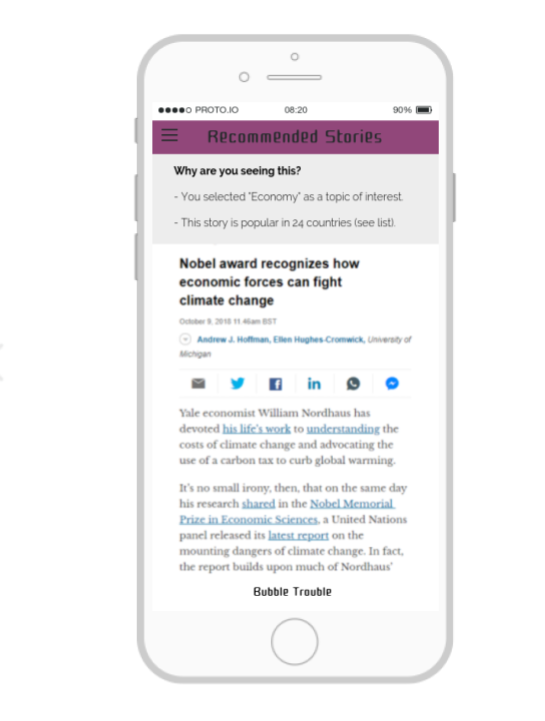

To stay true to our emphasis on transparency in the news curation process, readers can click a button to find out why they are seeing a particular article. In the example below, the explanation specifies which of the user’s chosen topic categories the article falls under, as well as the criteria used to measure the story’s relevance.
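An explanation of this kind can be generated from the same signals the ranker uses. The sketch assumes each story is a dictionary with `topic`, `region`, `views`, `local_sources` and `global_sources` fields, and uses a hypothetical 20% cut-off to decide when a story counts as under-covered by global outlets:

```python
# Hypothetical cut-off: below this share of global coverage, a story counts as under-covered globally.
GLOBAL_SHARE_CUTOFF = 0.2


def why_am_i_seeing_this(story, chosen_topics, chosen_regions):
    """Turn the signals used for ranking into plain-language reasons for one story."""
    reasons = []
    if story["topic"] in chosen_topics:
        reasons.append(f"You asked for more stories about {story['topic']}.")
    if story["region"] in chosen_regions:
        reasons.append(f"You asked for more stories from {story['region']}.")
    reasons.append(f"Popularity: {story['views']:,} views.")
    total = story["local_sources"] + story["global_sources"]
    reasons.append(f"Coverage: {total} outlets, {story['local_sources']} of them regional.")
    if total and story["global_sources"] / total < GLOBAL_SHARE_CUTOFF:
        reasons.append("Boosted: widely covered by regional media but rarely by global outlets.")
    return reasons


# Hypothetical story for a user who chose economy, technology and Brazil.
story = {
    "title": "Example story",
    "topic": "economy",
    "region": "Brazil",
    "views": 20_000,
    "local_sources": 30,
    "global_sources": 1,
}
for reason in why_am_i_seeing_this(story, {"economy", "technology"}, {"Brazil"}):
    print(reason)
```

Deriving the explanation from the ranking inputs, rather than writing it separately, keeps the stated reasons consistent with what actually drove the recommendation.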

Fig 4. ‘Why are you seeing this (story)?’

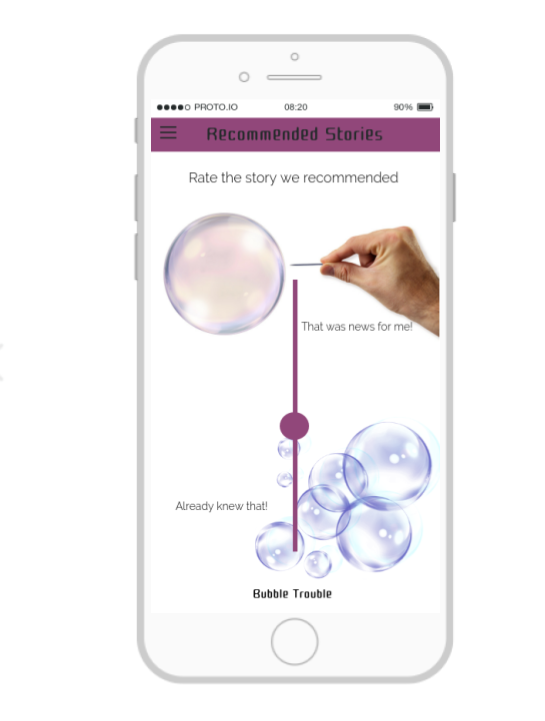

To incorporate user feedback and train our algorithm, we let users choose between “I already knew that” and “That was news for me” (see figure 5). This allows us to prioritize novelty, in addition to users’ interests, when making recommendations.
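A simple way to turn these binary answers into a ranking signal is an exponential moving average of “was this news?” per topic and region. This is a sketch under our own assumptions. The text does not say how feedback enters the model, and the `NoveltyTracker` class, the 0.5 prior and the 0.2 learning rate are hypothetical:

```python
from collections import defaultdict

# Hypothetical learning rate: how strongly each new answer moves the estimate.
ALPHA = 0.2


class NoveltyTracker:
    """Running estimate, per (topic, region), of how often stories were new to the user."""

    def __init__(self, prior=0.5):
        # Unseen (topic, region) pairs start at a neutral prior.
        self.novelty = defaultdict(lambda: prior)

    def record(self, topic, region, was_news):
        """was_news=True for "That was news for me", False for "I already knew that"."""
        key = (topic, region)
        target = 1.0 if was_news else 0.0
        # Exponential moving average: nudge the estimate toward the latest answer.
        self.novelty[key] += ALPHA * (target - self.novelty[key])

    def novelty_score(self, topic, region):
        """Value in [0, 1] that a ranker could add to a story's score."""
        return self.novelty[(topic, region)]


tracker = NoveltyTracker()
tracker.record("economy", "Brazil", was_news=True)
tracker.record("technology", "USA", was_news=False)
print(tracker.novelty_score("economy", "Brazil"), tracker.novelty_score("technology", "USA"))
```

A moving average keeps recent answers weighted more heavily, so the estimate can follow the user as their knowledge of a region grows.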

Fig 5. Feedback mechanism for recommended articles

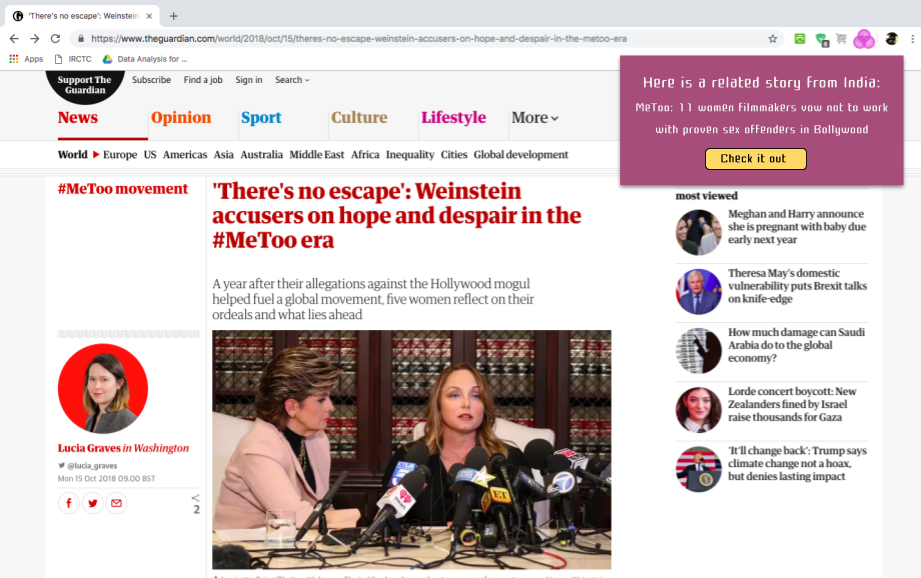

Because our browser extension monitors what users read outside the app, we have built in a feature aimed at increasing exposure as well as app usage and “stickiness”. When a user reads a story in their browser, our extension analyzes the article metadata to determine the topic, region and keywords it pertains to. It then communicates with the app to surface similar stories from a less explored region. Check out this example:

Fig 6. Push notification

Here, a user reading a story on the #MeToo campaign in the US is shown a notification inviting them to explore a similar story that is getting a lot of coverage in India. Clicking the button takes them to the story within the Bubble Trouble app.
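The matching behind such a notification can be sketched in two steps: keep candidate stories whose keywords overlap enough with the article being read, then pick the one from the region the user reads least about. Keyword overlap is measured here with Jaccard similarity. That metric, the 0.3 threshold and the example stories are our assumptions, not necessarily the app’s method:

```python
def jaccard(a, b):
    """Overlap between two keyword collections, from 0.0 (disjoint) to 1.0 (identical)."""
    a, b = set(a), set(b)
    return len(a & b) / len(a | b) if a | b else 0.0


def suggest_from_less_explored_region(current, candidates, region_share, min_similarity=0.3):
    """Pick a similar story from the region the user has read least about.

    region_share maps a region to its share of the user's reading; regions the
    user has never read about count as 0.0. Returns None if nothing is similar enough.
    """
    similar = [
        c for c in candidates
        if c["region"] != current["region"]
        and jaccard(c["keywords"], current["keywords"]) >= min_similarity
    ]
    if not similar:
        return None
    return min(similar, key=lambda c: region_share.get(c["region"], 0.0))


# Hypothetical example data mirroring the #MeToo scenario.
current = {"title": "#MeToo campaign in the US", "region": "USA",
           "keywords": ["metoo", "harassment", "campaign"]}
candidates = [
    {"title": "#MeToo in the UK parliament", "region": "UK",
     "keywords": ["metoo", "harassment", "parliament"]},
    {"title": "#MeToo in India", "region": "India",
     "keywords": ["metoo", "harassment", "film"]},
    {"title": "Football league results", "region": "Brazil",
     "keywords": ["football", "league"]},
]
region_share = {"USA": 0.6, "UK": 0.3, "Netherlands": 0.1}

suggestion = suggest_from_less_explored_region(current, candidates, region_share)
print(suggestion["title"] if suggestion else "no suggestion")
```

Both the UK and India stories pass the similarity filter, but the user has never read about India, so that story is surfaced.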

After a month of using the app, users are shown a new filter bubble diagram that tracks their progress. In figure 7, you can see that the user has started exploring some new bubbles in addition to the content they used to read. They are then asked whether they want to keep reading about the topics they chose last month or explore something new.

Fig 7. Updated filter bubble visualization

The app uses filters to counter defective filters. Like the filters we problematize, ours is also partial and leaves out articles on topics the user has not chosen to read more about. To make this apparent, the app will include a “What am I not seeing” section with news stories on topics the user does not currently read. It will look like any other news feed, but will be ordered by the ranking system described above.
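The “What am I not seeing” feed can be sketched as the complement of the user’s filters, ordered by rank. The sketch assumes each story already carries a numeric `score` from the ranking step. The story titles and scores are hypothetical example data:

```python
def what_am_i_not_seeing(stories, bubble_topics, chosen_topics, limit=10):
    """Return stories on topics the user neither reads now nor has chosen, best-ranked first.

    Assumes each story already carries a "score" from the ranking step.
    """
    excluded = set(bubble_topics) | set(chosen_topics)
    unseen = [s for s in stories if s["topic"] not in excluded]
    return sorted(unseen, key=lambda s: s["score"], reverse=True)[:limit]


# Hypothetical ranked stories.
stories = [
    {"title": "Election results", "topic": "politics", "score": 15.1},
    {"title": "New vaccine trial", "topic": "health", "score": 14.2},
    {"title": "Chip export rules", "topic": "technology", "score": 13.9},
    {"title": "Glacier retreat study", "topic": "science", "score": 14.8},
]
for s in what_am_i_not_seeing(stories, {"politics", "sports", "cooking"}, {"economy", "technology"}):
    print(s["title"])
```

Excluding both the existing bubbles and the newly chosen topics keeps this feed focused on what the user’s current filters still hide.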

Conclusion

Using recommendations, push notifications, and a visual representation of filter bubbles, Bubble Trouble realizes Stray’s vision of a filtering algorithm that pushes the boundaries of readers’ interests and delivers what they did not know they wanted. Further, it offers users a way to wrest back control over the news articles they see online.

What primarily sets it apart from other apps in this space is its intent to promote exposure to news stories from other parts of the world. Also, unlike other interventions, we emphasize transparency and involve the user in key decisions about their news consumption habits.

Further research (or even a Bubble Trouble 2.0 update) could look at ways of integrating ideological diversity into this project. While we consider ideological plurality an important part of moving beyond filter bubbles, operationalizing it remains extremely challenging because we source stories from all over the world.

Another limitation is the limited room for collaboration. The feedback captured for each news story is still binary and focuses solely on whether the user already knew about it. This leaves little room for users to contest the version of the story they were presented with or to actively boost its visibility among other readers.

Even with these limitations, Bubble Trouble offers a way of reconceptualizing the filtering algorithms used by news recommender systems in order to foster healthier, more holistic news consumption practices. With the participation of an enthusiastic community of users, researchers, industry experts and developers, we believe this app has the potential to be a first step in the right direction.

References

Billsus, Daniel, and Michael J. Pazzani. ‘User Modeling for Adaptive News Access’. User Modeling and User-Adapted Interaction, vol. 10, no. 2, June 2000, pp. 147–80.

Das, Abhinandan S., et al. ‘Google News Personalization: Scalable Online Collaborative Filtering’. Proceedings of the 16th International Conference on World Wide Web – WWW ’07, ACM Press, 2007, p. 271.

Flaxman, Seth, et al. ‘Filter Bubbles, Echo Chambers, and Online News Consumption’. Public Opinion Quarterly, vol. 80, no. S1, Jan. 2016, pp. 298–320.

Haim, Mario, et al. ‘Burst of the Filter Bubble?’ Digital Journalism, vol. 6, no. 3, Mar. 2018, pp. 330–43.

Hern, Alex. ‘How Social Media Filter Bubbles and Algorithms Influence the Election’. The Guardian, 22 May 2017, https://www.theguardian.com/technology/2017/may/22/social-media-election-facebook-filter-bubbles.

Liu, Jiahui, et al. ‘Personalized News Recommendation Based on Click Behavior’. Proceedings of the 15th International Conference on Intelligent User Interfaces – IUI ’10, ACM Press, 2010, p. 31.

Pariser, Eli. ‘When the Internet Thinks It Knows You’. The New York Times, 22 May 2011, https://www.nytimes.com/2011/05/23/opinion/23pariser.html.

Piore, Adam. ‘Technologists Are Trying to Fix the “Filter Bubble” Problem That Tech Helped Create’. MIT Technology Review, https://www.technologyreview.com/s/611826/technologists-are-trying-to-fix-the-filter-bubble-problem-that-tech-helped-create. Accessed 1 Oct. 2018.

Resnick, Paul, et al. ‘Bursting Your (Filter) Bubble: Strategies for Promoting Diverse Exposure’. Proceedings of the 2013 Conference on Computer Supported Cooperative Work Companion – CSCW ’13, ACM Press, 2013, p. 95.

Stray, Jonathan. ‘Are We Stuck in Filter Bubbles? Here Are Five Potential Paths Out’. Nieman Lab, 11 July 2012, http://www.niemanlab.org/2012/07/are-we-stuck-in-filter-bubbles-here-are-five-potential-paths-out.

Zuiderveen Borgesius, Frederik, et al. Should We Worry About Filter Bubbles? SSRN Scholarly Paper, ID 2758126, Social Science Research Network, 2 Apr. 2016, https://papers.ssrn.com/abstract=2758126.