Finally it comes together

When I was writing my thesis at the Theatre School back in 2008, I was really excited about doing research and reading theoretical and academic work on the use of video in art and theatre. I liked it so much that I chose to follow the BA Media & Culture and the MA New Media at the University of Amsterdam. Not because I wanted to be an academic or a researcher. It was all for myself: to get a broader background and to bring different views on public issues (which are often the starting point for a performance) into my own (art) work and other projects. I had the feeling that it should work, that it is possible to use an academic view to create artistic and critical work. The moment that it actually worked has finally arrived.

For the New Media Practices course, we had to do a project in teams. The result of our team's project was, as I like to call it, a critical artwork. Everyone had their part in the project, and together we designed and built a great first version of our ideas. In this blog post I will briefly describe our idea and then tell a bit more about the technical side of it, as I was responsible for that part.

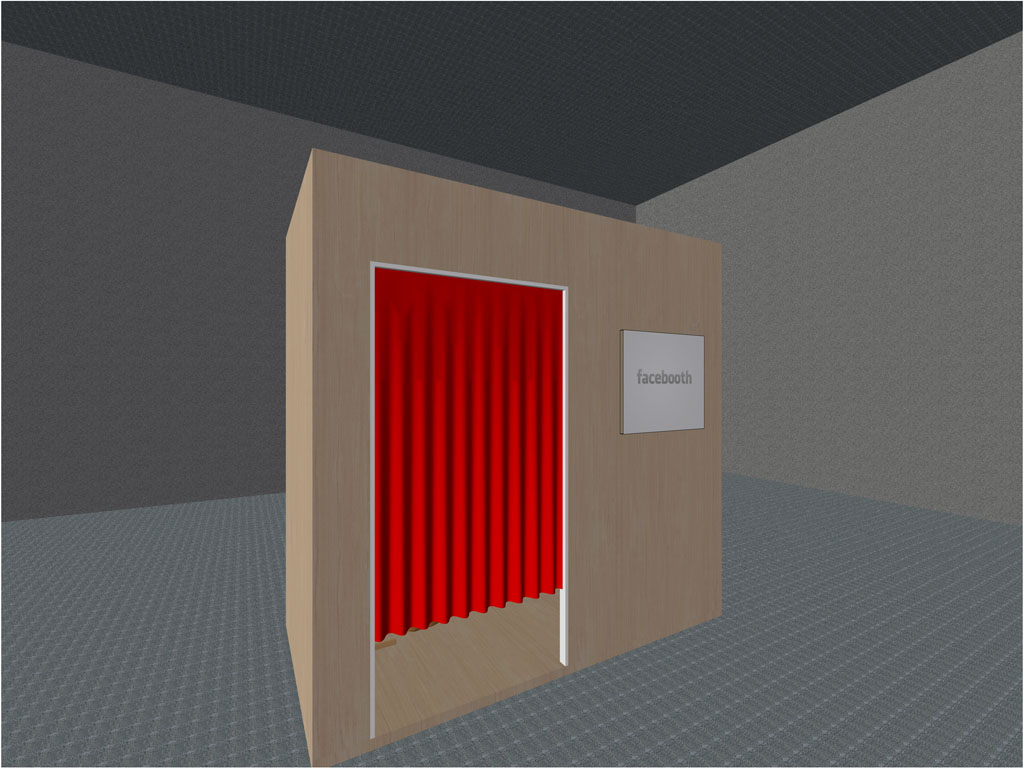

Facebooth

Everyone who is active on social media shares a lot of (personal) information via websites such as Facebook. But it is not only the person him- or herself who creates a virtual representation; friends (and maybe enemies) and family are also responsible for the digital self of the people they know. When you are tagged in a photo or mentioned in someone else’s post, your digital identity grows and takes on a clearer shape. But do we actually know what our digital self looks like? Does it look like who we ‘really’ are? Are we aware of our digital self?

The Facebooth is more or less a photo booth that shows the user his or her digital representation. Instead of a camera taking a picture, there is a mirror inside. When people look in the mirror, they are confronted with a digital layer that is projected onto it. The person in front of the mirror sees his or her reflection with the digital information about him or her floating in the virtual world of the mirror. When the person moves around, the digital sphere moves along, as if it were really a part of the one looking in the mirror. With hand gestures, people can even interact with the projected content.
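To make the idea of content that “moves along” with the person a bit more concrete, here is a small Python sketch of how items could be placed around a tracked head position. It is not how our Quartz Composer patch works internally; the `Item` class, the circular layout, and the assumption that the head position arrives normalized to 0..1 and already matches the mirror image are all hypothetical choices for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Item:
    """One piece of profile content floating around the user (hypothetical)."""
    label: str
    angle: float  # position on the circle around the head, in radians


def step(items, dt, speed=0.5):
    """Advance every item along its circle so the content keeps floating."""
    for item in items:
        item.angle = (item.angle + speed * dt) % (2 * math.pi)


def overlay_positions(head_x, head_y, items, radius, width, height):
    """Return projector pixel positions for items circling the head.

    head_x and head_y are assumed to be normalized to 0..1 and to match
    the mirror image already (0, 0 is the top-left of the projection).
    """
    cx = head_x * width
    cy = head_y * height
    positions = []
    for item in items:
        x = cx + radius * math.cos(item.angle)
        y = cy + radius * math.sin(item.angle)
        # Clamp so content never leaves the projected area.
        x = min(max(x, 0.0), width)
        y = min(max(y, 0.0), height)
        positions.append((item.label, round(x), round(y)))
    return positions
```

Calling `overlay_positions` every frame with the latest head position makes the cloud of content follow the person, while `step` keeps it drifting even when the person stands still.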

Facebook

Since a lot of people nowadays use Facebook as their main social network site, the information published there tends to be accurate. No money is needed to use the Facebooth: the system is activated when the user logs in with his or her Facebook account. After granting access to some information (such as photos, tagged photos and wall posts), the user is able to see his or her digital counterpart. The information shown is randomly selected, and very personal. People have been using Facebook for years, and almost all of that information is still stored somewhere on Facebook. People often don’t really know what their digital self looks like. With the Facebooth, people see different personalized information in the mirror, which may cause a shock effect, especially if they see pictures or status updates of ‘that one party a few summers back, which others shouldn’t know about’.
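The random selection step could look something like the Python sketch below. It deliberately leaves out the Facebook login and data request entirely; the `profile` dictionary and its category names are hypothetical stand-ins for whatever data the user agreed to share.

```python
import random


def pick_items(profile, count, rng):
    """Pick `count` random items from all permitted categories combined.

    `profile` maps a category name (e.g. "photos") to a list of items; it
    stands in for data already fetched from Facebook (not shown here).
    """
    pool = [(category, item) for category, items in profile.items() for item in items]
    # random.Random.sample picks without repetition; never ask for more than exists.
    return rng.sample(pool, min(count, len(pool)))
```

Passing in a `random.Random` instance keeps the selection reproducible while testing, and a fresh, unseeded one gives every visitor a different mix.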

Kinect

This was my first project using the power of the Xbox 360 Kinect. As most people know, it was developed as a controller for Microsoft’s Xbox 360 game console. The Kinect is able to track people and their movements, so the body of the player becomes the physical controller of the game. I can imagine that this helps the player feel more kinected to the game and its virtual world. Luckily for me, other people wanted to get the Kinect working with a Mac computer, so they could use it in many different ways to interact with the computer and its applications.

One of the most beautiful things about the Kinect is its ability to see depth. Because of that, the Kinect is able to see movements in space and track them. Not only the distance of the user, but also the distance between the player’s hands can be tracked and used to trigger anything. The sky is the limit, or maybe even further than that.
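As an example of using the distance between the hands as a trigger, here is a minimal Python sketch. It assumes each hand arrives as an (x, y, z) position in millimetres; the thresholds and the `HandDistanceTrigger` class are hypothetical. The two thresholds add hysteresis, so jitter in the tracking data around a single threshold does not fire the trigger over and over.

```python
import math


class HandDistanceTrigger:
    """Fires once when the hands move further apart than `open_at`,
    and re-arms only after they come closer than `close_at`."""

    def __init__(self, open_at, close_at):
        if close_at >= open_at:
            raise ValueError("close_at must be smaller than open_at")
        self.open_at = open_at
        self.close_at = close_at
        self.armed = True

    def update(self, left_hand, right_hand):
        """Feed one frame of hand positions; return True when the gesture fires."""
        d = math.dist(left_hand, right_hand)  # Euclidean distance in 3D
        if self.armed and d > self.open_at:
            self.armed = False
            return True  # hands spread apart: trigger
        if not self.armed and d < self.close_at:
            self.armed = True  # hands back together: ready for the next gesture
        return False
```

With `open_at=800` and `close_at=400`, spreading the hands fires once, and the trigger only fires again after the hands have come close together in between.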

After a lot of research and installing different software, I found a few good applications to use for our project. Instead of us doing everything ourselves, the biggest part had already been done by some great people in the open source community. I found two different applications that were able to send information about the tracked user (they call it a ‘skeleton structure’) via OSC (Open Sound Control) to any other program on the computer. OSC is often seen as the successor to MIDI.

One of the programs, OSCeleton, is very powerful and robust. But it requires installing a lot of drivers and other software packages: it takes about an hour of work to get it all up and running. The good thing about it is that it’s able to track up to six people! Although we needed just one tracked person for this project, this program could help me out in some future projects I have in mind. The second program, Synapse, is a lot easier: just download the app and run it. That’s it! The downside is that it can only track one person at a time, and that it demands a lot more from your processor. For me, Synapse was good enough and easier to work with for the moment.
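OSC messages themselves are simple binary packets. As a rough sketch of what travels between a tracking program and the receiving application, here is a minimal encoder and decoder in Python following the OSC 1.0 layout: a null-padded address string, a null-padded type-tag string starting with a comma, then big-endian 32-bit arguments. It only handles int, float and string arguments. The `/joint` message with a joint name, user id and x/y/z values is an illustrative assumption loosely modeled on OSCeleton output, not its documented format.

```python
import struct


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_message(address: str, *args) -> bytes:
    """Build an OSC message from int, float and str arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
        elif isinstance(arg, str):
            tags += "s"
            payload += _pad(arg.encode("ascii"))
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _pad(address.encode("ascii")) + _pad(tags.encode("ascii")) + payload


def _read_string(data: bytes, offset: int):
    """Read a padded OSC string; return it and the offset just past the padding."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    offset = end + 1
    offset += -offset % 4  # skip padding up to the next 4-byte boundary
    return text, offset


def decode_message(data: bytes):
    """Parse an OSC message into (address, [arguments])."""
    address, offset = _read_string(data, 0)
    tags, offset = _read_string(data, offset)
    if not tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    args = []
    for tag in tags[1:]:
        if tag == "i":
            args.append(struct.unpack_from(">i", data, offset)[0])
            offset += 4
        elif tag == "f":
            args.append(struct.unpack_from(">f", data, offset)[0])
            offset += 4
        elif tag == "s":
            text, offset = _read_string(data, offset)
            args.append(text)
        else:
            raise ValueError(f"unsupported type tag: {tag}")
    return address, args
```

In a real setup these bytes would travel as UDP datagrams (for example via Python’s `socket` module) to the port the receiving program listens on; the tracking apps and Quartz Composer plugins take care of all of this for you.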

Quartz Composer

Now that I was able to use the Kinect with my Mac, the next step was to create the virtual world of the person standing in front of the mirror. There were a lot of possibilities, but the fastest way I found to animate live, dynamic content is via Core Animation and Core Image, both part of the Mac OS X operating system. Because they are built into the system, they are really fast and smooth, and not very heavy on the processor. The easiest way to make use of these parts is to create everything in Quartz Composer. The definition given on Wikipedia is “Quartz Composer is a node-based visual programming language provided as part of the Xcode development environment in Mac OS X for processing and rendering graphical data.” ((http://en.wikipedia.org/wiki/Quartz_Composer))

It wasn’t my first time using Quartz Composer, but this project was more complicated than my earlier ones, so I had to test a lot to get everything working. There was one little ‘problem’. The OSC data sent by Synapse could be received by Quartz Composer, but only via a separate application (written in Max/MSP) that passed on just the information needed in Quartz Composer (e.g. the position of the head). With that value, I was able to trigger something within Quartz Composer, but the possibilities were limited and not very comfortable to work with.
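The job that the small Max/MSP application did, passing on only the head position, can be sketched in Python as a filter over already-decoded OSC messages. The `/head_pos` address, the coordinate ranges, and the choice to scale the values to 0..1 are hypothetical; the real address names depend on the tracking program.

```python
from typing import Iterable, Iterator, List, Tuple

Message = Tuple[str, List[float]]

# Hypothetical address for the head joint; check the documentation of the
# tracking program (Synapse or OSCeleton) for the real message names.
HEAD_ADDRESS = "/head_pos"


def normalize(value: float, low: float, high: float) -> float:
    """Map value from [low, high] to [0, 1], clamped at both ends."""
    t = (value - low) / (high - low)
    return min(max(t, 0.0), 1.0)


def head_only(messages: Iterable[Message], x_range, y_range) -> Iterator[Tuple[float, float]]:
    """Drop every message except the head position and yield it as a
    normalized (x, y) pair that is easy to use in a visual patch."""
    for address, args in messages:
        if address != HEAD_ADDRESS or len(args) < 2:
            continue
        yield normalize(args[0], *x_range), normalize(args[1], *y_range)
```

Reducing the skeleton stream to a single pair of numbers keeps the receiving side simple, but it also shows why this route felt limiting: everything except the head is thrown away before it reaches Quartz Composer.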

Again, I was a bit lucky: some people had created a plugin for Quartz Composer that uses the OSC information straight from OSCeleton or Synapse. And that’s all I needed. The rest was just a lot of thinking, testing and creating. For those who want to try it out, download the Tryplex Toolkit. It contains both apps (OSCeleton and Synapse), as well as the Quartz Composer plugins and patches. There’s a very good installation guide on the website as well.

I’m very happy with the final result of this project. The mirror is actually there, with the digital information around the mirrored image. But I’m even more excited about the possibilities of the Kinect together with Quartz Composer. I already have some cool ideas, and I hope to find a way to realize them in the near future.