Google’s DeepDream: Algorithms on LSD

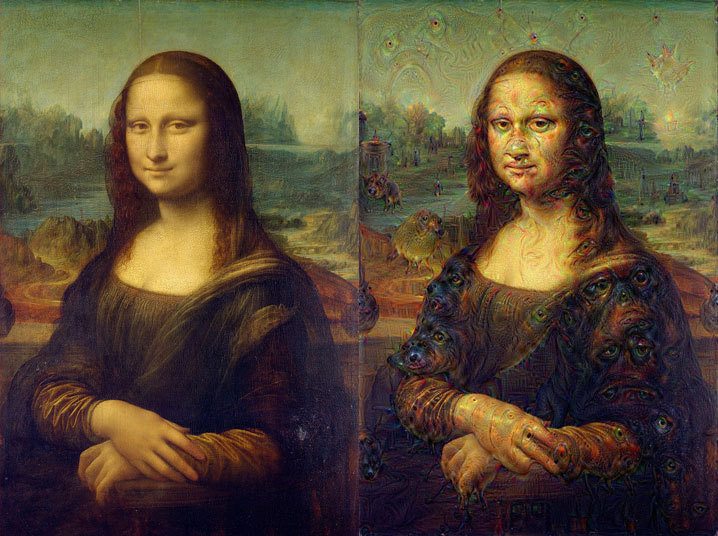

Last July, a flood of strange, psychedelic images swept across social media. From Edvard Munch’s The Scream to porn and landscapes, every kind of image sprouted surreal, hallucinatory eyes, birds and dogs, recreating something close to an LSD experience. Google had just released its artificial neural network program DeepDream. One of the most important elements of AI, as of human intelligence, is the capacity of software and machines to learn. Neural networks, the technique behind what is now called deep learning, “are one tool of machine learning, the field of computer science focused on building trainable systems for pattern recognition and predictive modeling” (Auerbach). Google’s latest venture in the field shows how far AI has come and what we can expect from machines’ ‘creativity’.

How do machines see?

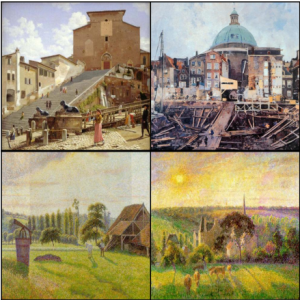

Neural networks are not a new tool of AI; they have been in use for quite some time, but research is now flourishing thanks to huge improvements in processing power. What is new is the range of capabilities such networks now have. Familiar examples include image recognition and classification. For instance, researchers have developed software that accurately recognizes the artist and the style of a painting. To train their algorithm, they assembled a database of more than 80,000 paintings by more than 1,000 artists spanning 15 centuries, covering 27 different styles, each with more than 1,500 examples, and also classified by genre (Saleh and Elgammal).
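As a rough illustration of how a classifier learns styles from labeled examples, here is a minimal Python sketch. It is not Saleh and Elgammal’s method, which learns a distance metric over visual features; it swaps in a much simpler nearest-centroid classifier, and the three features and their values are hypothetical stand-ins for what a real system would extract from each painting.

```python
# Toy sketch: a nearest-centroid "style" classifier.
# ASSUMPTION: each painting is already reduced to a small feature vector;
# the three features below are hypothetical and the values made up.
# This is NOT the metric-learning method of Saleh and Elgammal.
from math import dist


def train_centroids(examples):
    """examples: list of (feature_vector, style_label) pairs.
    Returns the mean feature vector (centroid) of each style."""
    sums, counts = {}, {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def classify(centroids, features):
    """Assign the style whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical features: (brightness, color saturation, edge density), each 0..1.
training = [
    ((0.8, 0.7, 0.3), "Impressionism"),
    ((0.7, 0.8, 0.2), "Impressionism"),
    ((0.3, 0.2, 0.8), "Cubism"),
    ((0.2, 0.3, 0.9), "Cubism"),
]
centroids = train_centroids(training)
print(classify(centroids, (0.75, 0.65, 0.25)))
```

"Training" here is just averaging the labeled examples; a real system learns far richer features from tens of thousands of paintings, but the principle of learning from labeled examples is the same.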

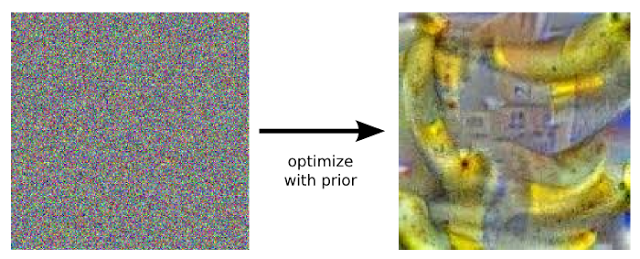

Where the painting classifier learns to recognize different artistic styles, DeepDream recognizes small regions of an image that look like patterns it has learned, and then modifies the original image so that it more closely resembles those archetypal patterns. More striking still is DeepDream’s ability to find an archetype in an image that “is total garbage” (Auerbach). This is easy to see in the following pictures, where DeepDream was asked to find bananas in random noise. As can be seen, DeepDream gradually reshaped the noise until it showed the archetypal image of a banana.
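The Google researchers describe this process as starting from an image full of random noise and gradually tweaking it towards what the network considers a banana (Mordvintsev, Olah, and Tyka). The key idea is that the network’s weights stay fixed while the image itself is changed by gradient ascent. The minimal Python sketch below assumes a hypothetical linear “banana detector” on a 4×4 image in place of a real trained network, and uses a simple L2 penalty as a crude stand-in for the natural-image prior the researchers mention.

```python
# Toy sketch of "find a banana in noise": gradient ascent on the INPUT image.
# ASSUMPTION: the "banana detector" is a hypothetical linear template over a
# tiny 4x4 grayscale image, not a real trained network.
import math
import random

# Hypothetical 4x4 template: 1s trace a curved "banana" shape.
BANANA = [
    0, 0, 1, 1,
    0, 1, 1, 0,
    1, 1, 0, 0,
    1, 0, 0, 0,
]
# Detector weights: reward pixels on the shape, penalize pixels off it.
W = [1.0 if v else -0.5 for v in BANANA]
LAMBDA = 0.1  # L2 penalty, a crude stand-in for a natural-image prior


def score(x):
    """How banana-like the detector finds x, minus the L2 penalty."""
    return sum(w * xi for w, xi in zip(W, x)) - LAMBDA * sum(xi * xi for xi in x)


def gradient(x):
    """Partial derivatives of score: w_i - 2 * LAMBDA * x_i."""
    return [w - 2 * LAMBDA * xi for w, xi in zip(W, x)]


def cosine(a, b):
    """Cosine similarity: 1.0 means a and b point the same way."""
    dot = sum(p * q for p, q in zip(a, b))
    return dot / (math.sqrt(sum(p * p for p in a)) * math.sqrt(sum(q * q for q in b)))


def dream(x, steps=200, lr=0.1):
    """The detector stays fixed; only the image is nudged uphill on score."""
    for _ in range(steps):
        x = [xi + lr * gi for xi, gi in zip(x, gradient(x))]
    return x


rng = random.Random(0)
noise = [rng.uniform(-1.0, 1.0) for _ in W]  # start from random noise
dreamed = dream(noise)
print(f"similarity to banana: {cosine(noise, W):.2f} -> {cosine(dreamed, W):.2f}")
```

Without the penalty term the pixel values would grow without bound. The researchers note that plain optimization from noise works poorly on its own and needs a prior favoring natural-image statistics, such as correlated neighboring pixels; this toy does not model that.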

DeepDream seems to parallel our own ability to recognize patterns. Humans, too, function with a priori forms in their minds: archetypes they use to conceive and understand the world in forms already known to them. DeepDream approximates human cognition, “but it doesn’t do so in a particularly human way” (Auerbach). It is based on algorithms, not on biological neurons.

Although DeepDream runs on algorithms and code, its neural networks are trained: they are fed a vast amount of information from which they must learn associations and conditional relationships. The networks can produce correct associations, as the photo above shows, but their output can also be freer and less constrained; we could even say more surrealistic. As the researchers describe it,

“we train networks by simply showing them many examples of what we want them to learn, hoping they extract the essence of the matter at hand (e.g., a fork needs a handle and 2-4 tines), and learn to ignore what doesn’t matter (a fork can be any shape, size, color or orientation). But how do you check that the network has correctly learned the right features? It can help to visualize the network’s representation of a fork” (Mordvintsev, Olah, and Tyka).

A trained network can then use what it has learned to detect those features in new images. The level of abstraction it reaches depends on its ‘layers’ of computational neurons: in the researchers’ account, early layers respond to simple features such as edges and corners, intermediate layers to shapes and components, and the final layers to complete objects (Mordvintsev, Olah, and Tyka). Networks can have more than 20 layers. Although the learning such a multi-layered network can achieve is outstanding, a danger lurks. The network’s functions become complex, and its relationships and nodes become largely independent of one another. Its behavior becomes unpredictable and hard to manage: the structures and relationships can only be observed, and the programmer can no longer manipulate or change them without severe consequences for other functions of the software.
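DeepDream’s own trick, as the researchers describe it, is to feed the network an existing image, pick a layer, and ask the network to enhance whatever that layer detected: “Whatever you see there, I want more of it!” (Mordvintsev, Olah, and Tyka). The minimal Python sketch below shows that loop under heavy assumptions: a hypothetical one-layer ReLU “network” with hand-picked weights stands in for a deep pretrained model, and the gradient is derived by hand rather than by backpropagation through many layers.

```python
# Toy sketch of a DeepDream-style loop: amplify what a chosen layer detects.
# ASSUMPTIONS: a hypothetical one-hidden-layer ReLU "network" with hand-picked
# weights stands in for a deep pretrained model; the gradient is derived by hand.

# Hypothetical weights: 3 feature detectors over a 4-pixel "image".
W = [
    [1.0, -1.0, 0.0, 0.0],   # fires when pixel 0 is brighter than pixel 1 (an edge)
    [0.0, 0.0, 1.0, 1.0],    # fires on brightness in the right half
    [-1.0, 0.5, 0.5, -1.0],  # fires on a bright center with dark ends
]


def layer(x):
    """Activations h = relu(W x) of the chosen layer."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in W]


def objective(x):
    """DeepDream-style objective: half the squared L2 norm of the activations."""
    return 0.5 * sum(h * h for h in layer(x))


def gradient(x):
    """d objective / d x = W^T h (inactive ReLUs have h = 0 and contribute nothing)."""
    h = layer(x)
    return [sum(W[j][i] * h[j] for j in range(len(W))) for i in range(len(x))]


def dream(image, steps=20, step_size=0.05):
    """Repeatedly nudge the image so the layer's activations grow."""
    x = list(image)
    for _ in range(steps):
        g = gradient(x)
        scale = sum(abs(gi) for gi in g) / len(g)  # mean |gradient|
        if scale == 0:
            break  # the layer detects nothing, so there is nothing to amplify
        x = [xi + step_size * gi / scale for xi, gi in zip(x, g)]
    return x


image = [0.6, 0.4, 0.5, 0.5]  # a faint pattern the detectors partly respond to
dreamed = dream(image)
print(layer(image), "->", layer(dreamed))
```

Dividing each step by the mean absolute gradient keeps the step size independent of how strongly the layer fires; this normalization is an assumption modeled loosely on common DeepDream implementations. In a real deep network, the choice of layer matters: the researchers report that lower layers tend to produce strokes and simple ornament-like patterns, while higher layers produce complex features or even whole objects.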

Machine’s ‘Optical Unconscious’ and Control

Walter Benjamin coined the term ‘optical unconscious’ in 1931 to describe what the camera adds to human vision: details that the naked eye cannot perceive and that would otherwise remain unseen.

“In the ‘expanding field of photography’, the eye is likely to encounter images that exceed its capacities of reading. It has to learn how to read” (Elo).

Google’s researchers asked how the machine sees. DeepDream’s images are representations of the machine’s optical unconscious. They depict something that is still beyond the machine’s understanding, something it has to learn how to read. Machine learning has made significant progress, and despite the limitations described above, DeepDream offers a glimpse of the future of AI.

We should not forget, though, that what became a viral image generator is part of a multinational company’s pursuit of better techniques for automatically ‘capturing’ the data in photos and images, a pursuit with political implications ranging from privacy to more effective censorship. All these advances in AI should be approached within the broader context of societies of control (Deleuze).

References

Auerbach, David. “Google DeepDream: It’s Dazzling, Creepy, and Tells Us a Lot about the Future of A.I.” Slate. N.p., 23 July 2015. Web. 30 Oct. 2015.

Deleuze, Gilles. “Postscript on the Societies of Control.” October 59 (1992): 3–7. Print.

Elo, Mika. “Walter Benjamin on Photography: Towards Elemental Politics.” Transformations 15 (2007): n. pag. Web. <http://www.transformationsjournal.org/journal/issue_15/article_01.shtml>.

Mordvintsev, Alexander, Christopher Olah, and Mike Tyka. “Inceptionism: Going Deeper into Neural Networks.” Google Research Blog. N.p., n.d. Web. 30 Oct. 2015. <http://googleresearch.blogspot.co.uk/2015/06/inceptionism-going-deeper-into-neural.html>.

Saleh, Babak, and Ahmed Elgammal. “Large-Scale Classification of Fine-Art Paintings: Learning The Right Metric on The Right Feature.” arXiv:1505.00855 [cs] (2015): n. pag. arXiv.org. Web. 11 Sept. 2015.