Bad News for Facebook

In the past couple of weeks, Facebook's content-monitoring policy and the changes made to its Trending Topics section have made the headlines over and over again. These headlines have led to many opinion pieces and several debates around censorship, bias, and the use of algorithms, all of which raise the question of Facebook's place within the media.

Facebook has repeatedly denied being a media company and has chosen instead to call itself a tech company, on the grounds that it doesn’t create content but connects people and gives them a platform. However, the news of the past weeks has brought the debate back, and despite Zuckerberg’s announcement, the majority of the media world and the public seem to agree that Facebook has in fact become a crucial player in the media field.

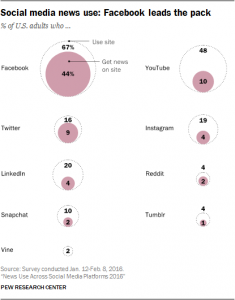

Facebook has 1.7 billion users, two-thirds of whom (66%) get their news from the site, making it the biggest player in the field, as the accompanying image comparing it with other social media platforms also shows. (Pew Research Center)

Let’s first take a look at the events of the past weeks.

The first incident, which provoked an outcry from the public as well as from journalists and academics, concerned the Napalm Girl photo taken by Nick Ut in 1972 during the Vietnam War. The photo depicts a nine-year-old girl running away naked after a napalm attack. It holds a remarkable place in history because it brought global attention to what was happening in Vietnam, and it won the Pulitzer Prize. It all started with Facebook deleting several copies of the iconic photograph and suspending the accounts of users “after a Norwegian newspaper editor posted the picture as part of a series on war photography.” (Fortune) The editor-in-chief of the newspaper Aftenposten then wrote an open letter to Mark Zuckerberg. (Aftenposten) Facebook’s rules had led to the photo being banned because of the child’s nudity; however, after the outrage on social media, Facebook reinstated the photo, saying that in this case “the image’s status as an iconic image of historical importance and the value of permitting users to share it outweighs the value of protecting the community by removal.” (Fortune)

However, this raises questions: Who (or what) gets to decide these “values”? The next time a similar case occurs, will Facebook consider these values beforehand or wait for the public to protest online? Zuckerberg has been called the “world’s most powerful editor,” but is he qualified? Doesn’t this show that Facebook needs to establish itself within the media environment and take on an editorial role in order to prevent similar incidents in the future?

Today, control over distribution is just as important as the creation of content, so Facebook needs to realize that a platform for 1.7 billion users that involves elements of media also comes with its responsibilities.

“This photo headlined the New York Times, changed the course of the war. Would it be buried in a Facebook only world?” –Zeynep Tufekci on Twitter

The second incident, which brought Facebook a lot of media attention and several damaging headlines, followed the recent change to the Trending Topics section. A Gizmodo article that went viral featured former Facebook workers saying they had routinely suppressed conservative news. Facebook denied any bias but promised changes. (TechCrunch) So when the news came that Facebook had fired many journalists and would replace written descriptions of the news with hashtags alone, relying on an algorithm and tech staff, this was seen as Facebook’s attempt to solve the bias issue it had faced; Facebook, however, said the change was made in order to scale globally. If the problem is in fact the possible existence of bias, it should be remembered that algorithms are biased too: they are created by humans, who are biased, and “these algorithms are not neutral, and that they encode political choices, and that they frame information in a particular way.” (Culture Digitally)

Unfortunately for Facebook, the algorithm made many mistakes from the start, surfacing false news and conspiracy theories as well as inappropriate content. In the course of a single weekend, the new automated system promoted “a false story about Fox News host Megyn Kelly, a controversial piece about a comedian’s four-letter word attack on rightwing pundit Ann Coulter, and links to an article about a video of a man masturbating with a McDonald’s chicken sandwich.” (The Guardian) Given Facebook’s enormous reach, mistakes such as false news and accusations can be very dangerous because they could have a real impact on public opinion. All of these false stories came from non-credible sources. They could have been avoided if the process had not relied on the algorithm alone but had also involved editors, or “news curators” as they are titled at Facebook: under the old guidelines the news curators had a list of trusted sources to use, which the algorithm did not. (The Guardian) If Facebook is planning to continue with this automated system, it should come up with a quality-control mechanism; it has announced that it is working to make “detection of hoax and satirical stories quicker and more accurate.” (CBS News)

After all these incidents, Facebook needs to admit the large role it plays in media and take on the responsibilities this brings, such as journalistic principles, quality control, morality, accuracy, ethics and fairness. Whether all this can be achieved with an algorithm is another question.

Bibliography

Coldewey, Devin. “Facebook Denies Bias in Trending Topics, but Vows Changes Anyway.” TechCrunch. N.p., 23 May 2016. Web. 18 Sept. 2016. https://techcrunch.com/2016/05/23/facebook-denies-bias-in-trending-topics-but-vows-changes-anyway/.

Gillespie, Tarleton. “Can an Algorithm Be Wrong?” Culture Digitally. N.p., 19 Oct. 2011. Web. 18 Sept. 2016. http://culturedigitally.org/2011/10/can-an-algorithm-be-wrong/.

Gottfried, Jeffrey, and Elisa Shearer. “News Use Across Social Media Platforms 2016.” Pew Research Center. N.p., 26 May 2016. Web. 18 Sept. 2016. http://www.journalism.org/2016/05/26/news-use-across-social-media-platforms-2016/.

Gunaratna, Shanika. “Facebook Apologizes for Promoting False Story on Megyn Kelly in #Trending.” CBS News. CBS Interactive, 29 Aug. 2016. Web. 18 Sept. 2016. http://www.cbsnews.com/news/facebooks-trending-fail-news-section-reportedly-highlights-fake-news-on-megyn-kelly/.

Hansen, Espen Egil. “Dear Mark. I am writing this to inform you that I shall not comply with your requirement to remove this picture.” Aftenposten. N.p., 8 Sept. 2016. Web. 18 Sept. 2016. http://www.aftenposten.no/meninger/kommentar/Dear-Mark-I-am-writing-this-to-inform-you-that-I-shall-not-comply-with-your-requirement-to-remove-this-picture-604156b.html/.

Nunez, Michael. “Former Facebook Workers: We Routinely Suppressed Conservative News.” Gizmodo. N.p., 09 May 2016. Web. 18 Sept. 2016. http://gizmodo.com/former-facebook-workers-we-routinely-suppressed-conser-1775461006.

Thielman, Sam. “Facebook Fires Trending Team, and Algorithm without Humans Goes Crazy.” The Guardian. Guardian News and Media, 29 Aug. 2016. Web. 18 Sept. 2016. https://www.theguardian.com/technology/2016/aug/29/facebook-fires-trending-topics-team-algorithm.

Tufekci, Zeynep (zeynep). “This photo headlined the New York Times, changed the course of the war. Would it be buried in a Facebook only world?” 9 Sept. 2016. Tweet.

Zillman, Claire. “Sheryl Sandberg Apologizes for Facebook’s Removal of ‘Napalm Girl’ Photo.” Fortune. N.p., 12 Sept. 2016. Web. 18 Sept. 2016. http://fortune.com/2016/09/13/facebook-sheryl-sandberg-napalm-girl-photo/.