Artificial Intelligence Defeats People’s Privacy

Last week, a tweet by The Guardian’s writer David Shariatmadari showed how Google Maps tried to take a bullock’s privacy seriously by blurring its face. The bullock was thereby treated the same as humans when it comes to privacy. Police started blurring people’s faces in 1989 to protect their privacy, but last week researchers from the University of Texas published findings showing that neither human privacy nor this bullock’s privacy is guaranteed by the existing blurring technique. These researchers found that it is child’s play to identify blurred faces with the help of artificial intelligence (AI). As a result, the shelf life of existing privacy technologies is now in question.

Blurring technique

Blurring, and the closely related technique of pixelating, distort part of a photo, such as a face, a line of text or a license plate, so that viewers cannot see what is underneath. While we may laugh at Google’s concern for a bullock’s privacy, the incident also shows how fragile one’s privacy online has become. The blurring technique is used by multiple platforms, such as YouTube and Google, the latter of which claims that it blurs faces in Street View pictures to protect people’s privacy (Petersen 340). Yet this technique, which has been in use for 27 years, has now had its privacy benefits undermined by researchers. One of them, Vitaly Shmatikov, said that the team was surprised that the easiest thing they tried worked (Hess). To achieve this, they used artificial intelligence, a science often described as “programming computers to carry out tasks that would require intelligence if carried out by human beings” (Kumar 6).
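To make pixelating concrete, here is a minimal sketch in Python. It assumes a grayscale image stored as a list of rows of brightness values (0 to 255); the function name `pixelate` and the block size are illustrative choices, not the code Google or YouTube actually use. Each square block of pixels is replaced by its average brightness, which hides detail but follows a fixed rule that gives the same output every time it is run on the same input.

```python
def pixelate(image, block):
    """Return a pixelated copy of a grayscale image (illustrative sketch).

    `image` is a list of equally long rows of brightness values (0-255).
    Every block x block square is replaced by its integer average.
    Blocks at the right and bottom edges may be smaller.
    """
    height, width = len(image), len(image[0])
    result = [row[:] for row in image]  # copy so the original is untouched
    for top in range(0, height, block):
        for left in range(0, width, block):
            # Coordinates of every pixel that falls inside this block.
            cells = [(y, x)
                     for y in range(top, min(top + block, height))
                     for x in range(left, min(left + block, width))]
            average = sum(image[y][x] for y, x in cells) // len(cells)
            for y, x in cells:
                result[y][x] = average
    return result


# A made-up 4 x 4 "face" used only for illustration.
face = [
    [10, 20, 200, 210],
    [30, 40, 220, 230],
    [50, 60, 90, 100],
    [70, 80, 110, 120],
]
print(pixelate(face, 2))
# prints [[25, 25, 215, 215], [25, 25, 215, 215], [65, 65, 105, 105], [65, 65, 105, 105]]
```

The detail inside each block is gone, but the rule itself is simple and public: anyone who knows the block size can apply exactly the same transformation to other photos.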

Machine-learning techniques for dummies

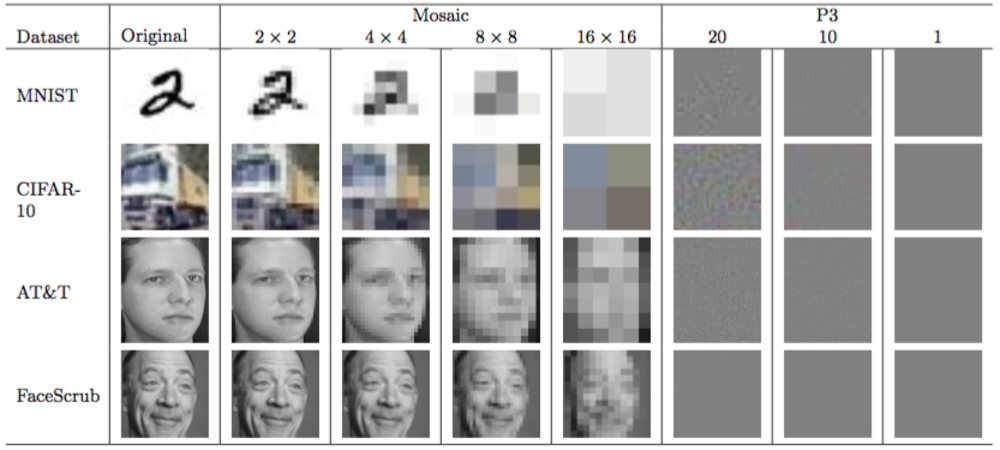

Although machine learning may sound advanced, the researchers stressed that it is actually easy to program a computer to reveal a blurred person’s identity. An example of the technique can be seen in Image 1.1. Wired reports that the team “found that mainstream machine learning methods—the process of ‘training’ a computer with a set of example data rather than programming it—lend themselves readily to this type of attack” (Newman). In short, these techniques are available to ordinary people like you and me. Several people, such as IT professional Vladimir Yuzhikov, showed a few years ago that it is possible to restore a blurred photo. Yuzhikov even published the source code needed for the restoration.

Image 1.1. Reconstruction of blurred photos by researchers Richard McPherson, Reza Shokri and Vitaly Shmatikov.

New media scholars Hoelzl and Marie rightly argue that a blurred photo is “still a medium reflexive image, an image that reflects the means by which the image has been processed (algorithms) and distributed (The Web)” (90). Their explanation shows exactly why the photos are easy to reconstruct: the blurring itself is done by algorithms. Algorithms are often described as a technology that operates through easily repeatable rules and instructions that lead to a similar result each time they are carried out (Verhulsdonck and Limbu 72). These rules and instructions are written by humans, and that is the main reason why it is easy to teach a computer to reconstruct the photo: you only have to think the other way around.
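The idea of thinking “the other way around” can be illustrated with a deliberately simplified sketch. This is not the researchers’ method: McPherson, Shokri and Shmatikov trained neural networks, whereas this toy example swaps in plain nearest-neighbour matching. It assumes the attacker has unblurred reference photos of a few candidate people (the names and pixel values below are invented) and knows the block size that was used. Because pixelation is a fixed rule, the attacker can pixelate every reference photo the same way and pick the candidate whose result lies closest to the published, pixelated target.

```python
# Toy illustration only: nearest-neighbour matching, NOT the deep-learning
# attack from the paper. All names and pixel values are hypothetical.

def pixelate(image, block):
    """Replace each block x block square of a grayscale image by its average."""
    height, width = len(image), len(image[0])
    result = [row[:] for row in image]
    for top in range(0, height, block):
        for left in range(0, width, block):
            cells = [(y, x)
                     for y in range(top, min(top + block, height))
                     for x in range(left, min(left + block, width))]
            average = sum(image[y][x] for y, x in cells) // len(cells)
            for y, x in cells:
                result[y][x] = average
    return result


def distance(a, b):
    """Sum of squared differences between two equally sized images."""
    return sum((pa - pb) ** 2
               for row_a, row_b in zip(a, b)
               for pa, pb in zip(row_a, row_b))


def identify(blurred, references, block):
    """Pixelate every known reference photo with the same rule and
    return the name whose result is closest to `blurred`."""
    return min(references,
               key=lambda name: distance(pixelate(references[name], block), blurred))


# Hypothetical reference photos of three people (4 x 4 pixels each).
references = {
    "alice": [[10, 20, 200, 210], [30, 40, 220, 230],
              [50, 60, 90, 100], [70, 80, 110, 120]],
    "bob":   [[200, 190, 15, 5], [210, 180, 25, 35],
              [100, 90, 60, 50], [120, 110, 80, 70]],
    "carol": [[128, 128, 128, 128], [128, 128, 128, 128],
              [128, 128, 128, 128], [128, 128, 128, 128]],
}

# A slightly different photo of alice, pixelated before it was published.
new_photo_of_alice = [[12, 18, 205, 207], [33, 41, 215, 228],
                      [48, 62, 95, 98], [72, 79, 108, 125]]
target = pixelate(new_photo_of_alice, 2)
print(identify(target, references, 2))  # prints alice
```

The sketch can only choose among people the attacker already has photos of. It identifies a face rather than recreating it, which is the same limitation the researchers’ work still had.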

Reconstruction of photos

However, the technique is not (yet) advanced enough to reconstruct a photo from scratch. As Wired notes, the researchers have not found a way to actually recreate the photos (Newman). For now, it is only possible to identify the objects or faces in a photo, but it seems only a matter of time before researchers find a way to recreate them as well. This view is supported by, among others, machine learning researcher Lawrence Saul, who also argues that the blurring technique should be abandoned, because the researchers could guess the right face at least 40 percent of the time (Newman).

Although 40 percent may not sound threatening, it does undermine the techniques used by Google Maps and YouTube, among others. Shmatikov emphasizes this by noting that his team was able to defeat three privacy protection technologies, one of which YouTube uses (Newman). Luckily for us, the researchers intended their research as a wake-up call for privacy and security platforms, because AI should not be ignored when it comes to those topics.

Problem-solving

The researchers were also kind enough to suggest a short-term solution, namely covering sensitive areas with black boxes. This solution, which is already used for images of criminals, is also an algorithmic technology. Once again, the weakness lies in the use of algorithms, and it is only a matter of time before a (new) group of researchers can defeat this newer technology. Therefore, the development of privacy technologies is a big problem that cannot be solved with one easy solution. Perhaps it is time to let go of the idea of privacy on the Web.

References

Hess, Peter. “Researchers Train AI To Defeat Face Blurring Technologies.” Popular Science. 2016. 15 September 2016. <www.popsci.com/researchers-train-ai-to-defeat-face-blurring-technologies>.

Hoelzl, Ingrid, and Rémi Marie. “The Operative Image (Google Street View: The World as Database).” Softimage: Towards a New Theory of the Digital Image. Chicago: The University of Chicago Press, 2015. 81 – 110.

Kumar, Ela. “Introduction.” Artificial Intelligence. New Delhi: I.K. International Publishing House, 2008. 1 – 27.

McPherson, Richard, Reza Shokri and Vitaly Shmatikov. Defeating Image Obfuscation with Deep Learning. 2016. 15 September 2016. <https://arxiv.org/abs/1609.00408>.

Newman, Lily Hay. “AI Can Recognize Your Face Even If You’re Pixelated.” Wired. 2016. 15 September 2016. <www.wired.com/2016/09/machine-learning-can-identify-pixelated-faces-researchers-show/#slide-1>.

Verhulsdonck, Gustav, and Marohang Limbu. Digital Rhetoric and Global Literacies: Communication Modes and Digital Practices in the Networked World. Hershey: IGI Global, 2014.