Inquiring polarisation: re-imagining YouTube as a diplomatic mediator

The extent of political polarisation in large parts of the Western world seems to be such that the disparity between one point of view and another is not just difference, but enmity. Some have demanded that Hillary Clinton be put in jail, while Donald Trump is, to others, representative of one of the greatest decadences of American politics. Wilders’ and Hofer’s rise to power is the sign of a liberation to some, and the failure to suppress Europe’s most violent political ideals to others. The term “peace” in Colombia seems to be associated with as much hope as it is with revenge. Parties experience perfectly parallel versions of history, and thus of reality (Schmitt 1962).

To think of this phenomenon as something “new” leaves us to wonder about its contemporary causes, such as new aspects of our very access to, and engagement with, ideas and information. New media, and particularly major platforms such as YouTube, have often been seen as posing new challenges to traditional ways of tackling this problem (Rieder 2009).

YouTube, a Google subsidiary, is the second-largest search engine in the world and, like its neighbours in Silicon Valley, often embraces its role as a global infrastructure allowing richer democratic dialogue (Gillespie 2010). But with the success of Google’s PageRank, Facebook’s NewsFeed and custom-made interfaces to access and post information, the platform has, since 2010, specialised in engineering personalised ways of consuming and posting information (Levene 2010).

The fact that information is processed by extremely sophisticated algorithms delivering tailor-made results to every user’s search queries, and with such astute mastery of circumstances, leaves us to question the extent of YouTube’s responsibility in preventing users from being exposed to politically differing, if not purely random, information.

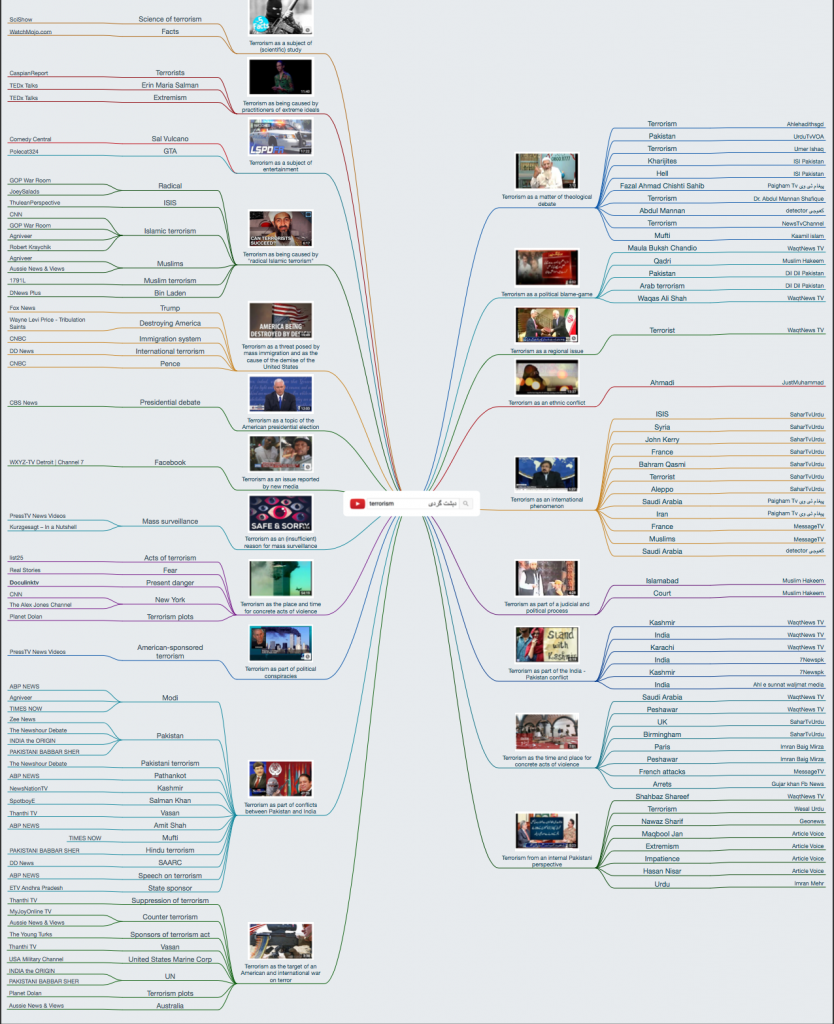

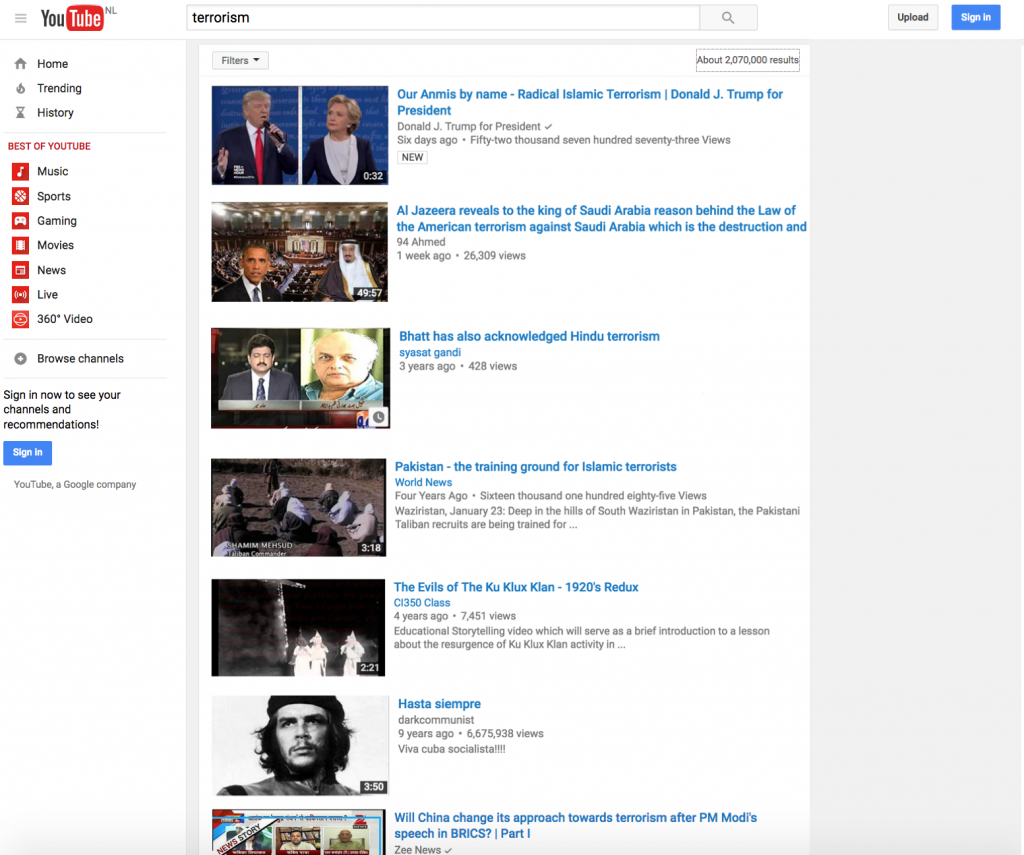

This project seeks to examine the role of YouTube’s search ranking algorithm in polarising political dialogue, and how it could be “tweaked” so that information is accessed in a more diplomatic fashion. By “diplomatic fashion”, we mean that search results should be suggestive of more diverse and less partial ideas. To do so, we compare a YouTube query on “terrorism” in Urdu and in English respectively, and then envisage how the platform could alter this algorithm to distribute information in such a way that Urdu- and English-speaking parties would be able to mediate each other’s points of view on the issue of terrorism.

The issue of terrorism is especially polarising for Urdu and English speakers. In a sense, the difference in how each group accesses information on terrorism perpetuates the geo-political differences that so divide Western and Muslim populations, and the issue of terrorism, as organised by YouTube’s search ranking algorithm, is as vague and unpredictable as the very definition of the term.

Methodology and documentation

Our methodology was not without shortcomings. Obtaining a clear picture of how YouTube’s search ranking algorithm functions is notoriously difficult; Gillespie and Sandvig comment on how secretive the platform can be about its internal mechanisms in the face of competitors (Gillespie 2009, Sandvig 2014). Since the company’s release of a paper on its recommendation system in 2010, hard and fast information on YouTube’s principal algorithms has been conspicuously absent (see Davidson et al. 2010 for the original paper). The information we collected on the algorithm thus consists of inferences drawn from the company’s own paper releases and from assessments by third-party experts and consultants who have had to study the platform for various reasons (third-party sources included Snickars and Vonderau 2009, blog posts such as Gielen and Rosen’s and Giamas’, and an interview with YouTube’s chief engineer Cristos Goodrow).

Collecting data on Urdu and English search results with the Digital Methods Initiative’s YouTube Data Tools and the Issue Discovery Tool allowed us to break video metadata (titles, channels) down into more concise terms constitutive of video metadata discourses. However effective these tools have proven to be, some terms are omitted, which required us to manually reconstruct the discourses in question in the visualisation we judged most appropriate.
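To illustrate the kind of term extraction described above, here is a minimal Python sketch. It is not the Digital Methods Initiative tooling itself: the stop-word list, the function name `term_frequencies` and the sample titles are all assumptions made for illustration.

```python
import re
from collections import Counter

# Hypothetical stop-word list; a real analysis would need a fuller,
# language-specific one for both English and Urdu.
STOP_WORDS = {"the", "a", "an", "of", "in", "on", "and", "to", "is", "for"}


def term_frequencies(titles):
    """Count how often each non-stop-word term appears across video titles."""
    counts = Counter()
    for title in titles:
        # \w+ matches runs of Unicode letters and digits, so non-Latin
        # scripts are split into terms too (diacritics may need extra care).
        for term in re.findall(r"\w+", title.lower()):
            if term not in STOP_WORDS:
                counts[term] += 1
    return counts


# Invented sample titles, standing in for collected search results.
sample_titles = [
    "The truth about terrorism",
    "Terrorism and the war on terror",
    "War on terror explained",
]
frequencies = term_frequencies(sample_titles)
print(frequencies.most_common(3))
```

A count like this only surfaces recurring terms; grouping them into discourses remains the manual, interpretive step mentioned above.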

We also grappled with the notion of providing “diplomatic solutions” without being paternalistic or taking an active editorial stance.

How, then, can YouTube’s search ranking algorithm be deemed to be polarising? Answering this question led us to examine how this algorithm functions and deduce its role in shaping our English and Urdu search results to the query on “terrorism”.

The role of YouTube’s search ranking algorithm in polarising results

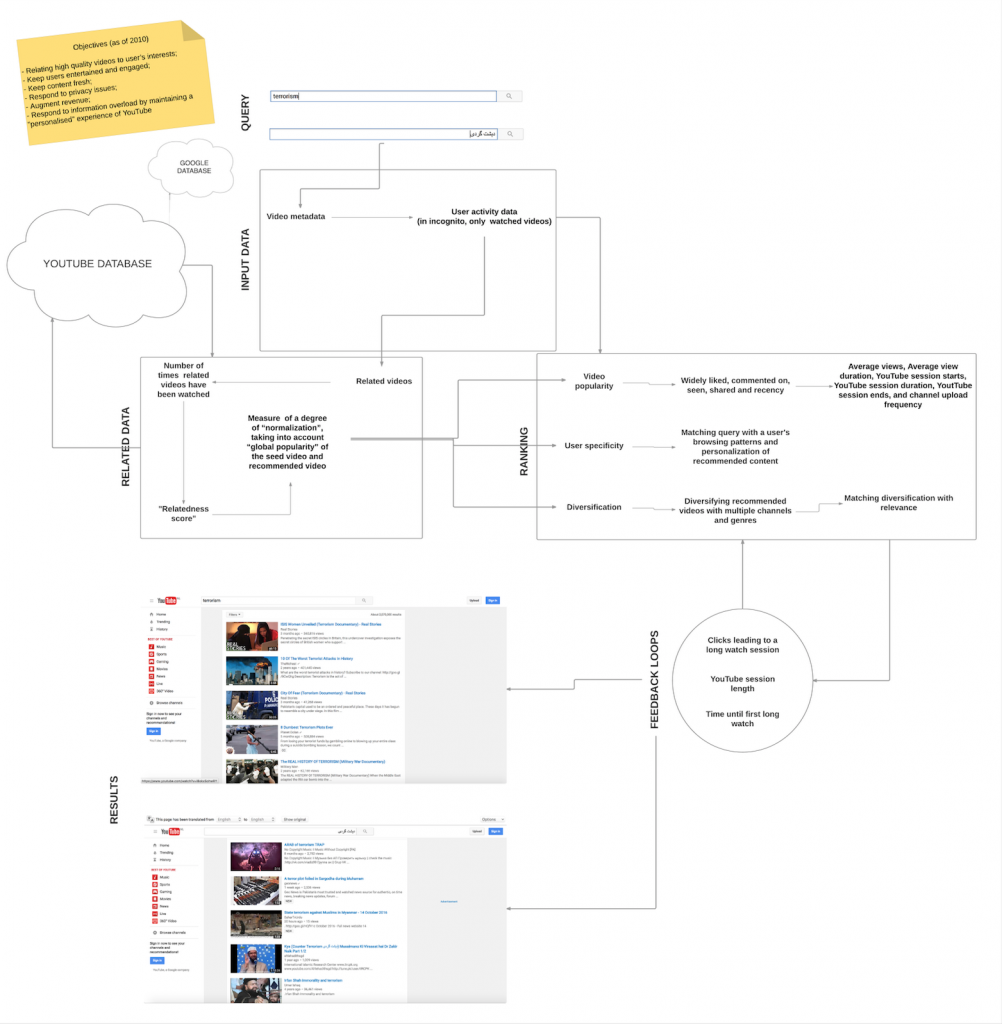

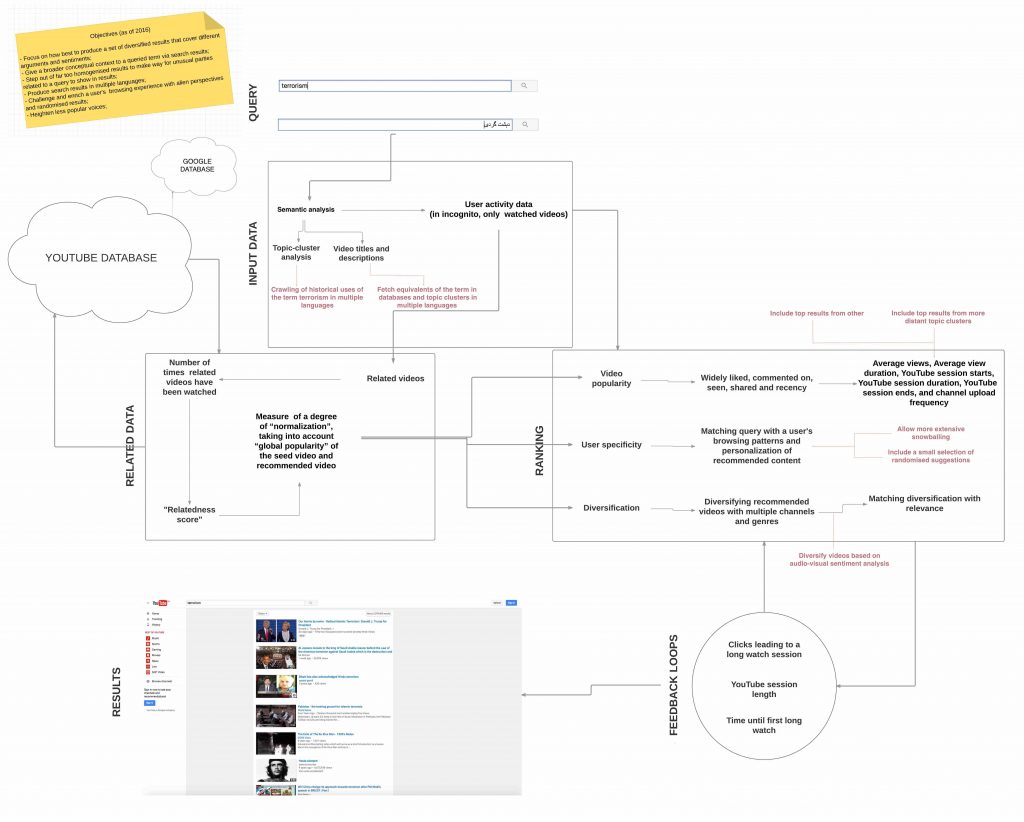

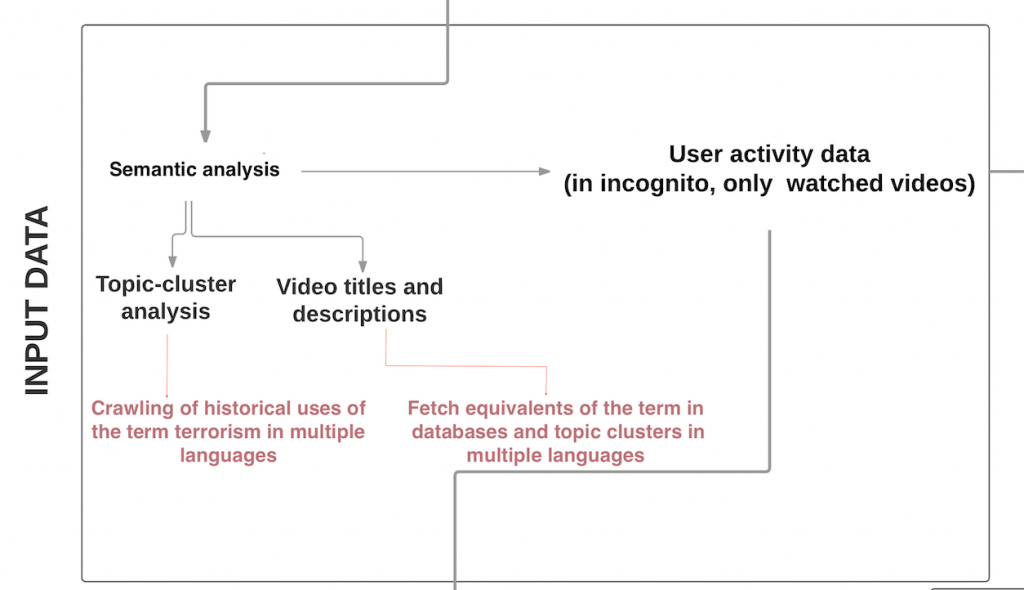

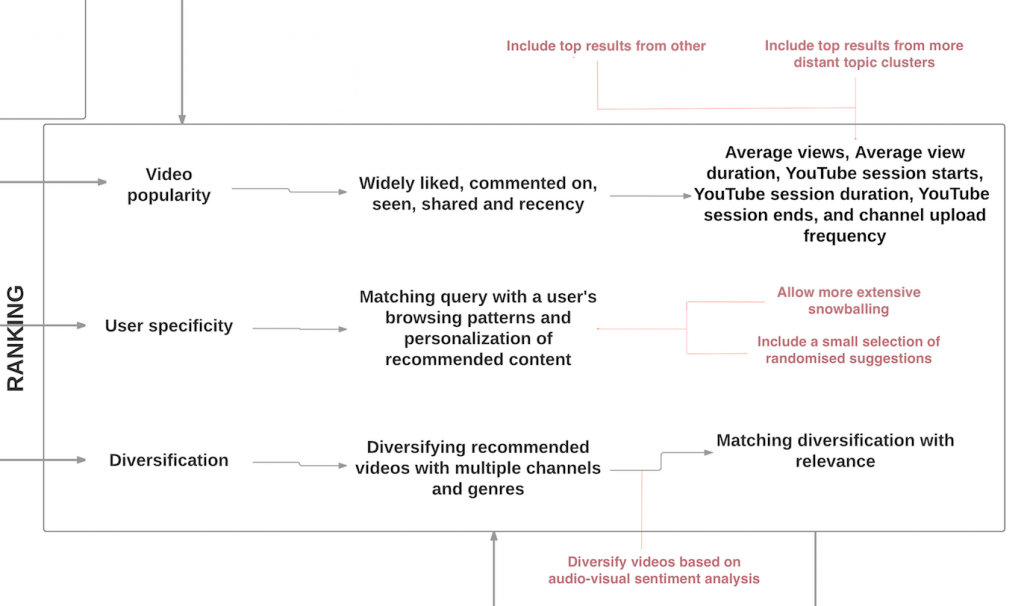

Some of YouTube’s engineers present the platform’s search ranking and recommendation system as an important realisation of a few new objectives they set out in 2010 (Davidson et al. 2010). To prevent information from being too scattered and irrelevant, search ranking needs to associate users with the results most relevant to them (ibid., 293).

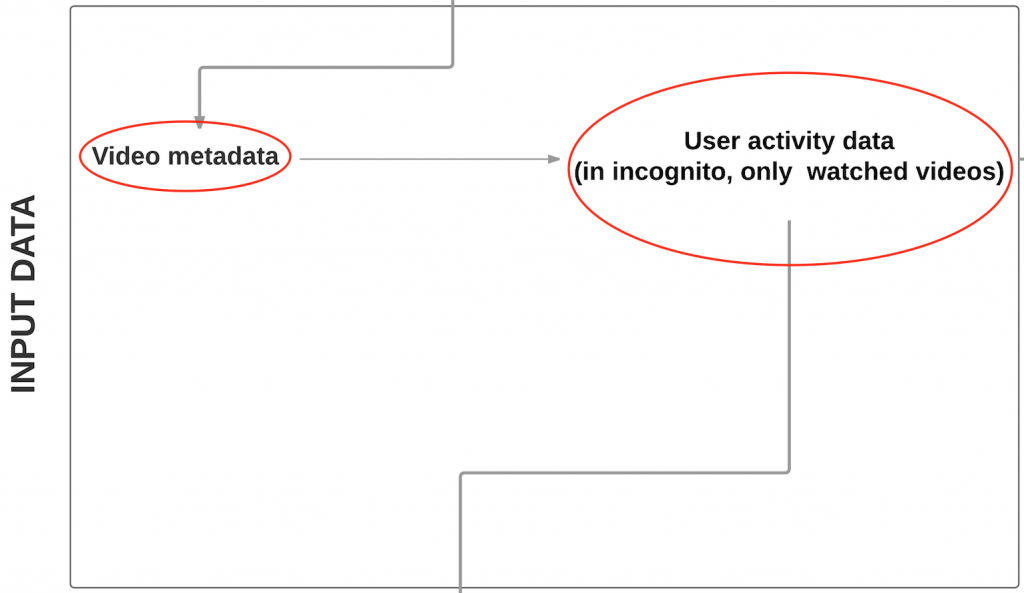

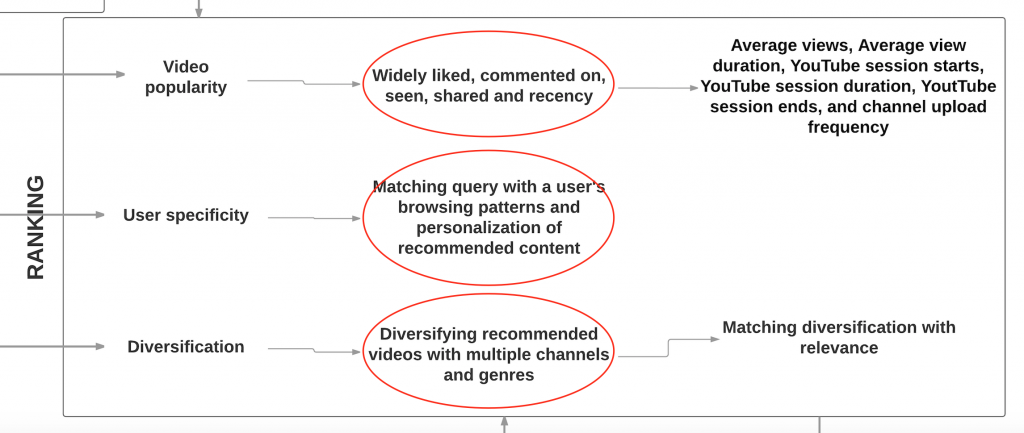

Pinpointing such results by sorting through the semantic data of the myriad videos closest to the query term is the first of many steps the algorithm takes to choose which videos to rank (Davidson et al. 2010, 293). In a second step, videos are ranked in order of relevance and affinity with a user’s browsing history, which, being linked to Google’s database, includes data tracked across the entire Google platform. Videos are then sorted by global popularity (watch time) amongst users and are diversified across genres and channels (Davidson et al. 2010, 294).

Fig 1. A sketch of YouTube’s search ranking algorithm
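The steps described above can be approximated in a few lines of Python. This is only a toy sketch under our own assumptions, not YouTube’s actual implementation: the `Video` fields, the substring match standing in for semantic analysis, the `user_topics` set standing in for browsing history, and the per-channel cap standing in for diversification are all hypothetical simplifications.

```python
from dataclasses import dataclass


@dataclass
class Video:
    title: str
    channel: str
    topic: str
    watch_time: float  # stand-in for global popularity


def rank(query, videos, user_topics, max_per_channel=1):
    """Toy four-step ranking: match, personalise, sort by popularity, diversify."""
    # Step 1: keep videos whose title matches the query term.
    candidates = [v for v in videos if query.lower() in v.title.lower()]
    # Steps 2 and 3: prefer topics found in the user's history,
    # then order by watch time within each group.
    ordered = sorted(
        candidates,
        key=lambda v: (v.topic in user_topics, v.watch_time),
        reverse=True,
    )
    # Step 4: diversify by capping the number of results per channel.
    per_channel = {}
    results = []
    for video in ordered:
        if per_channel.get(video.channel, 0) < max_per_channel:
            results.append(video)
            per_channel[video.channel] = per_channel.get(video.channel, 0) + 1
    return results


# Invented catalogue for illustration only.
catalogue = [
    Video("Terrorism report", "NewsA", "news", 900),
    Video("Terrorism debate", "NewsA", "news", 700),
    Video("History of terrorism", "DocuB", "history", 500),
    Video("Cooking pasta", "FoodC", "food", 999),
]
results = rank("terrorism", catalogue, user_topics={"history"})
print([v.title for v in results])
```

Even in this reduced form, the order of operations matters: a less-watched video reaches the top only because it matches the user’s history, which is the personalising effect discussed in the following sections.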

Spot the terrorist: polarisation between English and Urdu video discourses on terrorism

The first 50 search results for the query “terrorism” in English and Urdu were polarised in ways that reflected the intellectual history of the term “terrorism” peculiar to each of these languages and their speakers, histories which seemingly cannot cross over into one another in inoffensive terms.

English search results were predominantly framed around the notion of a dangerous and violent global phenomenon caused and perpetrated by “radical Islam”, an opinion often articulated by American conservative (news) channels (Fox News, GOP War Room). Other left- and centre-leaning channels (CNN, CNBC, CBS) insist that terrorism be attributed only to “extremist” thinkers, while outliers included results that framed terrorism as part of political conspiracies or as a subject of entertainment.

Another voice taking up considerable space in our English results, itself particularly antagonistic to our Urdu results, is that articulated by Indian channels tending to link terrorism to Pakistan. These results often bore a slant against Pakistan, articulating a predominantly Indian perspective on events and ideologies.

https://www.youtube.com/watch?v=e9aMSUFeslU

Urdu results “respond” from a more defensive stance. “Terrorism” is less an issue of who, when and why than an attempt to understand a concept somewhat ambiguous and alien to Pakistani history. The term was contextualised in a debate rooted in the geo-political conflicts involving Pakistan; videos referred to domestic terrorist attacks and addressed these as an internal issue, sometimes on localised ethnic or denominational grounds, sometimes on theological grounds.

https://www.youtube.com/watch?v=80pbv4MirdU

Still, some videos read almost as a direct response to Indian allegations that Pakistan is involved in cross-border terrorism, while others included a rhetoric of terrorism as a war against the West.

Fig 2. Search results to the query “terrorism” in English (left) and Urdu (right)

How does YouTube’s search ranking system contribute to this polarisation?

YouTube’s search ranking algorithm may have a role in organising such results in a number of ways, but it is important to note that YouTube’s sorting and filtering mechanisms are not necessarily problematic in themselves. Their particular implementation and its consequences are what we focus on.

The fact that YouTube’s search ranking algorithm sorts videos based on semantic data (titles, tags, descriptions) makes language a first element of discrimination. A query in English will give us a range of ideas and information belonging to a worldview organised and articulated by English speakers (who, in this case, were American and Indian) (Rogers 2013, Kavoori 2011, Danet and Herring 2010, Levene 2010, Holt 2004).

Additionally, the very term “terrorism” is itself discriminatory: the fact that it came to be most widely used in the context of the US’ war on terror places its uses within paradigms proper to this historical instance, its actors, allies and enemies (Wolfsfeld 2004). A term like “terrorism” is a profoundly complex ensemble of concepts, and such nuances are often lost in search results due to the ranking system’s favouring of popularity metrics.

Fig 3. One of the first ranking mechanisms sorts videos on the basis of semantic data and user activity data

Prioritising a user’s personal affinities to sort search results may also have contributed to polarising our results. Ever since Google celebrated the success of PageRank in 1998, the “echo-chamber effect” has been a long-documented problem attributed to the fast emergence of personalised access to data (Barberá 2015, Pariser 2012, Jamieson 2010). The danger in this effect lies not only in being shut off from differing viewpoints, but also in lacking the critical disposition to confront one’s own thoughts (Morozov 2013). And, because personalising mechanisms encompass not only search results but also the browsing of videos through YouTube’s (and Google’s) personalised recommendations across the web, the chances that a user steps out of homogeneity are slim, and users are shielded from engaging with purely random and different content (Helmond 2015).

The categorisation of search results by order of popularity extends this problem. Ranking videos according to how many people engage with them over a period of time suggests that dominant and popular discourses will always dominate one’s results, pushing “quieter” voices out of sight and perpetuating a vicious cycle of dominance and well-established power-plays (Yardi and boyd 2010).

Fig 4. The second ensemble of mechanisms to rank search results gives priority to video popularity, a user’s personal affinities and limited diversification

And, although the algorithm’s diversification technique is a notable effort not to enclose users in overly homogenised information spheres, it does not respond to our need to have information distributed equally across channels and languages.

Diversifying the YouTube algorithm and interface

To date, both academic and digital actors have come up with various solutions to the problem of political polarisation online across multiple disciplines (communication studies, political science, diplomacy, and media studies), but have not looked specifically at how algorithms could be remediated to address this problem (Prina et al. 2013, Ahmed and Forst 2005, Holt 2004, Anderson et al. 2004, Prosser 1985).

Most academic debates on the issue have stalled over whether political polarisation does or does not occur (Bakshy et al. 2015, Barberá 2015, Gentzkow and Shapiro 2010, Prior 2013). Of those that do indicate a rise in polarisation, some focus exclusively on general political reform (Berman 2016, Prior 2013, Pisani-Ferry 2016), and others on experimental international initiatives that do not quite take into account the interests of more dominant international actors. Sandvig et al. propose that algorithms be “audited” via multiple strategies “both anti-capitalist and pro-competition”, involving researcher, user and platform in a collaborative process to prevent algorithms from discriminating against users based on gender, race and other identity categories, but ignore the problem of polarisation (Sandvig et al. 2014, 13). Google itself has come up with its own suggestion to counter terrorism online, but only to redirect users lured by ISIS towards content “showing them a better way”.

Minor tweaks for greater solutions

As (hypothetical) third, academic parties, we propose that the algorithm’s semantic, user and popularity-centred sorting be tweaked to realise new “diplomatic” objectives without significantly interfering with YouTube’s own commercial interests. Such modifications are an effort to make way for diversity (plurality in viewpoints) and better historical contextualisation, as described below.

Fig 5. Our tweaking of YouTube’s search ranking algorithm as giving way to diplomatic solutions via new search results

To address the problem of semantic analysis discussed above, we propose that other video metadata be sorted across multiple languages, through automatic title translation and video subtitling in the language the user is using or is known to have used (Danet and Herring 2010). A multilingual solution attempts to broaden the ideological and cultural context of a specific search query without favouring any specific actor or ideology (Alatis 1993). To nuance already-discriminating query terms such as “terrorism”, we suggest that semantic analysis should also crawl other historical uses and associations of the queried term (Rogers 2013).

Fig 6. Our tweaking of YouTube’s semantic analysis and user activity data sorting mechanisms

To prevent results from being an ongoing reflection of a user’s browsing history, we propose that the algorithm’s user specificity sorting mechanism make way for a small margin of randomised results and more extensive snowball crawling techniques. Here, ultimately, we propose that YouTube encourage a user’s pursuit of curiosity, allowing them to break free from the blinkered worldview that they receive from their general YouTube browsing (Morozov 2013).

Fig 7. Our tweaking of YouTube’s video popularity, user specificity and diversification sorting mechanisms
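The “small margin of randomised results” proposed above could, for instance, look like the following Python sketch. The function name `with_random_margin`, the 20% margin used in the example and the video identifiers are assumptions chosen for illustration; the actual size of the margin and the pool to draw from would be design decisions for the platform.

```python
import random


def with_random_margin(ranked, pool, margin=0.1, seed=None):
    """Replace the tail of a personalised ranking with random outside items.

    `ranked` is the personalised result list, `pool` a wider set of
    candidates, and `margin` the share of slots given over to chance.
    """
    if not ranked:
        return []
    rng = random.Random(seed)
    n_random = max(1, round(len(ranked) * margin))
    # Keep the top of the personalised ranking untouched.
    kept = ranked[: len(ranked) - n_random]
    # Draw only from items the user would not otherwise have seen.
    outsiders = [item for item in pool if item not in ranked]
    picks = rng.sample(outsiders, min(n_random, len(outsiders)))
    return kept + picks


# Invented identifiers: ten personalised results and three outside videos.
personalised = [f"v{i}" for i in range(10)]
wider_pool = personalised + ["x1", "x2", "x3"]
mixed = with_random_margin(personalised, wider_pool, margin=0.2, seed=0)
print(mixed)
```

Replacing only the lowest-ranked slots leaves the bulk of the personalised results intact, which is one way of keeping the change minor with respect to the platform’s commercial interests.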

This proposition is extended to our tweaking of the video popularity sorting mechanism; here, we propose that results in other, automatically translated languages and results belonging to more distant topic clusters be given a place. The same stands for our alteration of the algorithm’s “diversification” mechanism: instead of only diversifying videos across genres and channels, an additional audio-visual sentiment analysis would allow the very content of the videos to be diversified (Kacimi and Gamper 2011).

Fig 8. A “diplomatic” YouTube interface
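As one possible reading of the proposal to give results in other languages a place, the Python sketch below merges per-language result lists in round-robin fashion. It assumes that translation and per-language ranking have already happened elsewhere; the function name `interleave_by_language` and the identifiers are hypothetical, and the sentiment-based diversification mentioned above is not covered here.

```python
from itertools import zip_longest


def interleave_by_language(results_by_language):
    """Round-robin merge so that each language contributes in turn."""
    merged = []
    # zip_longest pads shorter lists with None, which we skip.
    for group in zip_longest(*results_by_language.values()):
        merged.extend(item for item in group if item is not None)
    return merged


# Invented, already-ranked result lists for each query language.
by_language = {
    "en": ["en1", "en2", "en3"],
    "ur": ["ur1", "ur2"],
}
merged_results = interleave_by_language(by_language)
print(merged_results)
```

A strict alternation like this treats languages equally regardless of how many videos each has, which is a deliberate departure from popularity-based ordering.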

From “tech” to “media”: bearing civic responsibility for better political dialogue

In a sense, such solutions would also help substantiate YouTube and Google’s vision for a more open and transparent world if, at least in this case, YouTube embraced more transparency regarding its own activities and socio-technical role in our informational environments (Rieder 2009). Doing so would be a constructive way to engage civil society and political actors in critiquing the digital media they use and access information from, and would be the first of many steps towards fostering a less fragmented world.

References

Ahmed, Akbar S, and Brian Forst. After Terror: Promoting Dialogue among Civilizations. Cambridge; Malden, MA: Polity Press, 2005. Print.

Alatis, James E. Georgetown University Round Table on Languages and Linguistics (GURT) 1992: Language, Communication, and Social Meaning. Georgetown University Press, 1993. Print.

Anderson, Rob, Leslie A Baxter, and Kenneth N Cissna. Dialogue: Theorizing Difference in Communication Studies. Thousand Oaks, Calif.: Sage Publications, 2004. Open WorldCat. Web. 25 Sept. 2016.

Bakshy, E., S. Messing, and L. A. Adamic. “Exposure to Ideologically Diverse News and Opinion on Facebook.” Science 348.6239 (2015): 1130–1132. CrossRef. Web. 25 Sept. 2016.

Barberá, Pablo. “How Social Media Reduces Mass Political Polarization. Evidence from Germany, Spain, and the US.” Job Market Paper, New York University (2014): n. pag. Google Scholar. Web. 18 Sept. 2016.

Barbera, Pablo (New York University); Jost, John (New York University); Nagler, Jonathan (New York University); Tucker, Joshua (New York University); Bonneau, Richard (New York University). “Replication Data for: Tweeting from Left to Right: Is Online Political Communication More Than an Echo Chamber?” 2015. DataCite. Web. 12 Sept. 2016.

Berman, Russell. “What’s the Answer to Political Polarization in the U.S.?” The Atlantic 8 Mar. 2016. The Atlantic. Web. 25 Sept. 2016.

Bessi, Alessandro et al. “Users Polarization on Facebook and Youtube.” Ed. Tobias Preis. PLOS ONE 11.8 (2016): e0159641. CrossRef. Web. 21 Oct. 2016.

BBC Brasil. “Discurso de Bolsonaro Deixa Ativistas ‘estarrecidos’ E Leva OAB a Pedir Sua Cassação.” BBC Brasil. N.p., n.d. Web. 21 Oct. 2016.

Hughes, Roland, and Nick Bryant. “US Election 2016: Six Reasons It Will Make History.” BBC News 29 July 2016. www.bbc.com. Web. 21 Oct. 2016.

Chozick, Amy. “Hillary Clinton Calls Many Trump Backers ‘Deplorables,’ and G.O.P. Pounces.” The New York Times 10 Sept. 2016. NYTimes.com. Web. 21 Oct. 2016.

Computerphile. YouTube’s Secret Algorithm – Computerphile. N.p., 2014. Film.

Danet, Brenda, and Susan C Herring. The Multilingual Internet: Language, Culture, and Communication Online. Oxford; New York: Oxford University Press, 2007. Print.

Davidson, James et al. “The YouTube Video Recommendation System.” Proceedings of the Fourth ACM Conference on Recommender Systems. ACM, 2010. 293–296. Google Scholar. Web. 25 Sept. 2016.

Gentzkow, Matthew, and Jesse M. Shapiro. Ideological Segregation Online and Offline. National Bureau of Economic Research, 2010. National Bureau of Economic Research. Web. 14 Sept. 2016.

Giamas, Alex. “How YouTube’s Recommendation Algorithm Works.” InfoQ. N.p., 23 Sept. 2016. Web. 21 Oct. 2016.

Gillespie, T. “The Politics of ‘Platforms.’” New Media & Society 12.3 (2010): 347–364. CrossRef. Web. 25 Sept. 2016.

Gorvett, Zaria. “The Reasons Why Politics Feels so Tribal in 2016.” N.p., n.d. Web. 12 Sept. 2016.

Graham, David A. “‘Lock Her Up’: How Hillary Hatred Is Unifying Republicans.” The Atlantic 20 July 2016. The Atlantic. Web. 21 Oct. 2016.

Hayes, Danny. “Echo Chamber: Rush Limbaugh and the Conservative Media Establishment by Kathleen Hall Jamieson and Joseph N. Cappella.” Political Science Quarterly 124.3 (2009): 560–562. Wiley Online Library. Web. 21 Oct. 2016.

Helmond, Anne. “The Platformization of the Web: Making Web Data Platform Ready.” Social Media + Society 1.2 (2015): 2056305115603080. sms.sagepub.com. Web. 21 Oct. 2016.

Holt, Richard. Dialogue on the Internet: Language, Civic Identity, and Computer-Mediated Communication. Westport, Conn.: Praeger, 2004. Open WorldCat. Web. 25 Sept. 2016.

Kacimi, Mouna, and Johann Gamper. “Diversifying Search Results of Controversial Queries.” Proceedings of the 20th ACM International Conference on Information and Knowledge Management. New York, NY, USA: ACM, 2011. 93–98. ACM Digital Library. Web. 21 Oct. 2016. CIKM ’11.

Kavoori, Anandam P. Reading YouTube: The Critical Viewers Guide. New York: Peter Lang, 2011. Print.

Kroet, Cynthia. “Geert Wilders Tells US He’s Set to Become next Dutch Prime Minister.” POLITICO. N.p., 20 July 2016. Web. 21 Oct. 2016.

Levene, M. An Introduction to Search Engines and Web Navigation. 2nd ed. Hoboken, N.J: John Wiley, 2010. Print.

Gielen, Matt, and Jeremy Rosen. “Reverse Engineering The YouTube Algorithm.” Tubefilter. N.p., n.d. Web. 25 Sept. 2016.

“New World Summit: About.” New World Summit. N.p., n.d. Web. 21 Oct. 2016.

Ediciones El País. “Diario de Colombia.” EL PAÍS. N.p., 9 Dec. 2012. Web. 21 Oct. 2016.

Pariser, Eli. The Filter Bubble: How the New Personalized Web Is Changing What We Read and How We Think. Reprint edition. New York, NY: Penguin Books, 2012. Print.

Pisani-Ferry, Jean. “Comment répondre à la polarisation politique en Europe.” France Stratégie. N.p., 1 Nov. 2016. Web. 12 Sept. 2016.

Prina, Federica et al. Minorities, Media and Intercultural Dialogue. N.p., 2013. Open WorldCat. Web. 25 Sept. 2016.

Prior, Markus. “Media and Political Polarization.” Annual Review of Political Science 16.1 (2013): 101–127. CrossRef. Web. 18 Sept. 2016.

Prosser, Michael H. The Cultural Dialogue: An Introduction to Intercultural Communication. Washington, D.C.: SIETAR International, 1985. Print.

Rieder, Bernhard. “Democratizing Search? From Critique to Society-Oriented Design.” Deep Search. The Politics of Search beyond Google. (2009): 133–151. Google Scholar. Web. 25 Sept. 2016.

Rogers, Richard. Digital Methods. Cambridge, Massachusetts: The MIT Press, 2013. Print.

Sandvig, Christian et al. “Auditing Algorithms: Research Methods for Detecting Discrimination on Internet Platforms.” Data and Discrimination: Converting Critical Concerns into Productive Inquiry (2014): n. pag. Google Scholar. Web. 21 Oct. 2016.

Schmitt, Carl, and G. L Ulmen. Theory of the Partisan: Intermediate Commentary on the Concept of the Political. New York: Telos Press Pub., 2007. Print.

Snickars, Pelle, and Patrick Vonderau, eds. The YouTube Reader. Stockholm: National Library of Sweden, 2009. Print. Mediehistoriskt Arkiv 12.

“The Redirect Method.” Redirect Method. N.p., 2016. Web. 21 Oct. 2016.

Wolfsfeld, Gadi. Media and the Path to Peace. Cambridge; New York: Cambridge University Press, 2004. Print.

Yardi, Sarita, and danah boyd. “Dynamic Debates: An Analysis of Group Polarization Over Time on Twitter.” Bulletin of Science, Technology & Society 30.5 (2010): 316–327. bst.sagepub.com. Web. 19 Sept. 2016.