Game of Fakes: An Entertaining Approach to Analyzing How Awareness of Fake News Affects Trust in News Media

‘Fake news’: to Donald Trump, it’s a well-loved, catch-all phrase for the mainstream news media, but to scholars and politicians, it’s a trend of great concern. Fake news, along with dis-, mis- and mal-information, is becoming harder for the average media consumer to detect and harder still for news media organizations to combat. In this project, we invite media consumers to test their ability to judge the authenticity of videos through the Game of Fakes, an interactive web-based game. We also outline how the game could be extended to answer two questions:

- How confident are people in their ability to judge the authenticity of videos that may or may not be ‘fake’?

- How does challenging a person’s confidence in their ability to identify disinformation affect his or her overall trust in news media?

To challenge your ability to judge the authenticity of videos, play the Game of Fakes at http://game-of-fakes.herokuapp.com/!

What is ‘Fake News’?

As cries of ‘fake news’ in the media and political spheres grow louder, the meaning behind the term has become increasingly distorted. In order to accurately analyze the effect of disinformation awareness on media trust, it is essential to define key terms and phrases. The term ‘fake news’ is particularly problematic, in that it does not adequately encompass the variety or breadth of information pollution in today’s digital world (Wardle & Derakhshan 5). Furthermore, the term ‘fake news’ has been appropriated by politicians attempting to diminish the credibility of news media organizations they dislike (Wardle & Derakhshan 5). Due to the lack of consensus in defining ‘fake news,’ we prioritize the use of ‘disinformation’ in our project, which is “when false information is knowingly shared to cause harm,” (Wardle & Derakhshan 5).

Disinformation in the Digital Age

The ubiquitous technologies we depend upon today play an influential role in the proliferation and persistence of disinformation. Many scholars have noted that the Internet has increased the availability of disinformation, weakened the “gatekeeping mechanisms” that once kept falsehoods from being reported, and obscured the channels through which authoritative knowledge is communicated from experts to the public (Lewandowsky et al. 110; Loss of Trust?). This reliance on online platforms for disseminating news, knowledge and research is leading to what scholars call “context collapse,” in which the trustworthiness of a media object cannot be judged because its context has been stripped away (Loss of Trust?).

Social media, with its limited source context, is of particular interest to media scholars in understanding how disinformation spreads. Some researchers have suggested that the viral nature of social media enables false news content to reach people up to ten times faster than true news content (Vosoughi et al. 259). Further complicating the virality of disinformation on social media is the claim that efforts to debunk or correct disinformation can actually provide greater amplification of the false content (Wardle & Derakhshan 19). In turn, the disinformation receives higher engagement on social media and becomes more prominent than the correction.

With the increased daily use of social media around the world, more and more people receive at least some of their news from social media platforms. According to the Pew Research Center, a majority of adults in the United States and Western Europe (with the exception of Germany) get news from social media platforms daily (Mitchell). Yet while more adults receive news via social media than ever before, it is crucial to acknowledge what news they are not receiving. Algorithms employed by social media platforms tend to serve users content that confirms their existing beliefs, ultimately enabling users to “stay encased in [their] safe, comfortable echo chambers” (Wardle & Derakhshan 49). In the context of disinformation, echo chambers can reinforce an individual’s belief in a false claim through repeated exposure to articles and videos that double down on it, while also limiting exposure to factual content that refutes it. Moreover, researchers have found that even when disinformation is corrected, people are unlikely to fully believe and accept the correction (Lewandowsky et al. 111). Social media platforms, then, can be viewed as an ideal environment for the self-perpetuating cycle of disinformation persistence.

On the other hand, some scholars argue that despite echo chambers, social media can serve as a valuable tool for debunking disinformation when corrections are delivered unobtrusively (Bode & Vraga 633). In analyzing Facebook’s related stories feature, researchers found that users who saw a post containing disinformation alongside corrective related content surfaced by Facebook’s recommendation algorithm tended to revise their initial belief in the disinformation (Bode & Vraga 632). In this sense, corrective content curated by Facebook’s algorithm and served neutrally to users was found to help debunk disinformation (Bode & Vraga 632). However, the limited scope of this experiment leaves open the question of whether social media is a boon or a bane in curbing the spread of disinformation.

Debunking, Disinformation Awareness & Trust in News Media

In today’s rapidly changing media landscape, there has been limited research on the specific effects of disinformation awareness and debunking on overall trust in news media. A 2017 survey of 8,000 people in France, Brazil, the United Kingdom and the United States found that people placed less trust in online-only news sources after becoming aware of ‘fake news’ in the context of politics and election coverage (Kantar 5). The decline was sharpest for news on social media: 58 percent of respondents reported that they trusted news reports found on social media less after learning about fake news (Kantar 32). These results suggest that although many adults get their news from social media, they take such content with a grain of salt.

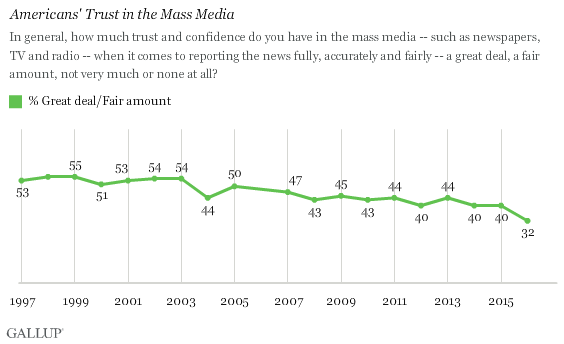

On the other hand, however, distrust in news media outlets across the spectrum has been in decline for years, particularly within the United States. A 2016 Gallup poll found that Americans’ trust in news media “to report the news fully, accurately and fairly” had fallen to its lowest level in polling history, with only 32 percent of respondents reporting they have a great deal or fair amount of trust in the media (Swift). This level of distrust is further polarized across political party lines, as only 14 percent of Republicans reported they believe the news media accurately and fairly reports the news, whereas 51 percent of Democrats surveyed indicated they trust news media (Swift). Media trust polling in other politically polarized countries such as Italy and Hungary reveal a similarly strong relationship between distrust and alleged political bias in news media (Martens et al. 13). However, with so many variables in play including political leanings and preconceived notions of bias in news reporting, it is difficult to assess just how strongly disinformation awareness impacts perceptions of trust in news media.

Fig. 1: According to a 2016 Gallup poll, only 32 percent of Americans trust the mass media to fully, accurately and fairly report the news (Swift).

Disinformation awareness also shapes news consumers’ confidence in their own ability to identify disinformation, as well as their beliefs about how disinformation affects society. In a 2016 Pew Research Center survey, 64 percent of American adults reported that disinformation causes a “great deal of confusion about the basic facts of current issues and events” (Barthel et al. 3). Yet when asked about their own ability to identify ‘fake news,’ Americans were quite confident: 39 percent of respondents reported being “very confident” and 45 percent “somewhat confident” (Barthel et al. 3). Similarly, a 2018 Eurobarometer survey of more than 25,000 people from all 28 EU member states found that 71 percent of EU citizens were either totally or somewhat confident in their ability to identify false information in news media (Fake News and Disinformation Online). Despite this confidence, 83 percent of EU citizens surveyed reported concern about the impact of ‘fake news’ on democracy (Fake News and Disinformation Online). This suggests that although Europeans are confident in their own ability to spot disinformation and resist its effects, they are less confident in their fellow citizens’ ability to do so. Finally, in a survey of 750 Singaporean adults, 80 percent of respondents reported being confident in their ability to identify false news; however, when presented with a series of true and false news headlines, only 57 percent were able to correctly identify at least three out of five false headlines (Trust and Confidence in News Sources).

This disconnect between people’s belief in the effects of disinformation and their confidence in identifying it suggests that news consumers may not be as skilled at spotting ‘fake news’ as they believe. Some scholars attribute this phenomenon to third-person perception, in which people believe others are more susceptible to media effects than they are themselves (Jang & Kim 296). A study of nearly 1,300 American adults found that individuals believed others were more susceptible than they were to the potentially harmful effects of disinformation (Jang & Kim 299). This perception was even stronger along political lines, with Republican respondents reporting that Democrats were more vulnerable to the effects of ‘fake news’ than they were, and vice versa (Jang & Kim 299). While this third-person effect is a compelling explanation, simple overconfidence could also explain why news consumers are confident in, yet not especially skilled at, identifying disinformation (Liu). Whatever the cause, the gap between confidence and competence is cause for concern: if news consumers believe disinformation is dangerous and increasingly prevalent, yet are confident in their ability to identify it, they may be less likely to educate themselves and improve their media literacy skills.

Project Relevance

The ‘fake news’ phenomenon has accelerated the need for new ways to detect false information online, especially with the rise of “deepfake” videos. Deepfakes are produced with AI deep learning techniques and have been used to superimpose one person’s face onto footage of another (Roose). Fact-checking sites and news literacy projects have emerged as resources for detecting misleading content, so it is worth exploring how ‘fake news’ detection and debunking affect people’s trust in news media. In addition, several games have been designed to engage people in fake news detection. By comparing them with our project, we hope to examine aspects of news debunking that these games may not address.

Methodology

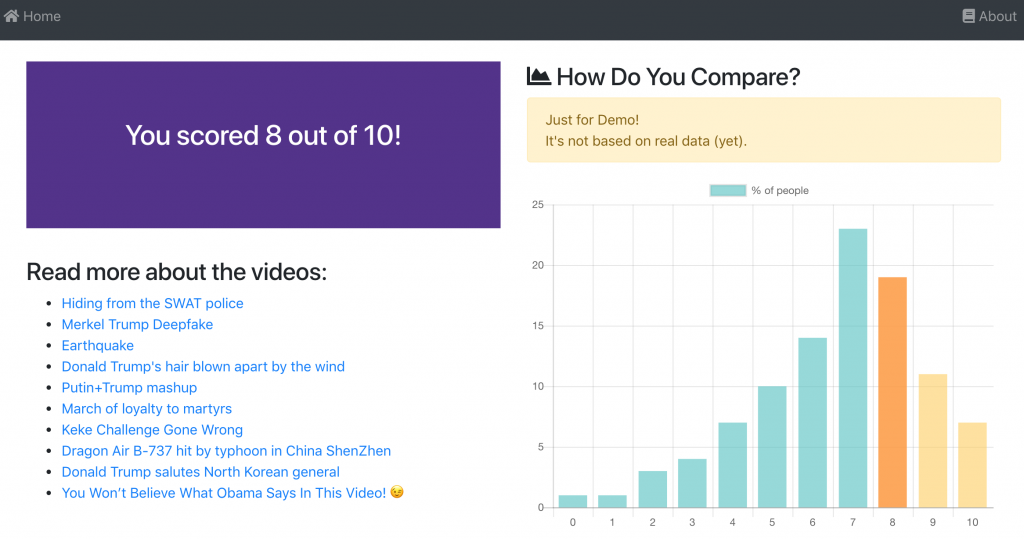

To shed light on the disconnect between belief in disinformation effects and confidence in identifying disinformation, we built the Game of Fakes, an interactive, mobile-friendly, web-based game that presents users with a series of ten videos. After watching each short clip, users are asked whether they think the content is ‘real’ (genuine and unaltered) or ‘fake’ (manipulated and edited). Users are told immediately whether their choice is correct, creating a feedback loop that may affect their confidence in detecting fake videos in later rounds of the game.
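The round structure described above (a fixed list of clips, a real/fake verdict per clip, and immediate feedback) can be sketched as a small Python model. This is a hypothetical illustration, not the deployed game's code: the class and method names (`Clip`, `GameSession`, `submit`) are assumptions, and the per-answer `seconds` field mirrors the decision time the game records.

```python
from dataclasses import dataclass, field


@dataclass
class Clip:
    clip_id: str
    is_fake: bool  # ground truth: True if the clip was manipulated or edited


@dataclass
class Answer:
    clip_id: str
    guessed_fake: bool
    seconds: float  # time spent viewing before deciding
    correct: bool


@dataclass
class GameSession:
    clips: list[Clip]
    answers: list[Answer] = field(default_factory=list)

    def submit(self, guessed_fake: bool, seconds: float) -> bool:
        """Record the player's verdict on the next clip and return immediate feedback."""
        if len(self.answers) >= len(self.clips):
            raise IndexError("every clip has already been answered")
        clip = self.clips[len(self.answers)]
        correct = guessed_fake == clip.is_fake
        self.answers.append(Answer(clip.clip_id, guessed_fake, seconds, correct))
        return correct

    def score(self) -> int:
        """Number of correct verdicts so far."""
        return sum(a.correct for a in self.answers)


# Hypothetical three-clip session (the real game uses ten videos).
clips = [Clip("clip-01", False), Clip("clip-02", True), Clip("clip-03", True)]
session = GameSession(clips)
print(session.submit(guessed_fake=False, seconds=12.5))  # True: clip-01 is genuine
print(session.submit(guessed_fake=False, seconds=8.0))   # False: clip-02 is fake
print(session.score())  # 1
```

Returning the correctness flag from `submit` is what models the feedback loop: the interface can show it to the player before the next clip loads.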

The game is currently deployed at http://game-of-fakes.herokuapp.com/, and its source code is available on GitHub.

Content Choices

Given recent advances in machine learning, which “have radically changed the playing field of image and video manipulation” (Guera and Delp 1), we decided to focus on video content, since our audience is more likely to be unfamiliar with what current technology can do to manipulate video.

We aimed for a balance between genuine and doctored videos to keep the game challenging, although a few of the fake videos have gone viral and are likely to be familiar to some of our audience. For the last video, we chose a well-known deepfake of Barack Obama in which Jordan Peele, the voice behind the manipulated video, reveals himself, explains that the video is doctored and warns that “we need to be more vigilant with what we trust from the Internet” (Silverman). Because this video discloses its own manipulation, answers to it tell us little about players’ detection skills; nevertheless, we saw it as in keeping with our work and chose to end the game with its message about being a skeptical news consumer.

Gamification

Gamification in the context of disinformation debunking is not a novel approach, and we were inspired by a number of existing games that teach users how to identify disinformation. For example, Factitious was created in 2017 by Maggie Farley and Bob Hone at the American University Game Lab. In the game, users are presented with the first few paragraphs of a news article and must decide whether the article is fake or real (Farley & Hone). After making a choice, users are given information about the source and tips for identifying false articles (Farley & Hone). The game is open source and can be modified by newsrooms and educators, which Farley and Hone hope will encourage organizations to adopt it as a tool to increase media literacy (Watson).

Another game attempting to help citizens understand the dynamics of disinformation creation is Bad News, which puts users in the position of an “unscrupulous media magnate,” (“Can You Beat My Score?”). The game, built by researchers at the Cambridge Social Decision-Making Lab, guides users through a series of decisions with the goal of amassing as many virtual Twitter followers as possible by pretending to be a fake news website (Sample). Throughout the game, users earn badges for creating emotional content that goes viral, impersonating well-known Twitter users and creating polarizing online scandals (“Can You Beat My Score?”). Bad News also surveys users after the game to gauge how well a user can identify the strategies employed by fake news generators (Sample).

Further Research Ideas

Given more time, we would further develop the game to understand how disinformation awareness affects trust in news media. We could also present fake news in its specific contexts of distribution to see, for example, whether a digitally manipulated video shared on Facebook is more likely to be trusted than one shared on Twitter. It would also be interesting to examine fake news in other media formats, such as articles and photos.
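One way a Facebook-versus-Twitter comparison could be analyzed is a two-proportion z-test on the share of players who judge the same manipulated clip ‘real’ under each framing. This is a standard statistical test swapped in for illustration, not part of the current game; the function name and the counts in the usage line are hypothetical.

```python
import math


def two_proportion_z(real_a: int, n_a: int, real_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided two-proportion z-test with a pooled proportion.

    real_a / n_a: players who judged the manipulated clip 'real' under framing A
    (e.g. presented as a Facebook post); real_b / n_b: the same under framing B.
    Assumes the pooled proportion is strictly between 0 and 1.
    """
    p_a, p_b = real_a / n_a, real_b / n_b
    pooled = (real_a + real_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 60 of 100 players trusted the clip framed as a Facebook post,
# 45 of 100 trusted the same clip framed as a tweet.
z, p = two_proportion_z(60, 100, 45, 100)
print(round(z, 2), round(p, 3))
```

A small p-value would suggest the framing changes how often the clip is trusted; each player should see only one framing so the two groups stay independent.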

The game currently records the number of seconds a user spends viewing each video before deciding whether the content is genuine or doctored. In the future, we can use these data to check whether decision time is correlated with the accuracy of a user’s answers.
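A minimal sketch of such a check, assuming each answer is stored as a decision time plus a correct/incorrect flag: because correctness is binary, the Pearson correlation between time and a 0/1 correctness flag is the point-biserial coefficient. The function name and sample values are hypothetical.

```python
import math


def point_biserial(seconds: list[float], correct: list[bool]) -> float:
    """Pearson correlation between decision time and a 0/1 correctness flag
    (equivalent to the point-biserial correlation coefficient)."""
    if len(seconds) != len(correct) or len(seconds) < 2:
        raise ValueError("need two equal-length lists with at least two answers")
    y = [1.0 if c else 0.0 for c in correct]
    mean_x = sum(seconds) / len(seconds)
    mean_y = sum(y) / len(y)
    cov = sum((x - mean_x) * (v - mean_y) for x, v in zip(seconds, y))
    sx = math.sqrt(sum((x - mean_x) ** 2 for x in seconds))
    sy = math.sqrt(sum((v - mean_y) ** 2 for v in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined when either variable is constant")
    return cov / (sx * sy)


# Hypothetical answers: the two slower decisions were the correct ones.
r = point_biserial([5, 7, 20, 25], [False, False, True, True])
print(round(r, 2))  # 0.97
```

A positive value would mean slower decisions tend to be correct ones; with only ten answers per player, the coefficient would need to be pooled across many players before it says anything reliable, and it would not show that taking more time causes better answers.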

This project could serve as a springboard for further research on disinformation debunking and its effects on people’s trust. For example, if people played the game daily, would their trust in media change? The game could also be used to predict how well people make decisions when presented with news content. The collected data could be broken down by categories such as age or time spent before making a choice, and used to develop other projects that help us understand the dynamics of content consumption and the spread of disinformation online.
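Breaking results down by category could start with simple per-group accuracy. The sketch below assumes each answer is tagged with a hypothetical age-bracket label; the current game does not necessarily collect age, and the function name and records are illustrative only.

```python
from collections import defaultdict


def accuracy_by_group(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of correct answers for each group label (e.g. an age bracket)."""
    totals: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for group, correct in records:
        totals[group] += 1
        hits[group] += int(correct)
    return {g: hits[g] / totals[g] for g in sorted(totals)}


# Hypothetical (age bracket, answered correctly) pairs.
records = [("18-29", True), ("18-29", False), ("30-49", True), ("30-49", True), ("50+", False)]
print(accuracy_by_group(records))
# {'18-29': 0.5, '30-49': 1.0, '50+': 0.0}
```

Groups with only a handful of answers produce unstable percentages, so any real comparison would also need to report how many answers each group contributed.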

Conclusion

Our goal in creating the Game of Fakes was to illustrate how hard it is to detect digital manipulation and to ask users to question their own ability to identify disinformation. We chose to gamify this complex issue to make it more tangible and interactive for a general audience. But does this visibility contribute to the battle against disinformation in a purely positive way? Is there a way to encourage people to be critical while consuming content without ruining their trust in the media in general? We believe these questions deserve deeper investigation to ensure that awareness and debunking contribute to news media literacy and education rather than further diminishing already declining trust in news media.

References

Barthel, Michael, et al. Many Americans Believe Fake News Is Sowing Confusion. Pew Research Center, Dec. 2016.

Bode, Leticia, and Emily K. Vraga. “In Related News, That Was Wrong: The Correction of Misinformation Through Related Stories Functionality in Social Media.” Journal of Communication, vol. 65, no. 4, Aug. 2015, pp. 619–38. Wiley Online Library, doi:10.1111/jcom.12166.

“Can You Beat My Score? Play the Fake News Game!” Bad News, https://www.getbadnews.com/. Accessed 15 Oct. 2018.

Fake News and Disinformation Online. European Commission, Feb. 2018, p. 13, https://ec.europa.eu/digital-single-market/en/news/final-results-eurobarometer-fake-news-and-online-disinformation.

Farley, Maggie, and Bob Hone. “Factitious.” Factitious 2018!, http://factitious.augamestudio.com/#/. Accessed 15 Oct. 2018.

Guera, David, and Edward J. Delp. Deepfake Video Detection Using Recurrent Neural Networks. 2018, p. 6.

Jang, S. Mo, and Joon K. Kim. “Third Person Effects of Fake News: Fake News Regulation and Media Literacy Interventions.” Computers in Human Behavior, vol. 80, Mar. 2018, pp. 295–302. Crossref, doi:10.1016/j.chb.2017.11.034.

Liu, Claire. “Responsibility, Overconfidence, & Intervention Efforts in the Age of Fake News.” Roper Center, 25 Jan. 2018, https://ropercenter.cornell.edu/fake-news/.

Loss of Trust? Loss of Trustworthiness? Truth and Expertise Today. ALLEA, May 2018, pp. 1–12, https://www.allea.org/european-academies-publish-discussion-paper-on-loss-of-trust-in-science-and-expertise/.

Martens, Bertin, et al. The Digital Transformation of News Media and the Rise of Disinformation and Fake News. JRC Digital Economy Working Paper 2018-02, European Commission, Joint Research Centre, Apr. 2018, pp. 1–57.

Mitchell, Amy, et al. In Western Europe, Public Attitudes Toward News Media More Divided by Populist Views Than Left-Right Ideology. Pew Research Center, May 2018, http://www.journalism.org/2018/05/14/in-western-europe-public-attitudes-toward-news-media-more-divided-by-populist-views-than-left-right-ideology/.

Roose, Kevin. “Here Come the Fake Videos, Too.” The New York Times, 4 Mar. 2018, https://www.nytimes.com/2018/03/04/technology/fake-videos-deepfakes.html. Accessed 15 Oct. 2018.

Sample, Ian. “Bad News: The Game Researchers Hope Will ‘vaccinate’ Public against Fake News.” The Guardian, 20 Feb. 2018. www.theguardian.com, https://www.theguardian.com/technology/2018/feb/20/bad-news-the-game-researchers-hope-will-vaccinate-public-against-fake-news.

Silverman, Craig. “How To Spot A Deepfake Like The Barack Obama–Jordan Peele Video.” Buzzfeed, https://www.buzzfeed.com/craigsilverman/obama-jordan-peele-deepfake-video-debunk-buzzfeed. Accessed 15 Oct. 2018.

Swift, Art. “Americans’ Trust in Mass Media Sinks to New Low.” Gallup News, 14 Sept. 2016, https://news.gallup.com/poll/195542/americans-trust-mass-media-sinks-new-low.aspx.

Trust and Confidence in News Sources. Ipsos, Sept. 2018, pp. 1–25, https://www.ipsos.com/en-sg/susceptibility-singaporeans-towards-fake-news.

Trust in News. Kantar, 31 Oct. 2017, pp. 1–48, http://www2.kantar.com/l/208642/2017-10-27/6g28j.

Vosoughi, Soroush, et al. “The Spread of True and False News Online.” Science, vol. 359, no. 6380, Mar. 2018, pp. 1146–51. science.sciencemag.org, doi:10.1126/science.aap9559.

Wardle, Claire, and Hossein Derakhshan. Information Disorder: Toward an Interdisciplinary Framework for Research and Policymaking. Shorenstein Center on Media, Politics and Public Policy at Harvard Kennedy School, 31 Oct. 2017, https://shorensteincenter.org/information-disorder-framework-for-research-and-policymaking/#Executive_Summary.

Watson, Tennessee. “To Test Your Fake News Judgment, Play This Game.” NPR.Org, 3 July 2017, https://www.npr.org/sections/ed/2017/07/03/533676536/test-your-fake-news-judgement-play-this-game.