Terrorism in the Digital Era: The Dark Side of Livestreaming, Online Content and Internet Subcultures

In the space of 26 minutes on March 15th, 2019, Brenton Tarrant, a radical right-wing extremist, opened fire on two mosques in the city of Christchurch, New Zealand, killing 51 people in the worst mass shooting in the country’s history. What set Tarrant’s attack apart is that he livestreamed it on Facebook, and the footage then proliferated across numerous online platforms, including forums like 8chan and Reddit.

The livestream not only exposed the limitations of large social platforms such as Facebook in controlling the distribution of acutely violent content, but also showed how evolving “media technology and development of features on social media platforms are changing the way that terrorists disseminate their message, ideologies, and their act of violence” (Burke, 2016). Tarrant was a member of various online forums and actively engaged in trolling (the use of deliberately offensive speech), meme, and ‘shitposting’ subcultures. His “anti-immigration, neo-fascist ideology lamenting the supposed decline of European civilization” (Walden, 2019) was shared by many others in extreme online environments.

Free speech means free speech, but where do platforms and regulators draw the line? What content is deemed appropriate by social platforms and the majority of society?

Before his attack, Tarrant teased his plans on Twitter and posted on the /pol/ board of 8chan, a forum widely used by the extreme right, where he linked to his 74-page manifesto, “The Great Replacement,” hosted on file-sharing websites. He urged other users on the board to watch his Facebook livestream and disseminate his alt-right manifesto online. The gruesome livestream footage was then “replayed endlessly on YouTube, Twitter and Reddit, as the platforms scrambled to take down the clips nearly as fast as new copies popped up to replace them” (Roose, 2019).

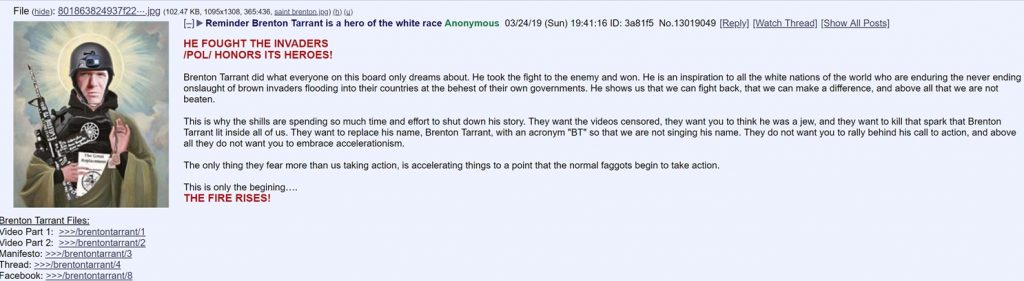

On the /pol/ board on 8chan, as the image above illustrates, Tarrant was hailed as a hero who fought invaders and portrayed as a fighter for the extreme right, while users persisted in re-posting links to his manifesto and the full-length video.

Digital technology was “an integral and integrated component of Tarrant’s attack” (Macklin, 2019) and the video was “not so much a medium for his message insomuch as it was the message” (Macklin, 2019). This highlights Tarrant’s intent for the video to be amplified online far beyond Facebook and the 8chan forum, igniting a new hybrid of tech-based violence. Terrorist organizations such as the Islamic State have exploited video propaganda to reach the masses in recent years, but the continual advancement of “smartphones with high processing power, miniature video and camera devices and high speed data networks” (Burke, 2016) has now enabled individuals to interact with large numbers of people in real time.

New technology has given individuals the power to broadcast any personal message to the general public, instantly and without restrictions. Advancements in communication technology have without doubt benefited our society, but they have also created extensive ramifications for the future of violent content and (extremist) mass propaganda.

How, then, do platforms like Facebook, Reddit, Twitter, and many others, which have advanced social and cultural life in several positive ways, take responsibility for the dark content that inhabits their very ecosystems?

Technological developments, in conjunction with the rise of radical internet subcultures on platforms like 8chan, have stimulated the dark side of extremist ideologies and content on online platforms. In recent times, 8chan has become a “go-to resource for violent extremists” (Roose, 2019). In 2019 alone, at least three mass shootings, including Christchurch and the synagogue shooting in Poway, California, were announced on 8chan “in advance, often accompanied by racist writings that seem engineered to go viral on the internet” (Roose, 2019). 8chan, an imageboard-based forum with very few restrictions, was at first “marketed as a ‘free speech’ alternative to 4chan… and was already known for its extremely loose content moderation” (Hagen et al., 2019).

For Tarrant and other members of the alt-right movement, 8chan and similar platforms accommodate their views that “loathe establishment liberalism and uses extreme speech to provoke anger in others” (Marwick & Lewis, 2017). They give these individuals an anonymous platform to discuss views that are often radical and derogatory. Forums that encourage violent content and its distribution within internet subcultures not only preserve the foundations of extremist ideas but also foster their development.

Tarrant’s barbaric livestream shows how easily social platform systems can be overwhelmed by content, and why regulation and vigilance must be taken more seriously. These platforms, used by millions of individuals every day, wield growing power over today’s economy, geopolitical events, and social habits. Because of this, they have an obligation to devote more resources to keeping their platforms safe from violent content and extremist, hateful views.

In total, Facebook removed “about 1.5 million videos of the attack globally within the first 24 hours” (Macklin, 2019), but failed to keep track of the multiple re-uploads of the livestream on the platform and seemed to scramble only once the video was flagged by users. This suggests that the algorithms in place may not be as effective at automatically flagging inappropriate content as we think. YouTube, similarly, “struggled to cope with the scale of the (livestream) traffic” (Macklin, 2019), highlighting not only the problem of content regulation but also the number of individuals who willingly wanted to share Tarrant’s actions and beliefs.

8chan, on the other hand, went offline after its founder, Fredrick Brennan, called for the site to be shut down. After the El Paso, Texas shooting in August 2019, Brennan stated that 8chan “is not doing the world any good… it is a complete negative to everybody except the users that are on there” (Roose, 2019). Brennan realized that 8chan had turned into something far worse than he had ever envisioned.

In conclusion, as we continue to experiment and deal with the large amount of content online, we must acknowledge that even the biggest platforms, like Facebook and YouTube, dedicate considerable resources to excluding violent content. Having faced criticism in the past, these companies “claimed to be stepping up efforts to use both human employees and artificially intelligent software” (Greenemeier, 2018) to locate and delete violent content.

Nonetheless, automated algorithms and a platform design driven by monetization priorities create a situation where platforms’ violent content and hate speech policies “are weakly enforced, and their practices for removing graphic videos are inconsistent at best” (Roose, 2019). Far-right extremist groups use techniques of ‘attention hacking’ to increase the visibility of their ideas through the calculated use of social media, memes, and bots. These techniques exploit, and often outsmart, platforms’ algorithms to put a spotlight on this type of content.

We must be aware that extremist actors, amid evolving geopolitical realities “compounded by the progress in communication and information technology” (Cotrau, 2007), are increasingly using online platforms to shape radical ideas. Features like livestreaming are transforming our online communication, and platforms’ affordances are reshaping what is possible online. As we continue the effort to regulate content in our digital sphere, understanding the far-reaching implications of our online technology is a crucial step toward securing a safe digital environment.

References:

Biggs, John. “Papa, What’s a Shitpost?” TechCrunch, 23 Sept. 2016, www.techcrunch.com/2016/09/23/papa-whats-a-shitpost/

Burke, Jason. “The Age of Selfie Jihad: How Evolving Media Technology Is Changing Terrorism.” CTC Sentinel, vol. 9, no. 11, 2016, https://ctc.usma.edu/the-age-of-selfie-jihad-how-evolving-media-technology-is-changing-terrorism/

Evans, Robert. “Ignore The Poway Synagogue Shooter’s Manifesto: Pay Attention To 8chan’s /Pol/ Board.” Bellingcat, 28 Apr. 2019, www.bellingcat.com/news/americas/2019/04/28/ignore-the-poway-synagogue-shooters-manifesto-pay-attention-to-8chans-pol-board/

Greenemeier, Larry. “Social Media’s Stepped-Up Crackdown on Terrorists Still Falls Short.” Scientific American, 24 July 2018, www.scientificamerican.com/article/social-medias-stepped-up-crackdown-on-terrorists-still-falls-short/

Hagen, Sal, et al. “Infinity’s Abyss: An Overview of 8chan.” OILab, 8 Sept. 2019, www.oilab.eu/infinitys-abyss-an-overview-of-8chan/

Macklin, Graham. “The Christchurch Attacks: Livestream Terror in the Viral Video Age.” CTC Sentinel, vol. 12, no. 6, July 2019, https://ctc.usma.edu/christchurch-attacks-livestream-terror-viral-video-age/

Marwick, Alice, and Rebecca Lewis. Media Manipulation and Disinformation Online. Data & Society, 2017, p. 11, https://datasociety.net/output/media-manipulation-and-disinfo-online/

“NZ Gun Laws Likely to Face Renewed Scrutiny in Light of Friday’s Attack.” Click Ittefaq, 15 Mar. 2019, www.clickittefaq.com/nz-gun-laws-likely-to-face-renewed-scrutiny-in-light-of-fridays-attack/

Roose, Kevin. “A Mass Murder of, and for, the Internet.” The New York Times, 15 Mar. 2019, www.nytimes.com/2019/03/15/technology/facebook-youtube-christchurch-shooting.html

Roose, Kevin. “‘Shut the Site Down,’ Says the Creator of 8chan, a Megaphone for Gunmen.” The New York Times, 4 Aug. 2019, www.nytimes.com/2019/08/04/technology/8chan-shooting-manifesto.html

Sobiraj, Lars. “8Chan Wird Zu 08Chan: Anonymität Bei Zeronet Gefährdet.” Tarnkappe, 13 Aug. 2019, www.tarnkappe.info/8chan-wird-zu-08chan-anonymitaet-bei-zeronet-gefaehrdet/

Walden, Max. “New Zealand Mosque Attacks: Who Is Brenton Tarrant?” Al Jazeera, 18 Mar. 2019, www.aljazeera.com/news/2019/03/zealand-mosque-attacks-brenton-tarrant-190316093149803.html