Worrisome Biometrics: Deepfakes, ZAO and the Privacy Issue

In early September 2019, a face-swapping app called ZAO attracted widespread attention in China. Using “deepfake” technology, it lets users swap their own faces onto those of famous characters in film and TV clips. ZAO spread like a virus across Chinese social media and was taken down from app stores almost as quickly as it had spread. Although the app was short-lived, it revived a long-standing concern about privacy, especially where biometrics are involved.

Deepfakes

‘Deepfakes’ first emerged in late 2017, when a Reddit user named “deepfakes” used machine learning to replace a porn performer’s face with that of a famous actress and posted the edited video to a subreddit (Knight, 2018). A large number of similar AI-generated videos soon appeared across the Internet. Beyond manipulated pornography, deepfake content has also spread into other realms of social life, such as politics.

In the age of fake news, a technology that can so easily blur the line between truth and fiction has become a serious public concern, prompting calls both for oversight of content producers and for technical tools that detect and filter out fakes. In its early development, as Hao (2019) noted, “deepfakes are currently not mainstream”: producing a deepfake video still required certain skills and a fair amount of resources. Since several algorithms and tools have already been developed to identify forgeries (Chesney and Citron, 1), it might seem that the wide circulation of high-quality face-swapped videos of public figures can be kept under control. However, the technology is advancing rapidly, and the forgers are keeping pace. Deepfake software continues to proliferate while the amount of data needed to produce a fake video has dropped dramatically (Knight, 2019), which raises concerns that go beyond the original question of what is fake and what is true.

ZAO

ZAO’s appearance reveals a new direction in the development of deepfake applications. Building on the underlying deep-learning technology, ZAO’s designers made the app easy to use and widely accessible. The process is as straightforward as it gets: take a selfie or upload a photo, choose a clip from a film or TV show in the app, and within a few seconds you get a synthesised video that lets you “act” in your favourite work.

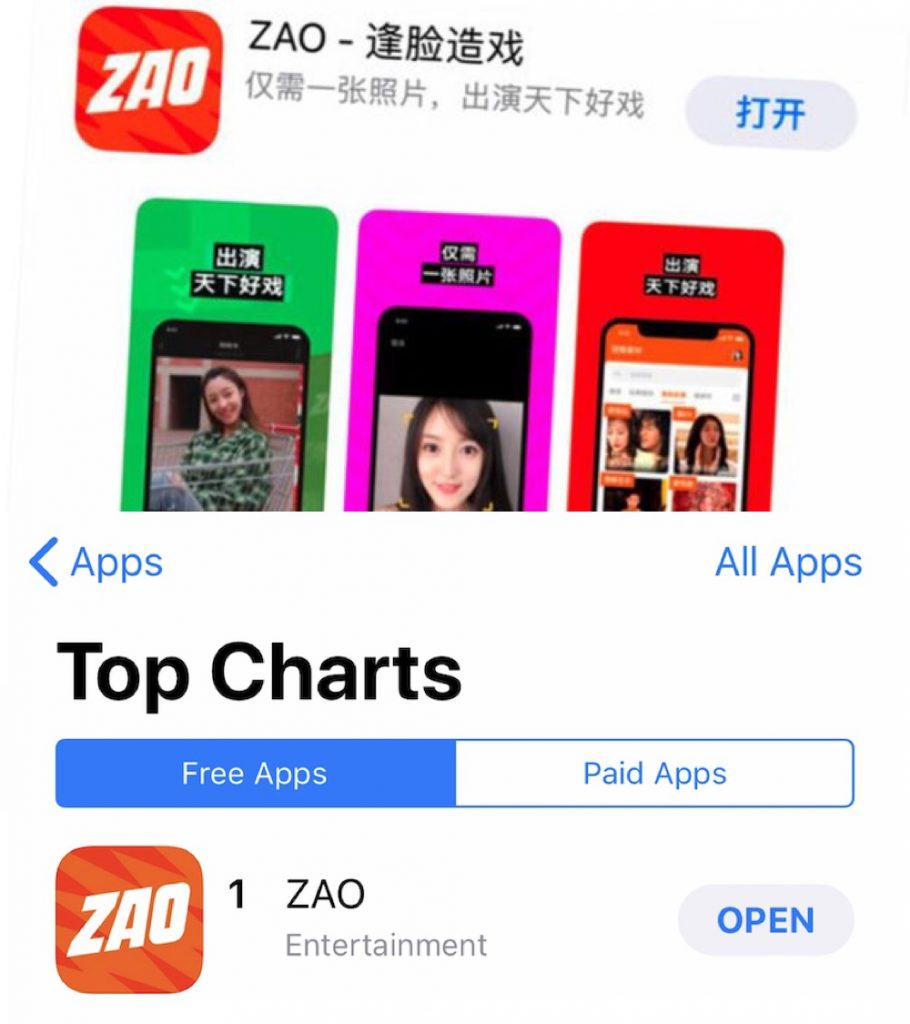

By lowering the barrier to using deepfake technology so drastically, ZAO quickly took Chinese netizens’ WeChat Moments (China’s equivalent of the Facebook News Feed) by storm. On the second day after its debut, hashtags about ZAO had more than 8 million views on Weibo, China’s equivalent of Twitter (Xie, 2019). Meanwhile, within just a few days it had climbed to No. 1 on the download charts of Apple’s App Store (Xia, 2019). ZAO’s popularity benefited, on the one hand, from the novelty of deepfake videos in China (Coleman, 2019) and, on the other, from its exploitation of users’ desire to show off something interesting and unfamiliar as a form of self-display and self-promotion. As a new application of deepfakes, ZAO shows that the technology is extending beyond its original targets, celebrities and politicians, now that ordinary people can produce “fake” content freely, easily and on a large scale.

Controversy

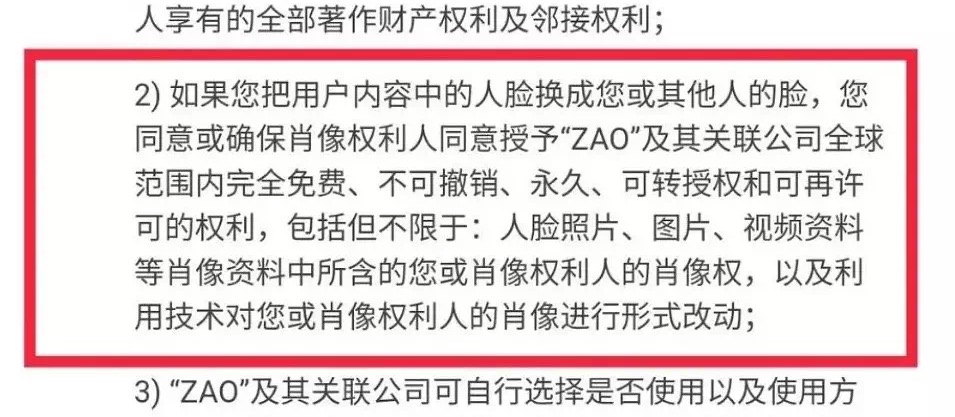

However, ZAO’s wide circulation on the Chinese Internet also aroused public concern because of its user agreement. The app’s terms and conditions “gave the developer the global right to permanently use any image created on the app for free” (Xie, 2019), and the developer had “the right to transfer this authorisation to any third party without further permission from the user” (Coleman, 2019). Industry insiders and lawyers soon questioned these controversial clauses, arguing that such an “unequal” agreement put users’ personal information at risk and seriously infringed their privacy (ibid).

After the surge of public interest in its first two days, ZAO’s users began to realise that their faces were no longer under their control. As boycotts and condemnation of the unfair clauses intensified on social media, the developer, Momo, was forced to apologise for its agreement (Xie, 2019). Moreover, WeChat, where ZAO had become popular, quickly banned videos shared from ZAO, citing “security risks.” The app was also taken down from Apple’s App Store and, at the time of writing, still cannot be downloaded.

ZAO may have been no more than a flash in the pan, but it reveals the current public concern about privacy protection. What matters in ZAO’s case is the risk that people’s faces, one of the basic categories of biometric data (Evans et al., 17), could be used illegally. That raises a question about deepfake technology now that it is in such wide use: what will happen if we lose our faces?

Biometrics

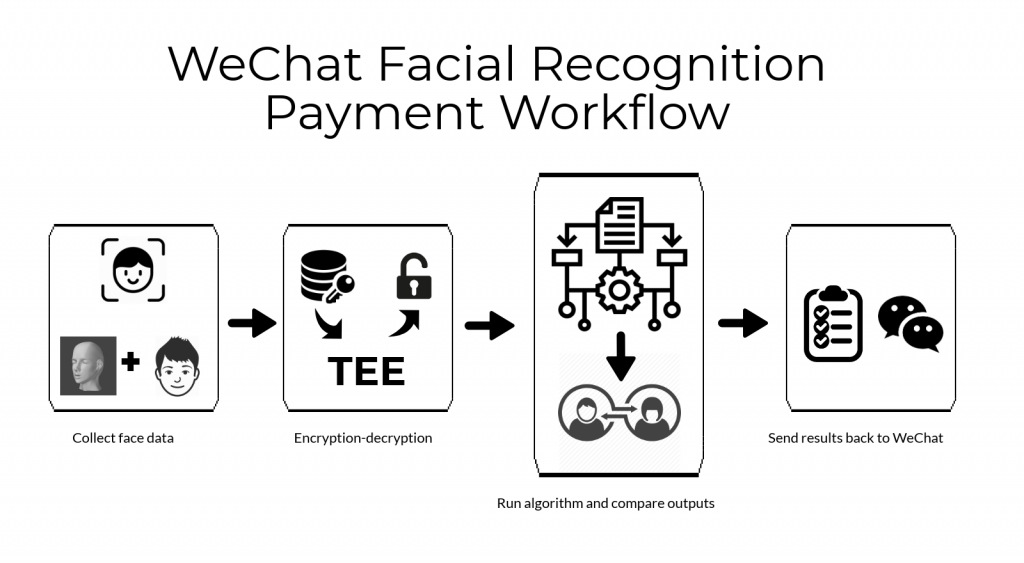

Biometrics refers to systems that enable automatic personal recognition based on behavioural and biological characteristics such as the face, fingerprints, voice and iris (Evans et al., 17). Biometrics-based authentication and identification systems are now widespread and applied in many areas of life. People have gradually grown used to relying on their biometric traits in security applications such as access control, e-commerce and online banking (Zorkadis and Donos, 125). In China, all online payment apps support facial recognition for authorising payments (Liao, 2018). In addition, many airports around the world now require face scans to verify travellers (Winick, 2018). Because an individual’s biometric features are tightly bound to their identity and cannot be forgotten or lost, such authentication systems offer high reliability and convenience. At the same time, protecting this biometric information is urgent, precisely because these traits cannot be replaced once compromised (Piuri and Scotti, 7).

Although biometric recognition is widely accepted, frequent news of data leaks has also raised doubts about the technology. A survey of attitudes towards this kind of unique-to-my-body authentication in China found that more than half of participants had security concerns about biometric payments (Liao, 2018). Meanwhile, the use of biometrics in surveillance systems in public areas presents new privacy challenges (ibid). An individual’s biometric data is personal and sensitive, and public awareness of data protection keeps growing; the case of ZAO is one example.

Now, in an era when facial recognition holds sway, the rise of highly deceptive deepfake technology raises the issue of biometric privacy once again. As it keeps advancing, can technology truly blur the boundary between truth and fiction? If so, what becomes of our personal privacy and security when anyone who has our photos can fake convincing videos of us? In particular, as more and more large companies gain some degree of access to people’s biometric information in a data-driven economy, will we eventually lose control of our own bodies?

Concerns about how to use such technology, and how to balance it against privacy, will keep emerging, and they deserve continued thought and discussion from all of us.

References

Chesney, Robert, and Danielle Citron. “Deepfakes and the New Disinformation War: The Coming Age of Post-Truth Geopolitics.” Foreign Affairs, vol. 98, no. 1, Council on Foreign Relations NY, Jan. 2019, pp. 147–55, <http://search.proquest.com/docview/2161593888/>.

Coleman, Alistair. “‘Deepfake’ App Causes Fraud and Privacy Fears in China.” BBC News, 2019, <https://www.bbc.com/news/technology-49570418>. Accessed 21 Sep. 2019.

Evans, Nicholas, et al. “Biometrics Security and Privacy Protection [From the Guest Editors].” IEEE Signal Processing Magazine, vol. 32, no. 5, IEEE, Sept. 2015, pp. 17–18, doi:10.1109/MSP.2015.2443271.

Hao, Karen. “Deepfakes Have Got Congress Panicking. This Is What It Needs to Do.” MIT Technology Review, 2019, <https://www.technologyreview.com/s/613676/deepfakes-ai-congress-politics-election-facebook-social/>. Accessed 20 Sep. 2019.

Knight, Will. “Fake America Great Again.” MIT Technology Review, 2018, <https://www.technologyreview.com/s/611810/fake-america-great-again/>. Accessed 20 Sep. 2019.

Knight, Will. “A New Deepfake Detection Tool Should Keep World Leaders Safe—for Now.” MIT Technology Review, 2019, <https://www.technologyreview.com/s/613846/a-new-deepfake-detection-tool-should-keep-world-leaders-safefor-now/>. Accessed 21 Sep. 2019.

Liao, Rita. “11/11 Shows Biometrics Are the Norm for Payments in China.” TechCrunch, 2018, <https://techcrunch.com/2018/11/13/biometrics-payment-china-singles-day/>. Accessed 21 Sep. 2019.

Piuri, Vincenzo, and Fabio Scotti. “Biometrics Privacy: Technologies and Applications.” Proceedings of the International Conference on Optical Communication Systems, INSTICC, 2011, p. 7.

Winick, Erin. “Orlando’s Airport Will Require Face Scans on All International Travellers.” MIT Technology Review, 2018, <https://www.technologyreview.com/f/611538/orlandos-airport-will-require-face-scans-on-all-international-travelers/>. Accessed 21 Sep. 2019.

Xia, Allan. “Deepfakes, Face-Swaps and the Future of Identity: Why the ZAO App Went Viral.” The Spinoff, 2019, <https://thespinoff.co.nz/business/05-09-2019/deepfakes-face-swaps-and-the-future-of-identity-why-the-zao-app-went-viral/>. Accessed 22 Sep. 2019.

Xie, Echo. “Chinese Face-Swapping App Sparks Privacy Concerns Soon After Its Release.” South China Morning Post, 2019, <https://www.scmp.com/news/china/society/article/3025271/chinese-face-swapping-app-sparks-privacy-concerns-day-after>. Accessed 21 Sep. 2019.

Zorkadis, V., and P. Donos. “On Biometrics-Based Authentication and Identification from a Privacy-Protection Perspective.” Information Management & Computer Security, vol. 12, no. 1, Emerald Group Publishing Limited, Feb. 2004, pp. 125–37, doi:10.1108/09685220410518883.