Call to think: Twitter and Facebook urge users to fact-check information

Abstract:

Aiming to nudge users in the right direction, Twitter introduced pop-up notifications that appear when users try to (re-)post a news article they have not yet opened, prompting them to be more aware of the content they circulate to their followers. Like Facebook’s fact-checking notifications, the feature is intended to stop the spread of false information on social media.

The spread of misinformation, or “fake news” as it has commonly been called in the media since 2016, seems to be an unavoidable part of social media (Wendling). People can (re-)post almost anything they like, whether or not it is factually correct and whether or not they know what they are posting about. Twitter’s guidelines state explicitly: “Twitter provides a platform for its users to share and receive a wide range of ideas and content, and we greatly value and respect our users’ right to expression. Our users are solely responsible for the content they publish […]. Because of these principles, we do not actively monitor users’ content […]” (Twitter Help Center).

However, the platform is currently testing a new feature: a pop-up notification that urges users to read an article before they (re-)post it (Hutchinson). The aim is to curb the spread of potentially false information and to make users more aware of what they are posting and circulating to their followers. Social media users face an overload of information, and false information is often difficult for them to tell apart from the truth (Lorenz-Spreen, Philipp, et al.). Moreover, research published in 2018 concluded that “fake news” spreads faster and more broadly than real news on Twitter and is more likely to be retweeted (Vosoughi, Soroush, et al., 5).

How does this new Twitter feature work?

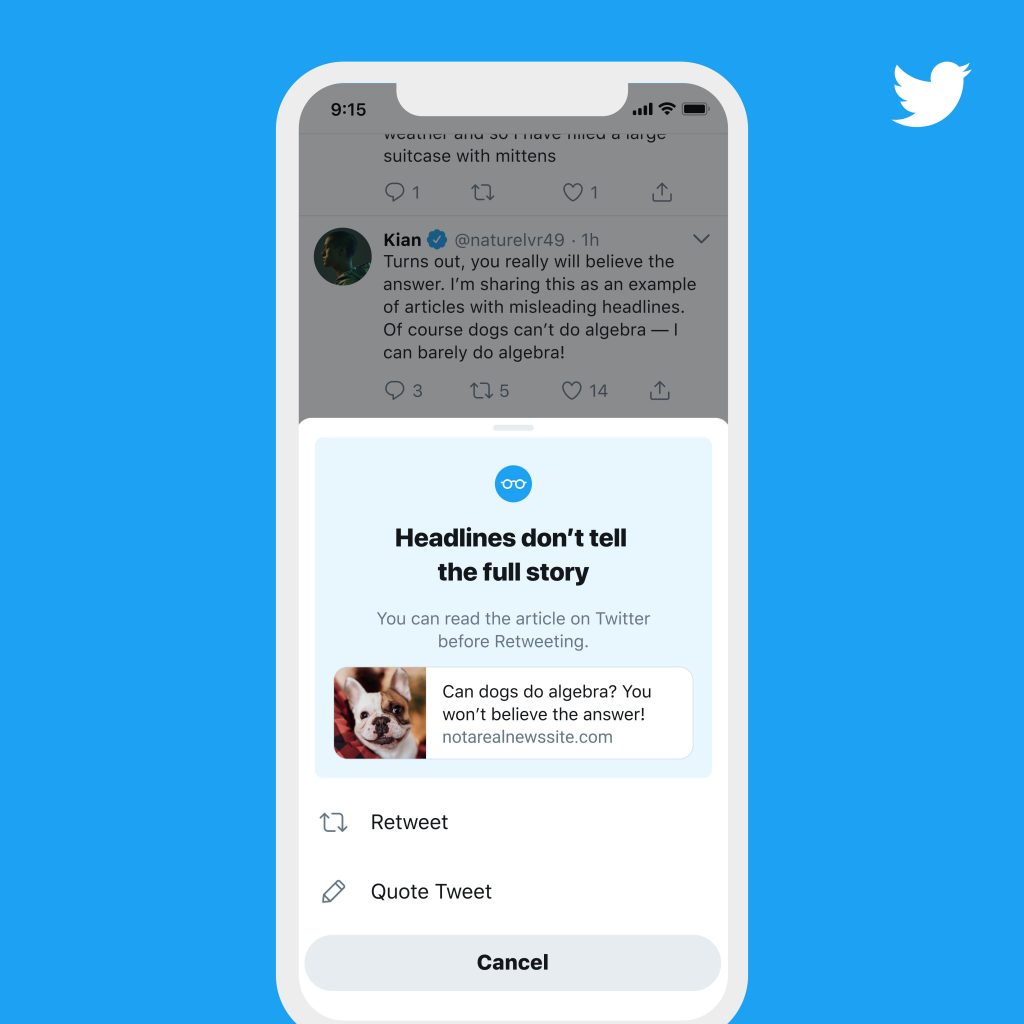

During the test phase, the Twitter app for Android tracks whether a user has recently opened a link to a news outlet’s domain through Twitter. If a user tries to (re-)post a link they have not opened, a pop-up notification asks whether they would like to open and read the article first, pointing out that headlines do not always tell the full story. The prompt does not, however, prevent anyone from (re-)posting the unopened article.
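The decision the prompt makes can be sketched in a few lines. This is a minimal, hypothetical illustration, not Twitter’s actual implementation: it assumes the app keeps a set of links the user has opened through Twitter and a list of domains it treats as news outlets. The names `NEWS_DOMAINS`, `domain_of` and `should_prompt` are invented for the example.

```python
from urllib.parse import urlparse

# Hypothetical set of domains treated as news outlets (an assumption for illustration).
NEWS_DOMAINS = {"bbc.com", "slate.com", "nytimes.com"}


def domain_of(url: str) -> str:
    """Return the host of a URL in lower case, without a leading 'www.'."""
    host = urlparse(url).netloc.lower()
    return host[4:] if host.startswith("www.") else host


def should_prompt(url: str, opened_links: set) -> bool:
    """Return True if a 'read before you retweet' prompt would be shown.

    Only links to news domains that the user has not opened through the app
    trigger the prompt. Articles read in another browser or on another device
    are not in opened_links, so those users are prompted anyway. The prompt is
    only a nudge: nothing here blocks the post.
    """
    return domain_of(url) in NEWS_DOMAINS and url not in opened_links


opened = {"https://www.bbc.com/news/blogs-trending-42724320"}
print(should_prompt("https://slate.com/technology/2013/06/example.html", opened))
print(should_prompt("https://www.bbc.com/news/blogs-trending-42724320", opened))
```

The sketch also makes the limitation discussed below visible: the check only knows about links opened inside the app, and it says nothing about whether the article was actually read.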

An analysis published by Slate in 2013 already showed why this matters: the majority of people post links to slate.com articles without having read the whole article themselves (Manjoo). Of course, the feature is not foolproof. It cannot track a user’s entire browsing history, so it cannot tell whether someone has already read the article in a different browser or on another device, or whether they merely clicked on the link after the prompt and closed it again immediately. Privacy is also a concern, as the platform collects data on which external links users open (Mantelero, 229). Users who do not want Twitter to know which links they have opened have no choice but to leave the platform.

Nonetheless, Twitter reported that it was happy with the results of the test phase and is working on introducing the feature globally: “People open articles 40% more often after seeing the prompt […], people opening articles before RTing increased by 33%, some people didn’t end up RTing after opening the article” (Twitter Comms on Twitter). These results are rather inconclusive, however, as Twitter did not share how many people decided not to retweet after the prompt or how many people took part in the test overall.

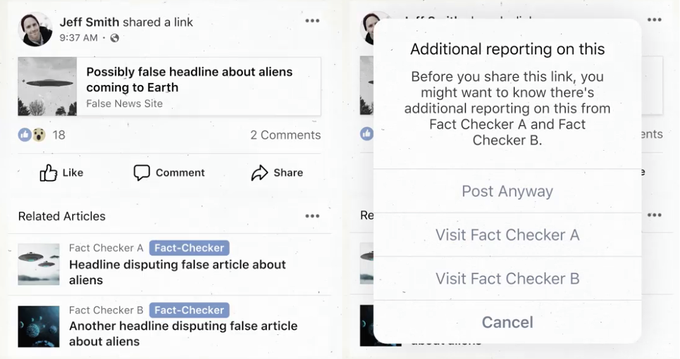

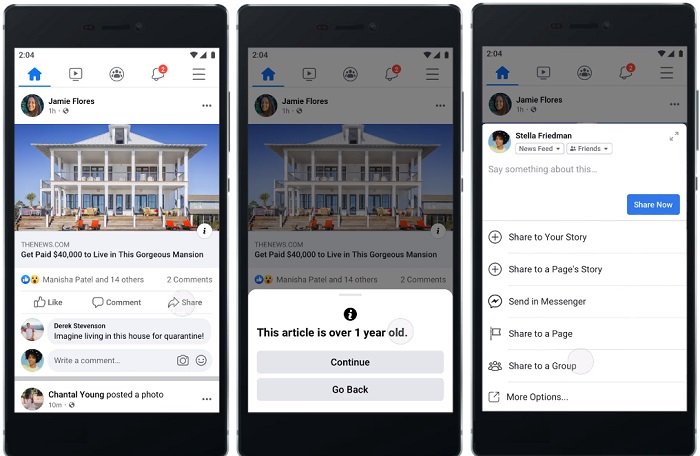

Facebook took a similar approach back in 2016, using third-party fact-checkers to review selected posts (Hutchinson). When users were about to share a flagged article, a pop-up notification showed two separate sources disputing it, in the hope of making people more aware of “fake news” and of what they share online. This notification is no longer in use. However, Facebook is still trying to raise awareness of false or potentially outdated information: at present, a pop-up notification appears when a user tries to share a news article that is more than 90 days old (Hutchinson).
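The age-based rule is simple enough to express directly. The sketch below is a hypothetical illustration that takes only the 90-day threshold from Hutchinson’s report; how Facebook actually determines an article’s publication date is not described in the source, so `is_outdated` simply assumes that date is known.

```python
from datetime import date, timedelta

# The 90-day threshold is the one reported for Facebook's prompt (Hutchinson);
# the function itself is a hypothetical illustration.
MAX_AGE = timedelta(days=90)


def is_outdated(published: date, today: date) -> bool:
    """Return True if an article is more than 90 days old, i.e. would trigger the prompt."""
    return today - published > MAX_AGE


today = date(2020, 6, 25)
print(is_outdated(date(2020, 3, 1), today))   # older than 90 days
print(is_outdated(date(2020, 4, 1), today))   # within 90 days
```

Unlike the Twitter check, this rule needs no data about the user’s browsing, only metadata about the article being shared.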

Research has revealed a problem with Facebook’s old pop-up notifications: because it is impossible to fact-check every single news article, some articles containing false information always go unchecked and circulate without a warning, which gives users the impression that they are accurate. This “implied truth effect” leads users to assume that any article without a warning is correct, even when it is not, making such articles more likely to be believed and shared on Facebook (Pennycook, Gordon, et al., 25).

Amid the current COVID-19 pandemic, stopping the spread of false information is especially important. Despite its usual guidelines emphasizing freedom of speech, Twitter introduced new rules in March 2020 that require the removal of posts containing false and unsolicited ‘advice’ about the virus (Morse). Examples include claims such as “drinking bleach and ingesting colloidal silver will cure COVID-19” or “the news about washing your hands is propaganda for soap companies, stop washing your hands” (Vijaya, Derella). Following such harmful advice, or deliberately ignoring social distancing and other common measures currently in place, could be very dangerous for individuals (Croft).

Privacy concerns play a major role in the debate about these warning features: some people are unhappy that their data is being collected and feel that the notifications restrict their freedom of speech. Indirectly, this is Twitter’s goal: to reduce the thoughtless posting and reposting of information that users know nothing about or that might be false. As mentioned above, the feature cannot tell whether someone has read the article elsewhere, or whether they clicked away after a few seconds without reading anything. Moreover, the feature does not fact-check posts, so a future social media feature might combine fact-checking with checking whether a user has actually read what they share. During the current pandemic, we have to ask whether restricting absolute freedom of speech and urging users to know what they are (re-)posting is worth it in order to stop the spread of harmful misinformation, especially given that “fake news” is more likely to be seen and spread than real news.

References:

- Croft, Lori B. ‘COVID-19: My Perspective’. JACC: Case Reports, vol. 2, no. 9, July 2020, pp. 1423–25, doi:10.1016/j.jaccas.2020.06.014.

- Hutchinson, Andrew. ‘Facebook Adds New Warning Prompts to Stop Unintended Sharing of Older News Articles’. Social Media Today, 25 June 2020, https://www.socialmediatoday.com/news/facebook-adds-new-warning-prompts-to-stop-unintended-sharing-of-older-news/580576/.

- Hutchinson, Andrew. ‘Twitter Shares Insights Into the Effectiveness of Its New Prompts to Get Users to Read Content Before Retweeting’. Social Media Today, 24 Sept. 2020, https://www.socialmediatoday.com/news/twitter-shares-insights-into-the-effectiveness-of-its-new-prompts-to-get-us/585860/.

- Lorenz-Spreen, Philipp, et al. ‘How Behavioural Sciences Can Promote Truth, Autonomy and Democratic Discourse Online’. Nature Human Behaviour, June 2020, pp. 1–8, doi:10.1038/s41562-020-0889-7.

- Manjoo, Farhad. ‘You Won’t Finish This Article’. Slate Magazine, 6 June 2013, https://slate.com/technology/2013/06/how-people-read-online-why-you-wont-finish-this-article.html.

- Mantelero, Alessandro. ‘Competitive Value of Data Protection: The Impact of Data Protection Regulation on Online Behaviour’. International Data Privacy Law, vol. 3, no. 4, Nov. 2013, pp. 229–38, doi:10.1093/idpl/ipt016.

- Morse, Jack. ‘Twitter Steps up Enforcement in the Face of Coronavirus Misinformation’. Mashable, 19 Mar. 2020, https://mashable.com/article/twitter-cracks-down-coronavirus-misinformation/.

- ‘Parody, Newsfeed, Commentary, and Fan Account Policy (the “Policy”)’. Twitter Help Center, https://help.twitter.com/en/rules-and-policies/parody-account-policy. Accessed 26 Sept. 2020.

- Pennycook, Gordon, et al. ‘The Implied Truth Effect: Attaching Warnings to a Subset of Fake News Headlines Increases Perceived Accuracy of Headlines Without Warnings’. Management Science, Feb. 2020, doi:10.1287/mnsc.2019.3478.

- ‘Twitter Comms on Twitter’. Twitter, https://twitter.com/TwitterComms/status/1309178716988354561. Accessed 26 Sept. 2020.

- Vijaya, and Matt Derella. ‘An Update on Our Continuity Strategy during COVID-19’. Twitter Blog, 4 Jan. 2020, https://blog.twitter.com/en_us/topics/company/2020/An-update-on-our-continuity-strategy-during-COVID-19.html.

- Vosoughi, Soroush, et al. ‘The Spread of True and False News Online’. Science, vol. 359, no. 6380, Mar. 2018, pp. 1146–51, doi:10.1126/science.aap9559.

- Wendling, Mike. ‘The (Almost) Complete History of “Fake News”’. BBC News, 22 Jan. 2018, https://www.bbc.com/news/blogs-trending-42724320.

Reference for images:

- Image 1: ‘Twitter Comms on Twitter’. Twitter, https://twitter.com/TwitterComms/status/1309178718456221696. Accessed 26 Sept. 2020.

- Images 2 and 3: Hutchinson, Andrew. ‘Facebook Adds New Warning Prompts to Stop Unintended Sharing of Older News Articles’. Social Media Today, 25 June 2020, https://www.socialmediatoday.com/news/facebook-adds-new-warning-prompts-to-stop-unintended-sharing-of-older-news/580576/.