The Face As An Infrastructure

In media studies, infrastructures are defined as foundational technologies, services and facilities that enable the communication of information and expression. “The face” has become an increasingly significant aspect of many of these infrastructures, and it may therefore be argued that in many ways it has become an infrastructure in itself.

Group Members: Yihui Z., Yingmu Z., Magdalena L., Lin Z.

Introduction

The face has become a data source and systemic tool

This research paper investigates the role of the face as an infrastructure by examining how the face figures within new media. The face can be understood as a new media infrastructure in several ways, ranging from its use as the foundation for technological artwork to facial recognition systems that enable the surveillance of specific populations. As with all new media and technologies, there are ethical considerations that must be taken into account when critically evaluating the use of the face as a data source and systemic tool.

The face as an infrastructure is not a new concept

In Understanding Media (1964), McLuhan proposed a theory that regards the body as a medium. He wrote: “Man becomes, as it were, the sex organs of the machine world, as the bee of the plant world, enabling it to fecundate and to evolve ever new forms.” With the development of multimedia, the body has come to play an important role in the transmission of content. Levisohn (2007) argues that the body should be understood not merely as the controller of multimedia systems, but as a component within these systems and as a unique medium unto itself.

Peng (2020) identifies a trend in which the extension of the body has stopped and the body itself has become a terminal of communication. As part of the body, the face is a unique organ that carries primary functions such as seeing, smelling and tasting. In the present era, however, the functions of the face are evolving. Facial recognition technology digitizes the face, making it possible for people to pay bills and unlock their mobile phones simply by scanning their faces. The face’s function therefore arguably now exceeds the concept of media, especially in the post-Covid period. For Sinitsyna (2020), the face, which is thought to be the most personal and distinctive feature used to identify and recognize others, has in fact always been a more-than-human project and can be seen as an infrastructure in contemporary society.

“‘Facial grids’ are now our public spaces”

“[…] the face is a relational systemic tool more than an individual signifier.”

(Sinitsyna, 2020)

In fact, the word “infrastructure” originated in military parlance, referring to fixed facilities such as air bases (Edwards, 2003).

“By infrastructure […] we mean a network of independent, mostly privately-owned, man-made systems and processes that function collaboratively and synergistically to produce and distribute a continuous flow of essential goods and services.”

(President’s Commission on Critical Infrastructure Protection, 1997: p.3)

In the history of media studies, infrastructures are foundational technologies, services and facilities that enable the communication of information and expression, including printing, the telegraph, radio, fax, television, the Internet and the Web, and movie production and distribution. The functions of the body, by contrast, were long undervalued by scholars. Since the 1960s, however, the body has gradually become a prominent topic in sociology, anthropology, cultural studies, cognitive psychology, literary studies and political economy (Liu, 2020). Interestingly, in the study of body language, human emotions and situations are treated as the infrastructure, while the objects of study are facial and body muscles, posture and proximity (Negrini, 2002).

Hence, it is not difficult to understand why the face can be seen as an infrastructure in contemporary society.

“Face as infrastructure—or more specifically the face as the central unit of value in planetary technical systems and cultural constructs—has been rendered immediately visible by the spread of COVID-19.”

(Sinitsyna, 2020)

To speak of the face as an infrastructure is to recognize that face images, an identification source unique to each individual, are now used very extensively (Gray, 2003). Our faces are not only a matter of anatomy or appearance but also a public issue bound up with daily life. During the pandemic, people had no choice but to wear masks, which created obstacles in everyday life. For example, people often had to discreetly lower their masks to unlock their phones. Online meetings have also prompted people to reconsider the privacy implications of facial recognition. It is therefore difficult to deny that the face has become an infrastructure. In this article, we explore this assertion from different perspectives to demonstrate the value of the concept in contemporary society, investigating different examples of the role of “the face” in new media in order to support the argument that the face is an infrastructure.

Face as data source – case study of facial recognition technology

Facial recognition tools had been used by private businesses and law enforcement for years before the technology was rapidly and widely rolled out, with government involvement, in the distribution of aid during the pandemic (Sato, 2021). Yet although it has been claimed that this technology can help the police investigate crimes and thus make the public safer (O’Neill, 2019), facial recognition has also raised many concerns about privacy, false matches and potentially pernicious uses, with critics “pointing to China, where the government has deployed it as a tool for authoritarian control” (Valentino-DeVries, 2020). This section therefore emphasizes the infrastructural aspect of the face through three case studies, all of which involve facial recognition devices that treat facial features, emotions and movements as data sources.

One example is FindFace, an app that was used in Russia to harass and intimidate women whom it identified as appearing in pornographic films and on pornographic websites (Russon, 2016). Developed by Trinity Digital, the app enables anyone to identify a person from a single photograph and, with the help of its neural network, to reveal their name, location and other personal details (ibid.). The face has thus become a crucial part of modern infrastructure: wherever AI-driven cameras and image-targeting tools are widely deployed, we can easily be recognized once these devices have analyzed the data extracted from our faces.

Besides the privacy concerns raised as facial recognition software services have entered the market, such as the harassment of Russian sex workers and actresses described above, another problem caused by treating the face as a data source is the false matches produced by the underlying algorithms. For example, in February 2019, an incorrect facial recognition match made by US police sent an innocent Black man to jail (General and Sarlin, 2021). Because law enforcement agencies have for some time used facial recognition and treated the face as fundamental infrastructure for locating and tracing suspects, such false matches have provoked heated debate about police use of the technology and raised serious ethical problems. Researchers at the MIT Media Lab found that facial recognition results are much more accurate when the subject’s skin is lighter (Lohr, 2018). In other words, the structural discrimination that exists within real-world infrastructures has been carried over by developers into the artificial intelligence algorithms that operate facial recognition. This bias can manifest itself in data selection and in decisions about which data are included in or excluded from datasets. Dominant ideologies have thus left their mark on these technologies as well.
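How a false match can occur may be easier to see in a deliberately simplified sketch. It assumes, hypothetically, that a system reduces each face to a short list of numbers (an “embedding”), compares a probe image against every enrolled face using cosine similarity, and declares a match when the best score reaches a threshold. The functions `cosine_similarity` and `identify`, the vectors, the names and the threshold values are all invented for illustration; they do not describe FindFace, the police system in the case above, or any other real product.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def identify(probe, gallery, threshold):
    """Hypothetical one-to-many search: return (name, score) of the most similar
    enrolled face if its score reaches the threshold, otherwise (None, best_score)."""
    best_name, best_score = None, -1.0
    for name, embedding in gallery.items():
        score = cosine_similarity(probe, embedding)
        if score > best_score:
            best_name, best_score = name, score
    if best_score >= threshold:
        return best_name, best_score
    return None, best_score


# Hypothetical, hand-made "embeddings"; real systems derive much longer vectors
# from a neural network.
GALLERY = {
    "enrolled_person_a": [1.0, 0.0, 0.0],
    "enrolled_person_b": [0.0, 1.0, 0.0],
}
# Probe image of someone who is NOT enrolled but whose vector lies close to person A.
PROBE_UNENROLLED = [0.9, 0.3, 0.3]

if __name__ == "__main__":
    for threshold in (0.80, 0.95):
        name, score = identify(PROBE_UNENROLLED, GALLERY, threshold)
        print(f"threshold={threshold:.2f} -> match={name}, score={score:.3f}")
```

At the looser threshold the sketch reports `enrolled_person_a` as a match, even though the probe belongs to someone else; at the stricter threshold it reports no match. In this toy model, a false match is simply a stranger whose vector happens to lie close enough to an enrolled face. Raising the threshold reduces such errors at the cost of missing genuine matches, and if a model separates the faces of some groups less well than others, members of those groups would plausibly be more exposed to this kind of error.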

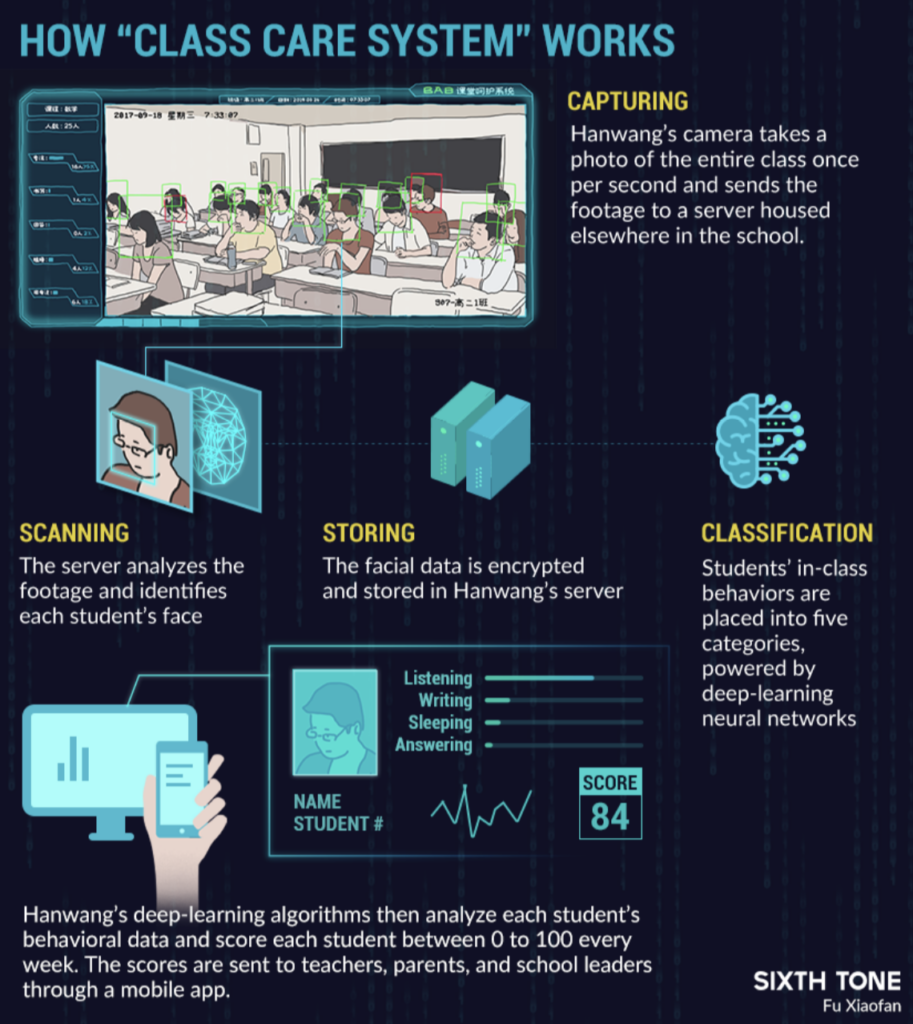

Another controversial application of facial recognition can be found in Chinese middle schools and in Uyghur residential areas. According to news reports, facial recognition cameras have been installed in classrooms in China to capture students’ facial data and analyze it with deep-learning algorithms; the aim is to monitor students’ in-class performance, with real-time grades appearing on teachers’ and parents’ mobile app screens (Xue, 2019). Similarly, the BBC has reported that “AI emotion-detection software has been tested on Uyghurs” in Xinjiang (Wakefield, 2021). The classroom cameras drew criticism from educators, students and netizens in China, not only because students were not asked for consent before the cameras were installed, but also because applying facial recognition to every ordinary student in the education system raises many ethical problems. China’s aggressive use of facial recognition reveals a loop linking the modern panopticon, data surveillance and the political dimension of technology, in which the face is no longer an individual signifier but a data source about ourselves and our social relations.

Face as artwork – case study of biometric art

Melvin Kranzberg (1986) stated in his “six laws of technology” that “technology is neither good nor bad; nor is it neutral”. On the one hand, recognition technology offers new affordances for producing knowledge about facial emotions, features and events. On the other hand, as private businesses, law enforcement and government aid programs increasingly use recognition technology, the accumulation of data capital from the face is accelerating, with facial data effectively put to work as labor. As discussed in the previous section, the face has thus become a crucial part of modern infrastructure. Such data capital and data behaviorism have also attracted the interest of artists, who have begun experimenting with recognition technology as a tool for a form of artistic creation known as biometric art. Through three biometric art case studies, this section explores how artistic creations reflect the way facial recognition technology translates the face into a data source.

People are often labeled and sorted into different categories in society on the basis of their faces. Just as facial recognition cameras in Chinese classrooms monitor students’ in-class performance, facial recognition can generate interpretations of individuals’ behavior by attributing causal laws and assigning universal classifications. As Whittaker et al. (2018, p. 14) observe in the AI Now Report 2018, the “idea that AI systems might be able to tell us what a student, a customer, or a criminal suspect is really feeling or what type of person they intrinsically are is proving attractive to both corporations and governments.”

Take Shu Lea Cheang’s biometric artwork 3x3x6 as an example. Inspired by the idea of “AI gaydar”, which claims to identify sexual orientation from facial features such as nose shape or grooming style, the pioneering Taiwanese net artist and filmmaker Shu Lea Cheang customized a facial recognition system for an “internal network of 3-D surveillance cameras [which] transforms the panopticon into a tower of sousveillance” (Cheang, 2019). The title “3x3x6” refers to the standard architecture of industrial imprisonment: a 3×3-meter cell monitored continuously by six cameras. Preciado, the curator of 3x3x6, argues that if facial recognition technology can identify sexual orientation, it is because humans have taught it “the language of techno-patriarchal binarism and racism” (ibid.). In the artwork, images of people “criminalized by sexopolitical regimes” are combined with exhibition visitors’ selfies, and the entire image set is ultimately transformed into “trans-gender and trans-racialized facial data” (ibid.). Fedorova (2020) argues that biometric art, through interaction design based on facial recognition technology, affords informational exchanges between images and audiences. In the panoptic system of 3x3x6, visitors are both surveillers and surveilled: their faces act upon the artwork and even become the artwork.

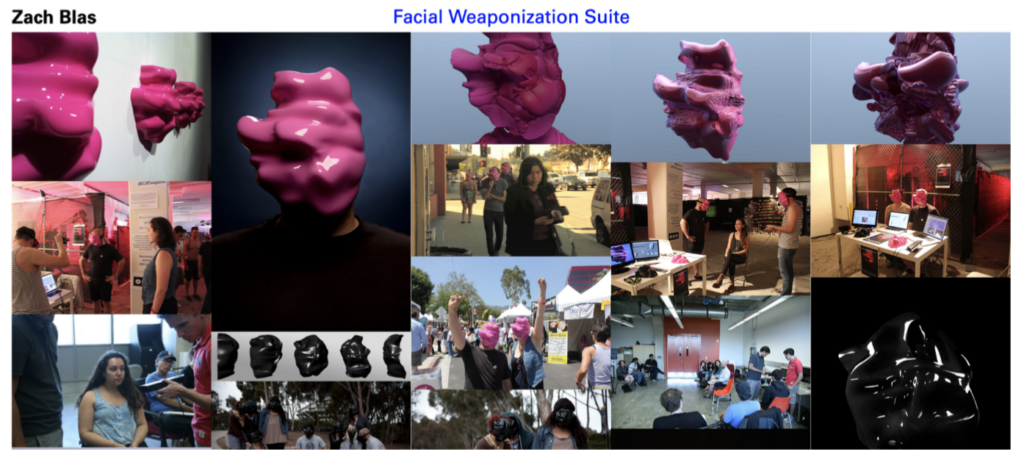

Beyond engaging directly with surveillance at the infrastructural level, the face as artwork can also become a means of protest: some artists have chosen to cover their faces with their artifacts to protest data behaviorism itself. For example, through the aggregation and combination of facial data to create “collective masks” that prevent faces from being detected, Zach Blas’s 2012 biometric artwork Facial Weaponization Suite protests biometric facial recognition and the gender and racial inequalities that these technologies propagate (Blas, 2012). By superimposing many faces into a physical, wearable mask, Blas critiques big data surveillance operating in the dark and conceives of a collective politics of wearing multiple faces at once. Also exploring the idea of faces as “masks”, the American media artist Sterling Crispin (2013) investigated, in the art research project Data Masks, how machines perceive human beings once their actual appearance is abstracted. Crispin (2014) ultimately found that applied face studies conceptualize the face as data rather than as an actual object, and that it is the efficiency of facial recognition that allows the face, as data, to speak for itself. These critical practices in facial-recognition-based biometric art allow the conceptual models used to classify individuals by their faces to be created, manipulated and examined. In short, the face can become an artwork when, as a data source, it speaks for itself.

Conclusion

It can be concluded that, with regard to the role of the face, the extension of the human body in new media has stopped and the face itself has become a terminal of communication, as proposed by Peng (2020). The human face has become a unit of value, and its datafication as an identification source has turned what is arguably the most personal marker of individuality into a commodity and a tool. Primarily through biometric technologies, the face has been turned into a tool (such as a verification system for unlocking devices or applications), into a datafied commodity (for example, in the creation of algorithms) and into a means of surveillance.

A key issue affecting biometric facial recognition systems and programmes as a whole is that the algorithms they rely on need large amounts of data to work effectively. As Van Noorden (2020) reports, “In the 1990s and 2000s, scientists generally got volunteers to pose for these photos — but most now collect facial images without asking permission”. The lack of consent and transparency surrounding facial recognition systems is an ethical concern common to every example explored here. As Van Noorden (2020) further highlights, the legality of researchers and developers collecting faces without consent is not entirely clear. According to the researchers cited, the GDPR (the European Union’s vaunted General Data Protection Regulation) does not specifically regulate this practice, whereas in the U.S. several states have made it illegal for commercial firms to obtain facial data without explicit consent (Jasserand, 2018). Because the legal position remains unclear, collecting data without consent further increases the risk that the data will be misused in discriminatory ways, for example in biased algorithms.

When a large amount of data is collected, there is always a risk that it may be used politically. Data obtained by biometric facial recognition systems are especially vulnerable to this because they can be used to identify and analyse entire populations. As the previous examples show, the algorithms behind biometric facial recognition systems are frequently criticised for propagating, or at least reinforcing, inequalities. The danger, therefore, lies not only in political surveillance and the potential misuse of private data but also in the capacity of that data to be used to discriminate and to reinforce biases.

As explored throughout this research paper, the face, arguably the most personal human characteristic, is no longer merely involved in the use of multimedia technology but has become a component within these systems and a medium in itself. The face has become an infrastructure within new media, and this infrastructure is likely to keep evolving and growing. One example of the increasing complexity of the face as a new media infrastructure is the rise of Metaverse apps and so-called digital twins. Digital twins allow the realistic digital recreation of anything physical – such as a human face. These models are synchronised with their physical counterparts and brought to life. Considering the role and power that “the face” as an infrastructure already holds in both the political and private sectors, the risks of misuse and the ethical concerns highlighted throughout this paper, and the technological developments that will increasingly enable the recreation of the face’s infrastructures, it is difficult to deny the major ethical, social and political threat that the face as an infrastructure may pose in the future.

Bibliography

Blas, Z. (2012). Facial Weaponization Suite. Zach Blas. Retrieved October 25, 2021, from http://zachblas.info/works/facial-weaponization-suite/

Cheang, S. L. (2019). Taiwan in Venice: 3x3x6. Taiwan in Venice. Retrieved October 25, 2021, from http://www.taiwaninvenice.org/2019/

Crispin, S. (2014). Data-masks: Biometric surveillance masks evolving in the gaze of the technological other. University of California, Santa Barbara.

Edwards, P., Bowker, G., Jackson, S., & Williams, R. (2009). Introduction: An Agenda for Infrastructure Studies. Journal of the Association for Information Systems, 10(5), 364–374. https://doi.org/10.17705/1jais.00200

Fedorova, K. (2020). Tactics of Interfacing: Encoding Affect in Art and Technology. The MIT Press.

Fu, X., & Sixth Tone. (2019, March 26). How “Class Care System” Works [Illustration]. https://www.sixthtone.com/news/1003759/camera-above-the-classroom

Gray, M. (2002). Urban Surveillance and Panopticism: will we recognize the facial recognition society? Surveillance & Society, 1(3), 314–330. https://doi.org/10.24908/ss.v1i3.3343

Levisohn, A. (2007). The body as a medium: reassessing the role of kinesthetic awareness in interactive applications. Proceedings of the 15th International Conference on Multimedia, 485–488. ACM. https://doi.org/10.1145/1291233.1291352

Li, Z. (2011). Doreen D. Wu (ed.), Discourse of cultural China in the globalizing age. Hong Kong: Hong Kong University Press, 2008. Pp. x, 262. Pb. $35. Language in Society, 40(5), 649–652. https://doi.org/10.1017/S004740451100073X

Lohr, S. (2018, February 12). Facial Recognition Is Accurate, if You’re a White Guy. The New York Times. https://www.nytimes.com/2018/02/09/technology/facial-recognition-race-artificial-intelligence.html

McLuhan, M. (1964). Understanding Media: The Extensions of Man. Signet Books.

Nautsch, A., Jiménez, A., Treiber, A., Kolberg, J., Jasserand, C., Kindt, E., … Busch, C. (2019). Preserving privacy in speaker and speech characterisation. Computer Speech & Language, 58, 441–480. https://doi.org/10.1016/j.csl.2019.06.001

Negrini, G. (2002). Sorting Things Out. Classification and its Consequences, Geoffrey C. Bowker and Susan Leigh Star. Axiomathes : Quaderni Del Centro Studi Per La Filosofia Mitteleuropea, 13(2), 225–229. https://doi.org/10.1023/A:1021308300809

O’Neill, J. (2019, June 10). Opinion | How Facial Recognition Makes You Safer. The New York Times. https://www.nytimes.com/2019/06/09/opinion/facial-recognition-police-new-york-city.html

Peng, L. (2020). Users in the new media era. China Renmin University Press.

Plantin, J. C., Lagoze, C., Edwards, P. N., & Sandvig, C. (2018). Infrastructure studies meet platform studies in the age of Google and Facebook. New Media & Society, 20(1), 293–310. https://doi.org/10.1177/1461444816661553

Russon, M. (2016, April 27). Russian trolls outing porn stars and prostitutes with neural network facial recognition app. International Business Times UK. https://www.ibtimes.co.uk/russian-trolls-outing-porn-stars-prostitutes-neural-network-facial-recognition-app-1557082

Sato, M. (2021, September 30). The pandemic is testing the limits of face recognition. MIT Technology Review. https://www.technologyreview.com/2021/09/28/1036279/pandemic-unemployment-government-face-recognition/

Sinitsyna, A., Myers, P., Crosman, L., & di Leone, C. (2020, September 30). Face as Infrastructure. Strelka Mag. https://strelkamag.com/en/article/face-as-infrastructure

General, J., & Sarlin, J. (2021, April 29). Nijeer Parks was arrested due to a false facial recognition match. CNN Business. https://edition.cnn.com/2021/04/29/tech/nijeer-parks-facial-recognition-police-arrest/index.html

Valentino-DeVries, J. (2020, January 12). How the Police Use Facial Recognition, and Where It Falls Short. The New York Times. https://www.nytimes.com/2020/01/12/technology/facial-recognition-police.html

Van Noorden, R. (2020). The ethical questions that haunt facial-recognition research. Nature, 587(7834), 354–358. https://doi.org/10.1038/d41586-020-03187-3

Wakefield, J. (2021, May 26). AI emotion-detection software tested on Uyghurs. BBC News. https://www.bbc.com/news/technology-57101248

Xue, Y. (2019, March 26). Camera Above the Classroom. Sixth Tone. https://www.sixthtone.com/news/1003759/camera-above-the-classroom