Bumble Without Gender: A Speculative Approach to Dating Apps Without Data Bias

By Chloë Arkenbout, Joseph O’Malley, Abraham Oyewo and Olta Parai

Bumble brands itself as feminist and revolutionary. However, its feminism is not intersectional. Apart from presenting women making the first move as revolutionary in 2021, Bumble, like various other dating apps, indirectly excludes the LGBTQIA+ community. To investigate this problem and to suggest a possible solution, we combined data bias theory in the context of dating apps with an interface analysis that identified three current problems in Bumble’s affordances, and we intervened with our media object by proposing a speculative design solution set in a possible future where gender does not exist.

Data Bias

Algorithms have come to dominate our online world, and this is no different when it comes to dating apps. Gillespie (2014) writes that the use of algorithms in society is becoming troublesome and has to be interrogated. In particular, there are “specific implications when we use algorithms to select what is most relevant from a corpus of data composed of traces of our activities, preferences, and expressions” (Gillespie, 2014, p. 168). Especially relevant to dating apps such as Bumble is Gillespie’s (2014) theory of patterns of inclusion, where algorithms choose what data makes it into the index, what data is excluded, and how data is made algorithm-ready. This means that before results (such as what type of profile will be included in or excluded from a feed) can be algorithmically provided, information must be collected and readied for the algorithm, which often involves the conscious inclusion or exclusion of certain patterns of data. As Gitelman (2013) reminds us, data is anything but raw, which means it must be generated, guarded, and interpreted. Normally we associate algorithms with automaticity (Gillespie, 2014), yet it is the cleaning and organising of data that reminds us that the developers of apps such as Bumble purposefully choose what data to include or exclude.

This leads to a problem when it comes to dating apps, as the mass data collection conducted by platforms such as Bumble creates an echo chamber of tastes, thereby excluding certain groups, such as the LGBTQIA+ community. The algorithms used by Bumble and similar dating apps search for the most relevant data possible through collaborative filtering. Collaborative filtering is the same type of algorithm used by sites such as Netflix and Amazon Prime, where recommendations are generated based on majority opinion (Gillespie, 2014). These generated recommendations are partly based on your personal preferences, and partly based on what’s popular within a wide user base (Barbagallo and Lantero, 2021). This means that when you first download Bumble, your feed and subsequently your recommendations will essentially be entirely dependent on majority opinion. Over time, those algorithms reduce human choice and marginalize certain types of profiles. Indeed, the accumulation of Big Data on dating apps has exacerbated the discrimination against marginalised populations on apps like Bumble. Collaborative filtering algorithms pick up patterns from human behaviour to determine what a user will enjoy on their feed, yet this creates a homogenisation of biased sexual and romantic behaviour of dating app users (Barbagallo and Lantero, 2021). Filtering and recommendations can even ignore individual preferences and prioritize collective patterns of behaviour to predict the preferences of individual users. Thus, they will exclude the preferences of users whose tastes deviate from the statistical norm.
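The cold-start effect described above can be made concrete with a toy example. The following is a minimal sketch of user-based collaborative filtering, not Bumble’s actual algorithm, which is not public. The swipe data, the `recommend` function and the use of Jaccard similarity are all assumptions made for illustration.

```python
from collections import Counter

# Hypothetical swipe history: user id -> set of profile ids they liked.
likes = {
    "u1": {"p1", "p2"},
    "u2": {"p1", "p2"},
    "u3": {"p1", "p3"},
    "u4": {"p1", "p2"},
    "u5": {"p4"},  # a user whose taste deviates from the majority
}

def jaccard(a, b):
    """Overlap between two sets of liked profiles (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def recommend(user_likes, likes, top_n=3):
    """Rank unseen profiles by the similarity-weighted likes of other users."""
    scores = Counter()
    for other_likes in likes.values():
        # A brand-new user has no history, so every other user counts
        # equally and the ranking collapses into plain popularity.
        weight = jaccard(user_likes, other_likes) if user_likes else 1.0
        for profile in other_likes - user_likes:
            scores[profile] += weight
    # Sort by descending score, breaking ties alphabetically.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [profile for profile, _ in ranked[:top_n]]

new_user_feed = recommend(set(), likes)
print(new_user_feed)
```

In this sketch the feed of a new user contains only the profiles liked by the majority, while the profile liked by a single user (`p4`) does not make the cut, even though nothing is known yet about what the new user would prefer.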

As Boyd and Crawford (2012) stated in their publication on the critical questions for the mass collection of data: “Big Data is seen as a troubling manifestation of Big Brother, enabling invasions of privacy, decreased civil freedoms, and increased state and corporate control” (p. 664). Important in this quote is the notion of corporate control. Through this control, profit-orientated dating apps such as Bumble will inevitably affect their users’ romantic and sexual behaviour online. Furthermore, Albury et al. (2017) describe dating apps as “complex and data-intensive, and they mediate, shape and are shaped by cultures of gender and sexuality” (p. 2). As a result, such dating platforms allow for a compelling exploration of how certain members of the LGBTQIA+ community are discriminated against due to algorithmic filtering.

Discrimination in Dating Apps

When dating apps first emerged, matchmaking was done by the users themselves: they would search profiles (Maroti, 2018) and answer some questions, and, depending on the answers, they would find their soulmate.

Now, dating apps collect the user’s data. Algorithms use this data to recommend matches based on the user’s inferred preferences, and these recommendations in turn shape how users interact and behave on the app (Callander, 2013). For example, if a user spends a lot of time on a profile with blond hair and academic interests, the app will show more people who match those characteristics and slowly show fewer people who differ.
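The feedback loop in this example can be sketched as a small deterministic simulation. This is an illustrative assumption about how engagement-weighted feeds might behave, not a description of any real app. The function `simulate_feed`, the group labels and the dwell values are hypothetical.

```python
def simulate_feed(dwell, rounds):
    """Return the share of the feed given to each group after every round.

    dwell maps a (hypothetical) group of profiles to the relative attention
    the user gives it. Each round, a group's share of the feed is multiplied
    by that attention and the shares are renormalised.
    """
    share = {group: 1 / len(dwell) for group in dwell}  # start with an even feed
    history = [dict(share)]
    for _ in range(rounds):
        raw = {group: share[group] * dwell[group] for group in share}
        total = sum(raw.values())
        share = {group: raw[group] / total for group in raw}
        history.append(dict(share))
    return history

# A mild preference: 60% of attention on one group, 40% on everyone else.
history = simulate_feed({"blond_academic": 0.6, "everyone_else": 0.4}, rounds=10)
for step in (0, 1, 5, 10):
    print(step, round(history[step]["everyone_else"], 3))
```

Under these assumptions, even a mild initial preference compounds: the group that receives less attention occupies a smaller part of the feed in every round, which is the gradual disappearance of differing profiles described above.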

As an idea and concept, it seems great that we can only see people that might share the same preferences and have the characteristics that we like. But what happens with discrimination? The infrastructures of the dating apps allow the user to be influenced by discriminatory preferences and filter out people that do not meet their requirements, thus excluding people who might share similar interests.

According to Hutson et al. (2018), app design and algorithmic culture not only increase discrimination against marginalised groups, such as the LGBTQIA+ community, but also reinforce already existing biases. Racial inequities and discrimination on dating apps, especially against transgender people, people of colour and disabled individuals, are widespread phenomena.

People who use dating apps and already harbour biases against certain marginalised groups would only act worse when given the opportunity. Despite the efforts of apps such as Tinder and Bumble, the search and filter tools they have in place only facilitate discrimination and subtle forms of bias (Hutson et al., 2018). Although algorithms help with matching users, the remaining problem is that they reproduce a pattern of biases and never expose users to people with different characteristics.

Interface Analysis

To get a grasp of how data bias and LGBTQIA+ discrimination are present in Bumble, we conducted a critical interface analysis. First, we considered the app’s affordances. We looked at how “they represent a way of understanding the role of [an] app’s” interface in providing a cue through which performances of identity are made intelligible to users of the app and to the app’s algorithms (MacLeod & McArthur, 2018, 826). Following Goffman (1990, 240), humans use information substitutes (“cues, tests, hints, expressive gestures, status symbols etc.”) as alternative ways to predict who a person is when meeting strangers. In support of this idea, Suchman (2007, 79) acknowledges that these cues are not absolutely determinant, but that society as a whole has come to accept certain expectations and tools that allow us to achieve mutual intelligibility through these forms of representation (85). Drawing the two perspectives together, MacLeod & McArthur (2018, 826) point to the negative implications of the constraints imposed by apps’ self-presentation tools, insofar as they restrict the information substitutes that humans have learnt to rely on in understanding strangers. This is why it is important to critically assess the interfaces of apps such as Bumble, whose entire design is based on meeting strangers and understanding them in short spaces of time.

We began our data collection by documenting every screen visible to the user from the creation of their profile. Then we documented the profile & settings sections. We further documented a number of random profiles to also allow us to understand how profiles appeared to others. We utilized an iPhone 12 to document each individual screen and filtered through each screenshot, selecting those that allowed an individual to express their gender in any form.

We followed McArthur, Teather, and Jenson’s (2015) framework for analyzing the affordances in avatar creation interfaces, in which the Function, Behavior, Structure, Identifier, Hierarchy and Default of an app’s specific widgets are analyzed, allowing us to understand the affordances the interface offers in terms of gender representation.

Function: the purpose of the widget;

Behaviour: the action solicited by the widget;

Structure: the technical description of the widget;

Identifier: the text or icons that convey the widget’s purpose;

Hierarchy: the widget’s position within a hierarchical interface made up of nested sub-sections;

Default: whether the widget defaults to a particular option and what that default is.

We adapted the framework to focus on Function, Behavior, and Identifier; and we chose those widgets we believed allowed a user to represent their gender: Photos, Own-Gender, About and Show Gender (see Fig. 1).

| Widget | Function | Behaviour | Identifier |

| Photos | Choose 2 to 6 profile pictures (Photos must show a clear face) | Add (from Facebook, camera, or photo album) | Preloaded images, x and + icons, ‘hold & drag your photos to change the order’ |

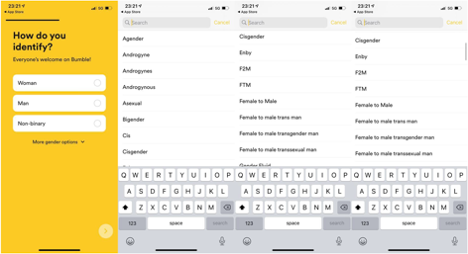

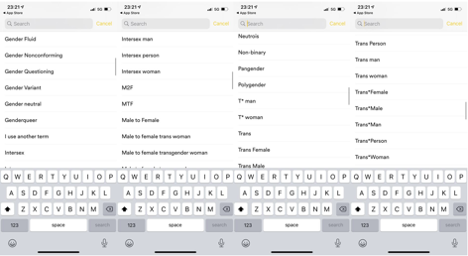

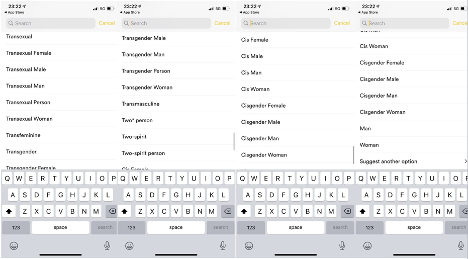

| Own Gender | Set User’s Gender | Choose 1 of 52 | Gender |

| About | Add custom text to profile | Enter Text | About Me; A little bit about you… |

| Show Gender | Choose gender of profiles shown to user (3 options, Male, Female, Everyone) | Tap on and off | (Gender chosen); ‘Show on Profile’ |

Fig. 1: Gender-related affordances in Bumble, version 5.236.0.

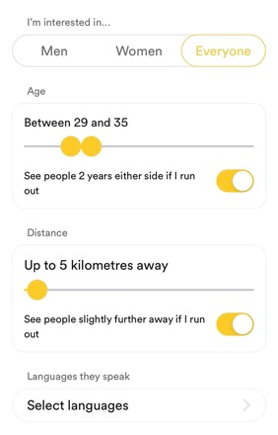

Fig. 2: Current filter options on Bumble

It is worth noting that the table does not capture that Bumble allows users to stop their profiles from being displayed to other users. With this function enabled, your profile can only be seen by users you have swiped right on (chosen as a possible match). This can be understood as a way for users to limit the discrimination that arises from relinquishing control over who views their profile to the app’s algorithm.

Remaining Problems in Bumble

A previous study of Bumble and its interface was conducted by MacLeod and McArthur (2018). Since then, the dating app has improved by adding more gender options, 52 in fact, to the onboarding process (see Fig. 3). However, while analysing its interface we identified three affordances that remain problematic for the LGBTQIA+ community and that we would like to address.

Fig. 3: Current gender options in Bumble.

The first observation we made was that when women match with men, they have 24 hours to respond first, or ‘make the first move’ as Bumble calls it, otherwise the match expires. For people belonging to the LGBTQIA+ community, who gets to talk first is assigned randomly. This shows that although the LGBTQIA+ community is welcome to use the app, they are not the norm, and the app is not built for them.

Secondly, when a user sets their filters about their preferences, they can choose between ‘women’, ‘men’ or ‘everyone’. Other genders, such as non-binary people, intersex people and numerous other genders that do not fit the gender binary, are literally Othered and are not acknowledged by being thrown in the same category together. Although multiple genders are acknowledged in the onboarding process, the app does not present filters for all these varieties. For instance, if a non-binary person wants to talk only to another non-binary person, the app does not afford that kind of preference.
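The mismatch between many identity options and three filter options can be illustrated with a small sketch. The mapping from filter options to profile genders below is an assumption made for illustration and is not Bumble’s actual implementation. The profiles, `FILTER_TO_GENDERS`, `apply_filter` and `can_express` are all hypothetical.

```python
# The three filter options reported in the interface analysis.
FILTER_OPTIONS = ("women", "men", "everyone")

# Hypothetical profiles; the gender labels are a tiny sample of the
# many options offered during onboarding.
profiles = [
    {"name": "A", "gender": "woman"},
    {"name": "B", "gender": "man"},
    {"name": "C", "gender": "non-binary"},
    {"name": "D", "gender": "non-binary"},
]

# Assumed mapping from filter option to the genders it lets through.
# None means "no restriction".
FILTER_TO_GENDERS = {"women": {"woman"}, "men": {"man"}, "everyone": None}

def apply_filter(option, profiles):
    """Return the names of the profiles that pass a given filter option."""
    allowed = FILTER_TO_GENDERS[option]
    return [p["name"] for p in profiles if allowed is None or p["gender"] in allowed]

def can_express(target_gender, profiles):
    """Check whether any filter option returns exactly the profiles of one gender."""
    wanted = [p["name"] for p in profiles if p["gender"] == target_gender]
    return any(apply_filter(option, profiles) == wanted for option in FILTER_OPTIONS)

print(can_express("woman", profiles))
print(can_express("non-binary", profiles))
```

Under this assumed mapping, a preference for women can be expressed exactly, while a preference for non-binary people cannot: the only option that includes them is ‘everyone’, which also returns every other profile.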

Lastly, trans individuals and disabled people face a lot of discrimination, harassment, and death threats on the dating app, as the regulations to protect them are very limited. Transphobia is real and isolates trans people. In particular, trans women and trans women of colour who match with straight men on the app are in danger, and their privacy is often violated or they are humiliated (2018).

Bumble Without Gender

To creatively intervene in Bumble we decided to go off the beaten academic track and look for alternative methods. In an attempt to work towards a solution to the previously identified problems, we turned to futuring and speculative design practices.

A possible future

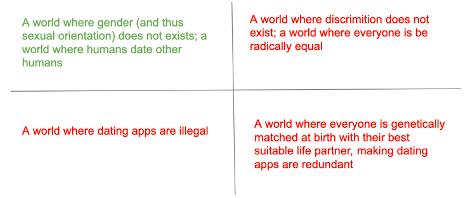

Futuring is a method that is often used in design practices, where possible futures and scenarios are imagined, based on a current problem, using relevant theory. These scenarios help prepare for possible futures and help to understand the problem at hand more deeply. Usually, these methods can take months. For this project, however, we simplified Peter Schwartz’s method and imagined four different possible futures for Bumble’s exclusionary nature towards the LGBTQIA+ community (see Fig. 4). The bottom two scenarios are too dystopian to provide the circumstances for a solution, so we decided to go for the top left scenario, as it solves all the problems that we identified.

Fig. 4: Four possible future scenarios.

Participatory Knowledge Building

Following Rosemary Clark-Parsons and Jessa Lingel’s (2020) Margins as Methods approach, we made sure to include participatory knowledge building in our methodology, centering the voices and experiences of the marginalized group we are researching. We involved the LGBTQIA+ community in our project by asking 21 queer people (a mixture of gay, lesbian, bi, pan, trans, intersex and non-binary people) and 14 cisgender straight people the following question:

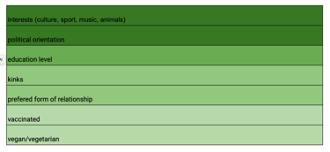

“What kind of filters would be most important to you on dating apps if gender (and therefore sexual orientation) would not exist, if humans would just date other humans?”

Apart from distance and age, the filters that were mentioned the most can be found in Fig. 5.

Fig. 5: Results of preferred filters for dating apps if gender would not exist.

If the scope of this assignment had been bigger, we would have included focus groups, asked more questions, discussed more features and interviewed more people. As Clark-Parsons and Lingel state, as a researcher, it is important to be aware of one’s own biases. That is why it is important to note that, firstly, respondents were selected from our own network due to the scope, which means most of them are younger, progressive, left-leaning voices, and secondly, that Chloë was biased because she is part of the queer community herself. She wanted to interview only people from the queer community, as they are the ones having to deal with these problems. Joseph, Abraham and Olta rightfully pointed out that if there is a world where there is no gender or sexual identity, straight people should have a say as well.

Speculative Design

The results might not be surprising, but we did not want to speculate without consulting the marginalized group we are researching. The results confirmed our hypothesis: the features that become most important on dating apps when looking for a future partner are personality, interests, norms and values, and relational and sexual preferences. In a world where gender does not exist (and sexual orientation does not either), everyone automatically becomes pansexual: one’s sexual attraction to another human is based not on gender but on personality.

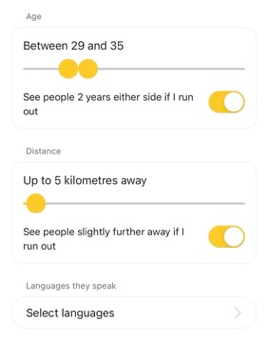

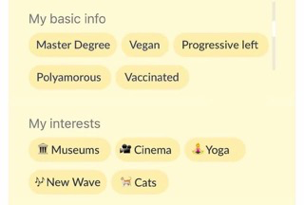

Fig. 6: Filtering options in Bumble without Gender.

Fig. 7: Profile settings in Bumble without Gender.

If this app were actually to be developed, we would have to dive into app development techniques such as A/B testing. However, we are not app developers, and building an app is not the point we are trying to make. The above mock-up (Fig. 6-7) merely shows what Bumble could look like in a possible future where gender does not exist. In this solution, trans people are safe, people outside of the gender binary are not Othered, and the LGBTQIA+ community does not even exist, so it cannot feel left out. The data of this marginalized group cannot be used for other exclusionary or dangerous practices either.

It is important to note that this speculative design project merely serves as a starting point for finding tangible solutions to these problems. Discrimination based on gender and sexual identity is a problem that goes beyond Bumble and other dating apps. It would be a utopia if gender definitions and categorizations no longer existed. However, various stigmas that have tangible consequences for the LGBTQIA+ community are very much present in our society. In order to address these issues adequately, definitions and categorizations are, unfortunately, still very much needed. In the context of this project, it is important, then, that these issues are discussed in an interdisciplinary and intersectional way in future research.

Works cited

Albury, K., Burgess, J., Light, B., Race, K. and Wilken, R. 2017. Data cultures of mobile dating and hook-up apps: Emerging issues for critical social science research. Big Data & Society, 4(2), pp. 1-11.

Barbagallo, Camila, and Lantero, Rocio. “Dating apps’ darkest secret: their algorithm”. Rewire Magazine, 24 October 2021, https://rewire.ie.edu/dating-apps-darkest-secret-algorithm/.

Boyd, D. and Crawford, K. 2012. Critical questions for big data: Provocations for a cultural, technological, and scholarly phenomenon. Information, communication & society, 15(5), pp. 662-679.

“Bumble: Female-founded dating app tops $13bn in market debut”, BBC, 11 February, 2021, https://www.bbc.com/news/business-56031281

Callander, Denton. 2013. “Just a preference: Exploring concepts of race among gay men looking for sex or dates online”, Faculty of Arts and Social Sciences.

Clark-Parsons, Rosemary and Jessa Lingel. 2020. “Margins as Methods, Margins as Ethics: A Feminist Framework for Studying Online Alterity.” Social Media + Society 6 (1): 2056305120913994. https://doi-org.proxy.library.uu.nl/10.1177%2F2056305120913994.

Gillespie, Tarleton. 2014. “The Relevance of Algorithms”. In: Gillespie, T, Boczkowski, P J and Foot, K A (eds.) Media Technologies: Essays on Communication, Materiality, and Society. Cambridge MA: MIT Press, 167-194.

Gitelman, Lisa, ed. Raw data is an oxymoron. MIT press, 2013.

Goffman, Erving. 1990. The Presentation of Self in Everyday Life. 1959. London: Penguin

Jevan A. Hutson, Jessie G. Taft, Solon Barocas, and Karen Levy. 2018. Debiasing Desire: Addressing Bias & Discrimination on Intimate Platforms.

Joint CSO submission to the Special Rapporteur on the right to privacy, “Gender Perspectives on Privacy in the Digital Era: A joint submission on sexual orientation, gender identity and expression and sex characteristics”, 2018

MacLeod, Caitlin and McArthur, Victoria. 2019. “The construction of gender in dating apps: an interface analysis of Tinder and Bumble”, Feminist Media Studies, 19:6, 822-840, DOI: 10.1080/14680777.2018.1494618

Maroti. A, “Algorithms behind Tinder, Hinge and other dating apps control your love life. Here’s how to navigate them”, Chicago Tribune, December 6, 2018, https://www.chicagotribune.com/business/ct-biz-app-dating-algorithms-20181202-story.html

McArthur, Victoria, Robert John Teather, and Jennifer Jenson. 2015. The Avatar Affordances Framework: Mapping Affordances and Design Trends in Character Creation Interfaces. In Proceedings of the 2015 Annual Symposium on Computer-Human Interaction in Play, 231–240. New York: ACM.

Schwartz, Peter. The Art of the Long View: Planning for the Future in an Uncertain World. Doubleday & Co, 1996.

Suchman, Lucille Alice. 2007. Human-Machine Reconfigurations: Plans and Situated Actions. Cambridge: Cambridge University Press