Fooling the deepfakes: How we can outsmart A.I. in pursuit of the truth

From innocuous face-swapping tools to perfectly realistic doctored footage of global leaders and personalities, deepfake technology makes it all possible. The applications of this technology are seemingly endless, and the rate at which it is developing is mind-boggling, to say the least. The question then remains: How can we govern deepfake applications and keep malicious use of them at bay?

What are deepfakes?

Deepfakes are essentially the product of deep machine-learning software (often known by its more popular handle ‘A.I.’) that merges the content of two sources to create something fake (such as this video of Barack Obama explaining the dangers of deepfakes and telling us to “stay woke”). What’s worrisome is that, while still in its infancy, this technology is rapidly pushing the boundaries of realism, to the point where its output becomes indistinguishable from the real thing (Benjamin).

There’s already a plethora of tools out there that use deepfake technology. Online communities, such as the subreddit dedicated to deepfakes, pioneered amateur use of the technology, mostly for superimposing celebrity faces onto pornographic videos (Cole). This quickly blossomed into a wide variety of apps that allow the user to create deepfakes in a matter of seconds, often with arguably hilarious results.

How deepfakes pose an unprecedented risk

Now, even after seeing some examples of deepfake technology, you may not yet be convinced of the risks it poses. After all, it is still comparatively easy to spot a fake, and the uses, while maybe unsettling, are far from a major reason for concern. However, this could soon change: industry figures like Hao Li suggest that the technology might be ‘perfected’ within a year (Stankiewicz), making deepfakes practically undetectable by humans. That could happen as soon as the upcoming U.S. elections, and many political figures have already expressed their concerns (Metz). Humans naturally tend to believe what they see, as they have no reason to believe otherwise (Parry 176), making this all the more pressing.

This development could lead to a new, heightened level of disinformation in both online discourse and (geopolitical) news and journalism. The core of this problem is the very nature of deepfakes themselves: not the idea that such content can be created, but rather the fact that all of this can be done without any form of consent and with few repercussions (Maras and Alexandrou 255). This will continue the trend of declining trust in a variety of fields, such as journalism, scientific research and politics, even when the content people are seeing is actually legitimate (Fletcher 465), not to mention the ramifications for content creators.

Finding a solution to deepfakes

“What problems powerful computation gives, powerful computation can (usually) also take away, at least partially.”

— Antonio García Martínez, WIRED

While the last paragraph may have sounded slightly dystopian and pessimistic, there are several options in the works to combat the use of deepfake technology for malicious intent, from its use in politics and global events to small-scale cyberbullying practices. “Researchers need to take an approach assuming deepfakes will be perfect”, Li argued, which is exactly what’s happening (Miller).

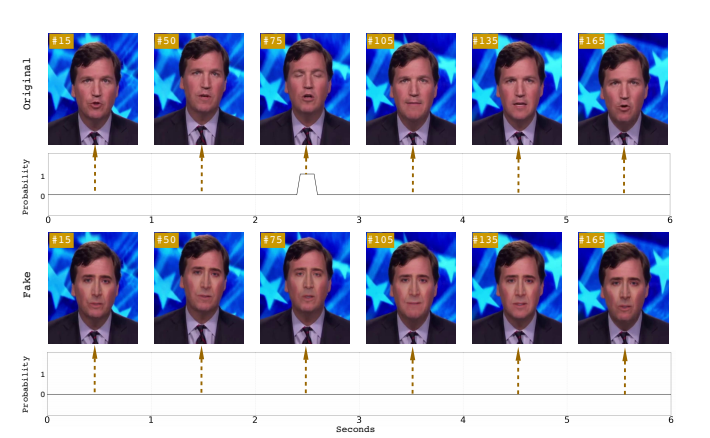

Yuezun Li and Siwei Lyu from the Computer Science department of the University at Albany, SUNY, for example, have recently published a paper detailing a way to identify deepfake videos and photos by the distinctive “face warping artefacts” that result only from the use of deepfake technology (46). Together with Ming-Ching Chang, they also published research that uses eye blinking to determine the authenticity of a video. Their reasoning is that, since deepfakes are generally based on still images, blinking eyes are not represented in the source material, so the resulting deepfake video shows an unblinking face as well (Li, Chang and Lyu 2).
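
To make the blinking idea concrete, here is a minimal sketch in Python. It is not the researchers' method, which is described as a trained detector in their paper; it is a much simpler rule of thumb that counts blinks and flags footage with suspiciously few of them. It assumes that some upstream face-tracking step (hypothetical here) has already produced one "eye openness" score per frame, and the names `count_blinks`, `blinks_per_minute` and `looks_unblinking`, as well as every threshold, are illustrative assumptions.

```python
from typing import Sequence


def count_blinks(eye_openness: Sequence[float],
                 closed_below: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count blinks in a series of per-frame eye-openness scores.

    A blink is a run of at least `min_frames` consecutive frames whose
    score falls below `closed_below`. Both values are illustrative.
    """
    blinks = 0
    run = 0  # length of the current run of "closed" frames
    for value in eye_openness:
        if value < closed_below:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # the clip may end in the middle of a blink
        blinks += 1
    return blinks


def blinks_per_minute(eye_openness: Sequence[float], fps: float) -> float:
    """Convert the blink count into a rate, given the video frame rate."""
    if not eye_openness or fps <= 0:
        raise ValueError("need at least one frame and a positive frame rate")
    minutes = len(eye_openness) / (fps * 60.0)
    return count_blinks(eye_openness) / minutes


def looks_unblinking(eye_openness: Sequence[float], fps: float,
                     min_rate: float = 5.0) -> bool:
    """Flag a clip whose blink rate is below `min_rate` (an assumed cut-off)."""
    return blinks_per_minute(eye_openness, fps) < min_rate
```

A clip whose scores never dip below the threshold would be flagged by `looks_unblinking`, while a clip with regular dips would pass. A simple rule like this is easy to evade once forgers know about it, which is why Li's advice to assume perfect deepfakes matters.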

Other researchers and industry leaders have pointed to A.I. technology as the solution to its own problem. Several platforms such as Gfycat, Pornhub and Facebook have started using A.I. to recognise and remove deepfake content from their platforms (Matsakis). Bans on deepfake content have since become more widespread, with many platforms following suit, including Reddit, where amateur deepfakes first took off.

From Bitcoin to deepfake

As is always the case, however, those who seek to use the technology will find ways around these restrictions. As such, there is a need for a more foolproof system that can verify sources and constrain disinformation properly. One candidate for this gargantuan task might be blockchain technology, commonly known for governing the systems of cryptocurrencies around the globe. Martínez states that “a decentralised, public ledger of consensus-driven facts about the world — which is what a blockchain fundamentally is — has a utility well beyond wild-eyed, crypto-anarchist dreams”, and he is essentially right. Blockchain technology is objective and decentralised in nature, making it a perfect candidate for validation of information worldwide, and startup organisations such as Factom are hoping to put this into practice.

Research into this possibility is already underway, with Hasan and Salah presenting ways to allow for blockchain-based proofs of authenticity (PoAs) that work with unique hashes and metadata to offer a decentralised, independent way of verifying online content (41598). Such a record is far harder to tamper with, making it more secure and reliable than previous methods and excellent for providing probative and executive value to the verified content (Martínez). These solutions, according to Hasan and Salah, are “generic enough and can be applied to other types of digital content”, allowing for far more extensive use overall.
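
The core idea of verifying content by its hash can be sketched in a few lines of Python. This is a toy under stated assumptions, not Hasan and Salah's system: a plain in-memory list stands in for the blockchain, there is no network, consensus or smart contract, and the names `ToyLedger`, `Entry`, `register`, `find` and `chain_is_intact` are made up for illustration. What it does show is how a unique hash ties a record to one exact piece of content, and how chaining each record to the previous one makes later tampering detectable.

```python
import hashlib
from dataclasses import dataclass
from typing import List, Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(content_hash: str, creator: str, prev_hash: str) -> str:
    """Hash the fields of an entry so that any change to them is detectable."""
    payload = "|".join([content_hash, creator, prev_hash]).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass(frozen=True)
class Entry:
    content_hash: str  # SHA-256 of the registered content
    creator: str       # metadata: who registered it (illustrative)
    prev_hash: str     # entry_hash of the previous entry
    entry_hash: str    # hash over the three fields above


class ToyLedger:
    """In-memory stand-in for a public ledger (an assumption, not a blockchain)."""

    def __init__(self) -> None:
        self.entries: List[Entry] = []

    def register(self, content: bytes, creator: str) -> Entry:
        """Record the hash of `content`, linked to the previous entry."""
        content_hash = hashlib.sha256(content).hexdigest()
        prev_hash = self.entries[-1].entry_hash if self.entries else GENESIS
        entry = Entry(content_hash, creator, prev_hash,
                      _entry_hash(content_hash, creator, prev_hash))
        self.entries.append(entry)
        return entry

    def find(self, content: bytes) -> Optional[Entry]:
        """Return the entry registered for exactly this content, if any."""
        content_hash = hashlib.sha256(content).hexdigest()
        for entry in self.entries:
            if entry.content_hash == content_hash:
                return entry
        return None

    def chain_is_intact(self) -> bool:
        """Recompute every hash and link; any edited entry breaks the chain."""
        prev_hash = GENESIS
        for entry in self.entries:
            expected = _entry_hash(entry.content_hash, entry.creator,
                                   entry.prev_hash)
            if entry.prev_hash != prev_hash or entry.entry_hash != expected:
                return False
            prev_hash = entry.entry_hash
        return True
```

Registering the bytes of an original video and later calling `find` with a doctored copy returns nothing, because even a one-byte change produces a different hash. Note the limit of this approach: a match only shows that the content is the same as what was registered, not that the registered content was truthful in the first place.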

Final remarks

At MIT Technology Review’s Emtech conference, Yoshua Bengio, an expert on artificial intelligence, said that “it is important to recognise we’re very far from human-level A.I. in many ways” (Miller), and thankfully so. As A.I. technology develops, many of our commonplace understandings of discourse and social structures become topics of debate and need to be redefined accordingly, so as to properly incorporate the technology into our lives.

More importantly, these concepts for combating misinformation may be flawless in theory, but they have yet to find proper expression in real-life applications and situations. Even if there is a decentralised, end-all-be-all governing entity that shows something is truly authentic, it is ultimately worthless if we are, in fact, headed for a post-truth era of information and public discourse (Lewandowsky 34-36) and have no way to tell these credible sources from the rest.

Cited works

Benjamin, Garfield. “Deepfake Videos Could Destroy Trust in Society-Here’s How to Restore It.” Phys.org, Phys.org, 6 Feb. 2019, https://phys.org/news/2019-02-deepfake-videos-societyhere.html.

BuzzFeedVideo. “You Won’t Believe What Obama Says In This Video!” YouTube, YouTube, 17 Apr. 2018, https://www.youtube.com/watch?v=cQ54GDm1eL0.

Cole, Samantha. “We Are Truly Fucked: Everyone Is Making AI-Generated Fake Porn Now.” Vice, 24 Jan. 2018, https://www.vice.com/en_us/article/bjye8a/reddit-fake-porn-app-daisy-ridley.

Fletcher, John. “Deepfakes, Artificial Intelligence, and Some Kind of Dystopia: The New Faces of Online Post-Fact Performance.” Theatre Journal 70.4 (2018): 455-471.

Hasan, Haya R., and Khaled Salah. “Combating Deepfake Videos Using Blockchain and Smart Contracts.” IEEE Access 7 (2019): 41596-41606.

Lewandowsky, Stephan, Ullrich KH Ecker, and John Cook. “Beyond misinformation: Understanding and coping with the “post-truth” era.” Journal of Applied Research in Memory and Cognition 6.4 (2017): 353-369.

Li, Yuezun, Ming-Ching Chang, and Siwei Lyu. “In ictu oculi: Exposing ai generated fake face videos by detecting eye blinking.” arXiv preprint arXiv:1806.02877 (2018).

Li, Yuezun, and Siwei Lyu. “Exposing deepfake videos by detecting face warping artifacts.” arXiv preprint arXiv:1811.00656 (2018).

Maras, Marie-Helen, and Alex Alexandrou. “Determining authenticity of video evidence in the age of artificial intelligence and in the wake of Deepfake videos.” The International Journal of Evidence & Proof 23.3 (2019): 255-262.

Martínez, A. “The Blockchain Solution to Our Deepfake Problems.” Wired Magazine (2018).

Matsakis, Louise. “Gfycat Uses Artificial Intelligence to Fight Deepfakes Porn.” Wired, Conde Nast, 15 Feb. 2018, https://www.wired.com/story/gfycat-artificial-intelligence-deepfakes/.

Metz, Rachel. “The Fight to Stay Ahead of Deepfake Videos before the 2020 US Election.” CNN, Cable News Network, 12 June 2019, https://edition.cnn.com/2019/06/12/tech/deepfake-2020-detection/index.html.

Miller, Michael J. “A Deepfake Putin and the Future of AI Take Center Stage at Emtech.” PCMAG, 20 Sept. 2019, https://www.pcmag.com/article/370887/a-deepfake-putin-and-the-future-of-ai-take-center-stage-at-e.

Stankiewicz, Kevin. “‘Perfectly Real’ Deepfakes Will Arrive in 6 Months to a Year, Technology Pioneer Hao Li Says.” CNBC, CNBC, 20 Sept. 2019, https://www.cnbc.com/2019/09/20/hao-li-perfectly-real-deepfakes-will-arrive-in-6-months-to-a-year.html.

Xia, Allan. “For Those Curious about How Protection of Identity Works in #Zao, the App Verifies against a Database of ‘Public Figures’ When You Upload a Photo. Apparently It’s Quite a Badge of Honour for Some Folks to Be Rejected by the System Because They Were Mistaken for a ‘Celebrity’. Pic.twitter.com/WZVcI58Hfo.” Twitter, Twitter, 2 Sept. 2019, https://twitter.com/AllanXia/status/1168529279006625793.

Xia, Allan. “What Truly Remains to Be See Is How Apps like #Zao Can Prevent Users Uploading Videos of Others to Be #Deepfake’d without Permission. Corporations Can Always Sue, but as the #BarbraStreisandEffect Has Shown, the Average Person Can’t Do Much If They’re Forced to Become a #Meme.” Twitter, Twitter, 2 Sept. 2019, https://twitter.com/AllanXia/status/1168529392160501760.