Picturing visual search technology

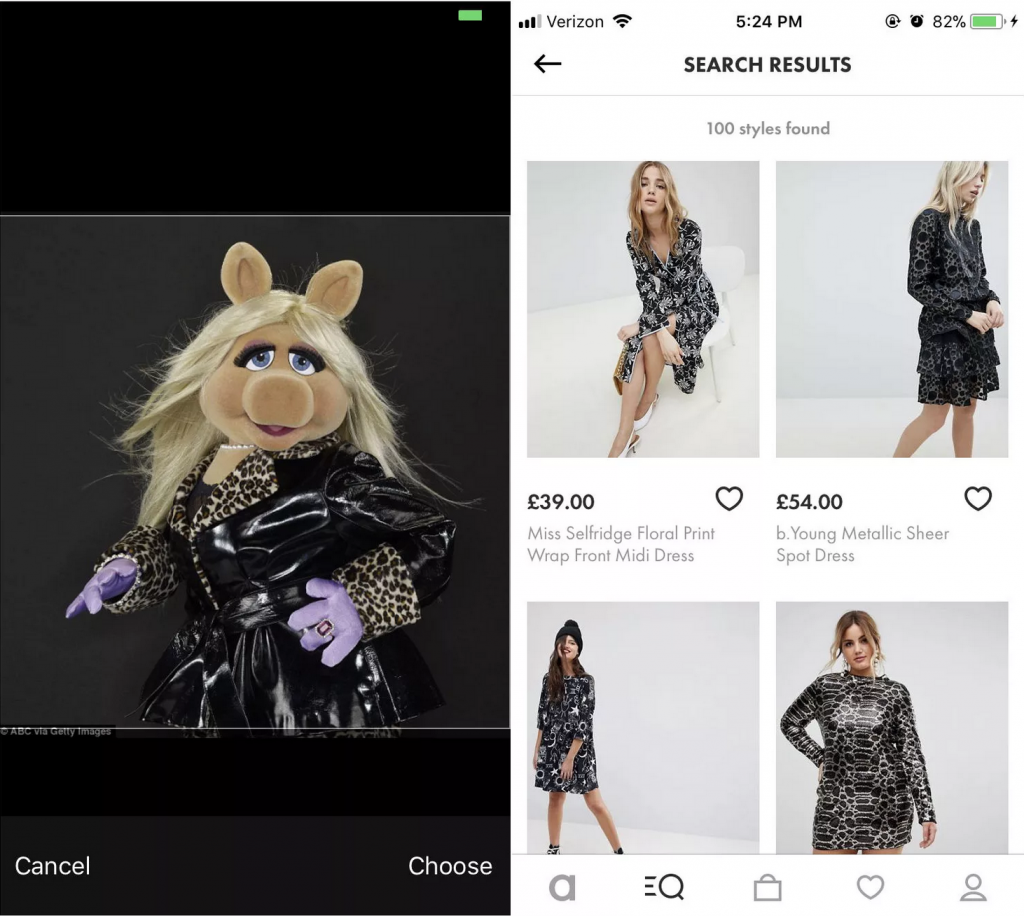

source: https://www.racked.com/2018/3/9/17097160/asos-style-match-visual-search

We all know Google’s image search: an immense database of images on your screen. Fewer people know about reverse image search, which is used, for example, to track down catfishes (people who pose online with someone else’s photos). A related but more complex technology is visual search. In visual search the input query is an image rather than text. Visual search engines thus offer the affordance of searching with images, without having to put your question into words.

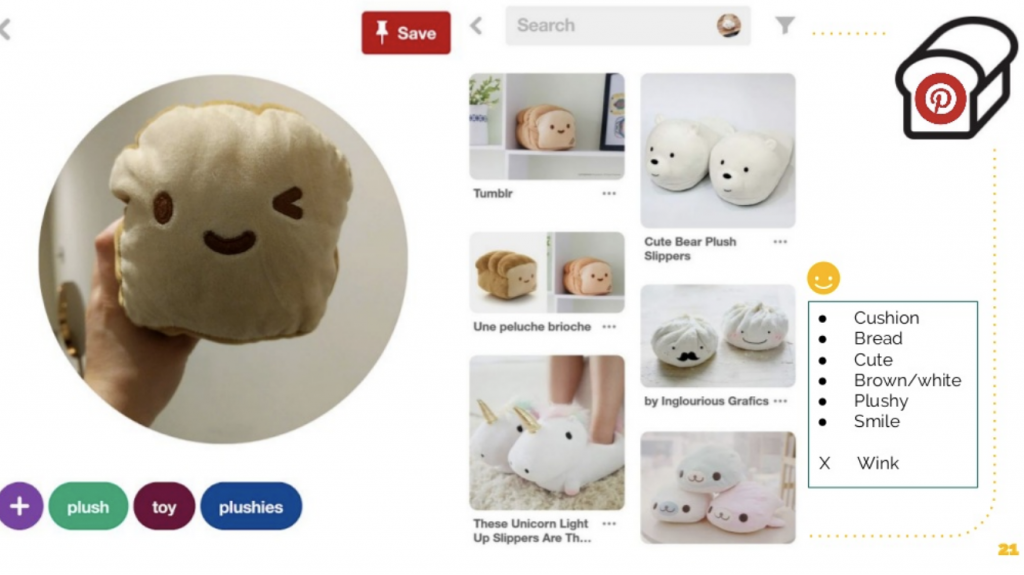

So, how does this work? There are roughly two kinds of computational visual search. The first provides information about the visual: it describes images in words or percentages, or adds other information. For example: ‘give me all images containing dogs, taken in the summer, without trees in them’. In this kind of visual search the images are often still classified with words. The second kind, by contrast, returns images as its results. For example: ‘give me all images similar to image X’.
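To make the first kind of query concrete, here is a minimal Python sketch. It assumes every image already carries word tags and a capture month; the `TaggedImage` record, the tag names and the `SUMMER_MONTHS` set are hypothetical illustrations, not any search engine’s API. Under those assumptions, ‘dogs, taken in the summer, without trees’ becomes a simple filter:

```python
from dataclasses import dataclass, field


@dataclass
class TaggedImage:
    """Hypothetical catalog entry: a file name plus word tags and the month it was taken."""
    filename: str
    tags: set = field(default_factory=set)
    month: int = 1  # 1-12


SUMMER_MONTHS = {6, 7, 8}  # assumption: northern-hemisphere summer


def tag_query(catalog, must_have, must_not_have, months):
    """Return file names of images that have all required tags, none of the excluded ones,
    and were taken in one of the given months."""
    return [
        img.filename
        for img in catalog
        if must_have <= img.tags              # every required tag is present
        and not (must_not_have & img.tags)    # no excluded tag is present
        and img.month in months
    ]


catalog = [
    TaggedImage("beach_dog.jpg", {"dog", "sand", "sea"}, month=7),
    TaggedImage("park_dog.jpg", {"dog", "tree", "grass"}, month=8),
    TaggedImage("snow_dog.jpg", {"dog", "snow"}, month=1),
    TaggedImage("beach_cat.jpg", {"cat", "sand"}, month=7),
]

print(tag_query(catalog, {"dog"}, {"tree"}, SUMMER_MONTHS))  # ['beach_dog.jpg']
```

The hard part in practice is producing the tags in the first place; once they exist, this kind of search is ordinary database filtering.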

Two techniques let the computer ‘see’. The first is content-based image retrieval, which analyses and processes the content of the images themselves. The second is ‘deep tagging’: an image-to-text feature that labels pictures with words, as in the first category described above. What is new about deep tagging today is that it is done automatically rather than by a person, picture by picture. Visual search engines use these keywords together with other contextual signals to interpret the images (Boyd, 2018).
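Content-based image retrieval skips the words and compares the pixels themselves. The sketch below is a deliberately simplified example of the second kind of query (‘give me all images similar to image X’): it describes each greyscale image by a brightness histogram and ranks a small collection by histogram intersection. Real engines use far richer features, such as learned image embeddings (Beaumont, 2020); the function names and the tiny test images are hypothetical, chosen only for illustration.

```python
def grey_histogram(pixels, bins=4):
    """Describe a greyscale image (rows of 0-255 values) by the share of pixels in each brightness bin."""
    flat = [p for row in pixels for p in row]
    counts = [0] * bins
    for p in flat:
        counts[p * bins // 256] += 1  # map 0-255 onto bin indices 0..bins-1
    return [c / len(flat) for c in counts]


def histogram_intersection(h1, h2):
    """Similarity in [0, 1]; 1.0 means the two brightness distributions are identical."""
    return sum(min(a, b) for a, b in zip(h1, h2))


def query_by_example(query, collection, bins=4):
    """Rank every image in `collection` (name -> pixels) by similarity to `query`, most similar first."""
    q = grey_histogram(query, bins)
    scored = [
        (name, histogram_intersection(q, grey_histogram(img, bins)))
        for name, img in collection.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Tiny made-up 2x2 greyscale "images" for illustration.
query = [[10, 20], [200, 220]]  # half dark, half bright
collection = {
    "dark.png": [[5, 15], [30, 40]],
    "similar.png": [[0, 50], [210, 250]],
    "mid.png": [[100, 110], [140, 150]],
}

for name, score in query_by_example(query, collection):
    print(name, score)
# similar.png 1.0
# dark.png 0.5
# mid.png 0.0
```

A histogram ignores where in the picture the bright and dark pixels are, which is exactly why simple features like this one are easily fooled and why modern systems moved to learned representations.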

‘Because a picture is worth a thousand text-searches’

Bing, Microsoft intelligent visual search

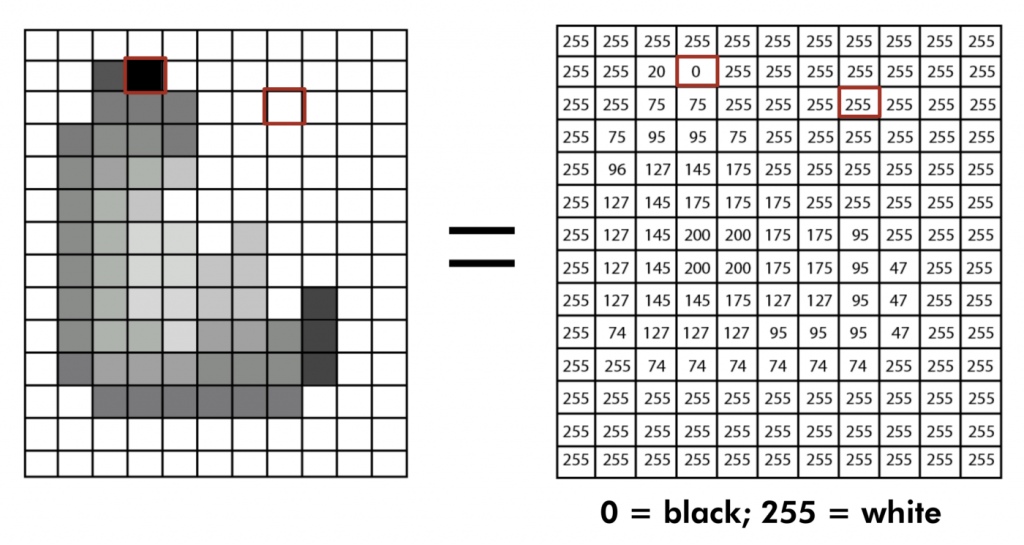

Because have you ever thought about how a computer ‘sees’? Does a computer see what we see, or how does it perceive images? Generally, visual search is a technology dealing with this matter. An image is perceived by a computer as something like this greyscale example:
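The greyscale view can be reproduced in a few lines of plain Python. In this sketch, a made-up 2×3 colour image is stored as rows of (R, G, B) triples from 0 to 255, and each pixel is reduced to a single brightness number using the ITU-R BT.601 luma weights, which are one common convention among several (the variable and function names are hypothetical):

```python
# A made-up 2x3 colour image: each pixel is an (R, G, B) triple with values 0-255.
rgb_image = [
    [(255, 255, 255), (255, 0, 0), (0, 0, 0)],
    [(0, 255, 0), (0, 0, 255), (128, 128, 128)],
]


def to_greyscale(image):
    """Reduce every (R, G, B) pixel to one brightness value with the ITU-R BT.601 luma weights."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in image
    ]


for row in to_greyscale(rgb_image):
    print(row)
# [255, 76, 0]
# [150, 29, 128]
```

White becomes 255, black becomes 0, and pure red, green and blue land at different shades of grey because the weights roughly follow how bright each colour looks to the human eye.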

What makes visual search a complex technology is this act of translation: turning the high-dimensional data of our world (photographs) into the language computers understand, numbers.
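A quick calculation shows why ‘high-dimensional’ is apt. Assuming an uncompressed photo of 640 × 480 pixels, a computer needs one number per pixel per colour channel, and unrolling the pixel grid row by row turns the picture into one long vector. The helper names below are hypothetical:

```python
def flatten(image):
    """Unroll a 2-D grid of pixel values, row by row, into one long vector."""
    return [value for row in image for value in row]


def dimensions(width, height, channels):
    """Count the numbers needed to describe an image: one per pixel per colour channel."""
    return width * height * channels


tiny = [[0, 128], [255, 64]]
print(flatten(tiny))            # [0, 128, 255, 64]
print(dimensions(640, 480, 1))  # 307200 (greyscale)
print(dimensions(640, 480, 3))  # 921600 (RGB colour)
```

Even a modest photo therefore becomes a point in a space with hundreds of thousands of dimensions, which is why visual search systems first compress images into much shorter feature vectors before comparing them.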

On to more practical matters: what is visual search used for? It makes it possible for anyone with a smartphone to identify a flower in a garden, identify food with their camera, or break down language barriers in everyday life.

Companies offering visual search include Google, Amazon, Microsoft, Pinterest and others. Facebook uses the technology to identify individuals in pictures on the platform. You may also know visual search from the photo gallery on your own smartphone: some phones automatically identify and categorize your personal photographs. It’s funny to see a friend’s face classified in the ‘food’ category. But visual search has a lot more to offer than this.

The combination of visual search and social media will make shopping even quicker. Click on what an influencer is wearing and get a range of look-alike options for that one jumper. Or take point-and-shoot photos of people in the street to score the same trainers. Visual search can also recommend which shoes go with certain trousers, as Pinterest offers.

Another practical example of visual search is a feature eBay Motors launched in 2018 that lets consumers find car parts via an app. The app shows an interactive schematic of the user’s vehicle on which they can tap the different parts, and it then redirects them to buy the needed part on eBay (Merten, 2018). This comes in handy when a person does not know what the part they are looking for is called, since one wouldn’t get very far Googling ‘the thingy next to the thingy’.

Other applications of visual search are still developing. Over the past two years, Pinterest has worked on skin tone ranges to support a more inclusive and representative visual search database (Bhasin, 2020).

However, there are also provocative, perhaps even dangerous, applications of visual search technology. As mentioned above, face detection in Facebook pictures already divides opinion. But what about face detection of people by security cameras? The combination of visual search with other emerging technologies, like Google Glass or smart lenses, also fuels debate. Google earlier disabled face recognition in its Google Goggles app because of serious privacy concerns (Schaap, 2011). Facial recognition prompts discussion about visual surveillance, since many organizations are now able to build complete profiles of individuals (Yang & Huang, 1994).

Moreover, visual search has made negative headlines in connection with self-driving vehicles, whose systems use the technology to detect pedestrians (Eckstein, 2011). This caused deadly accidents, because the cars were not programmed to recognize pedestrians crossing the road away from zebra crossings.

Another application of visual search is the detection of abnormalities in medical images: for example, about three out of four US mammograms are read with the help of computer-aided detection (Rao et al., 2010). These examples show how visual search is becoming embedded in society.

What’s next for visual search? Speculatively speaking, its future looks bright, especially in combination with other emerging technologies in the field. Think of immersive virtual reality, or of visual search engines combined with geolocation: Google Maps, Flickr… Food for thought, for both future fruits and future worries!

Of course, image understanding is a whole different subfield of computer vision, one that has more to do with the semantics of images. Right now, computers are not very good at interpreting images and cannot place them in a field of meaning. In fact, because images are subjective, interpreting them can be hard even for humans. This is why further research is needed in cognitive science and in the psychology of human visual search: how does a person process an image? Answering that question should later help to answer how a machine should do it in computational visual search. This is why the technology behind visual search engines is complex (Boyd, 2018).

One of the goals of visual search and computer vision is to make a computer see like a human brain. Or, as Gibson put it, “in order to perceive the world, one must already have ideas about it. Knowledge of the world is explained by assuming that knowledge of the world exists. Whether the ideas are learned or innate makes no difference” (Gibson, 1986, p. 304). Luckily, it will still take quite a few scientific steps before we teach computers to perceive the world as directly and realistically as humans do.

–

Beaumont, R. (2020, July 20). Image embeddings: Image similarity and building embeddings with modern computer vision. Medium. https://medium.com/@rom1504/image-embeddings-ed1b194d113e

Bhasin, L. (2020, August 20). Powering inclusive search & recommendations with our new visual skin tone model. Pinterest Engineering, Medium. https://medium.com/pinterest-engineering/powering-inclusive-search-recommendations-with-our-new-visual-skin-tone-model-1d3ba6eeffc7

Boyd, C. (2018, June 26). The Past, Present, and Future of Visual Search. Medium. https://medium.com/swlh/the-past-present-and-future-of-visual-search-9178f006a985

Eckstein, M. P. (2011). Visual search: A retrospective. Journal of Vision, 11(5), 14. https://doi.org/10.1167/11.5.14

Gibson, J. J. (1986). The Ecological Approach to Visual Perception (chapter “The Theory of Affordances”). New York; Hove, UK: Psychology Press.

Merten, P. (2018, October 30). Six Big Companies That Use Visual Search. ODSC – Open Data Science. https://opendatascience.com/six-big-companies-that-use-visual-search/

Rao, V. M., Levin, D. C., Parker, L., Cavanaugh, B., Frangos, A. J., & Sunshine, J. H. (2010). How widely is computer-aided detection used in screening and diagnostic mammography? Journal of the American College of Radiology, 7(10), 802–805. https://doi.org/10.1016/j.jacr.2010.05.019

Schaap, J. (2011, October 10). App Review: Google Goggles. Masters of Media, University of Amsterdam. https://mastersofmedia.hum.uva.nl/blog/2011/10/10/app-review-google-goggles/

Yang, G., & Huang, T. S. (1994). Human face detection in a complex background. Pattern Recognition, 27, 53–63. https://doi.org/10.1016/0031-3203(94)90017-5